Android Automotive OS (AAOS) builds on the core Android audio stack to support the use cases for operating as the infotainment system in a vehicle. AAOS is responsible for infotainment sounds (that is, media, navigation, and communications) but isn't directly responsible for chimes and warnings that have strict availability and timing requirements. While AAOS provides signals and mechanisms to help the vehicle manage audio, ultimately the vehicle decides which sounds are played for the driver and passengers, ensuring that safety-critical and regulatory sounds are heard without interruption.

As Android manages the vehicle's media experience, external media sources such as the radio tuner should be represented by apps, which can handle audio focus and media key events for the source.

Android 11 includes the following changes to automotive-related audio support:

- Automatic audio zone selection based on the associated User ID

- New system usages to support automotive-specific sounds

- HAL audio focus support

- Delayed audio focus for non-transient streams

- User setting to control interaction between navigation and calls

Android sounds and streams

Automotive audio systems handle the following sounds and streams:

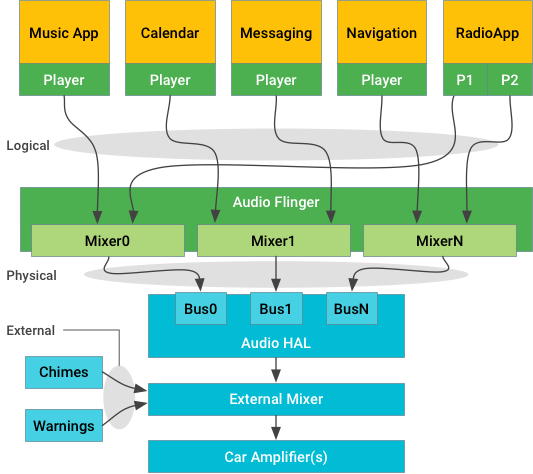

Figure 1. Stream-centric architecture diagram

Android manages the sounds coming from Android apps, controlling those apps and routing their sounds to output devices at the HAL based on the type of sound:

- Logical streams, known as sources in core audio nomenclature, are tagged with Audio Attributes.

- Physical streams, known as devices in core audio nomenclature, have no context information after mixing.

For reliability, external sounds (coming from independent sources such as seatbelt warning chimes) are managed outside Android, below the HAL or even in separate hardware. System implementers must provide a mixer that accepts one or more streams of sound input from Android and then combines those streams in a suitable way with the external sound sources required by the vehicle.

The HAL implementation and external mixer are responsible for ensuring the safety-critical external sounds are heard and for mixing in the Android-provided streams and routing them to suitable speakers.

Android sounds

Apps may have one or more players that interact through the standard Android APIs (for example, AudioManager for focus control or MediaPlayer for streaming) to emit one or more logical streams of audio data. This data could be single channel mono or 7.1 surround, but is routed and treated as a single source. The app stream is associated with AudioAttributes that give the system hints about how the audio should be expressed.

The logical streams are sent through AudioService and routed to one (and only one) of the available physical output streams, each of which is the output of a mixer within AudioFlinger. After the logical streams have been mixed down to a physical stream, their audio attributes are no longer available.
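To make the loss of context concrete, here is a minimal plain-Java sketch of what a software mixer does when it combines several 16-bit logical streams into one physical stream: it sums the samples and clamps the result to the 16-bit range. The output is only samples, so nothing in it records which input (or which AudioAttributes) contributed what. The class and method names are hypothetical; AudioFlinger's real mixer is considerably more involved (per-track volume, resampling, format conversion).

```java
/** Hypothetical sketch: mixing logical streams down to one physical stream. */
final class PcmMixer {
    private PcmMixer() {}

    /**
     * Sums several 16-bit PCM buffers sample by sample and clamps the result
     * to the 16-bit range. All inputs must have the same length.
     */
    static short[] mix(short[]... inputs) {
        if (inputs.length == 0) {
            return new short[0];
        }
        int length = inputs[0].length;
        for (short[] in : inputs) {
            if (in.length != length) {
                throw new IllegalArgumentException("all inputs must have the same length");
            }
        }
        short[] out = new short[length];
        for (int i = 0; i < length; i++) {
            int sum = 0; // accumulate in an int so intermediate sums cannot overflow a short
            for (short[] in : inputs) {
                sum += in[i];
            }
            // Saturate at the 16-bit limits instead of letting the value wrap around.
            out[i] = (short) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, sum));
        }
        return out;
    }
}
```

For example, mixing {1000, -2000, 30000} with {500, -500, 10000} yields {1500, -2500, 32767}; the last sample saturates.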

Each physical stream is then delivered to the Audio HAL for rendering on the hardware. In automotive systems, the rendering hardware can be local codecs (similar to mobile devices) or a remote processor across the vehicle's physical network. Either way, it's the job of the Audio HAL implementation to deliver the actual sample data and cause it to become audible.

External streams

Sound streams that shouldn't be routed through Android (for certification or timing reasons) may be sent directly to the external mixer. As of Android 11, the HAL can request focus for these external sounds so that Android can take appropriate actions, such as pausing media or preventing other apps from gaining focus.

If external streams are media sources that should interact with the sound environment Android is generating (for example, stop MP3 playback when an external tuner is turned on), those external streams should be represented by an Android app. Such an app would request audio focus on behalf of the media source instead of the HAL, and would respond to focus notifications by starting/stopping the external source as necessary to fit into the Android focus policy. The app is also responsible for handling media key events such as play/pause. One suggested mechanism to control such external devices is HwAudioSource.
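The focus handling such an app performs can be sketched in plain Java. In a real app this logic would live in an AudioManager.OnAudioFocusChangeListener; here the focus-change constants are copied into the class (their values match AudioManager's AUDIOFOCUS_* constants), and ExternalTuner is a hypothetical interface standing in for whatever actually controls the external source, for example something built on HwAudioSource. The mapping shown (pause on a transient loss and resume on regain, stop for good on a permanent loss, duck on a can-duck loss) is a common media-app convention assumed for this sketch, not a rule stated by AAOS.

```java
/** Hypothetical control surface for an external source such as a tuner. */
interface ExternalTuner {
    void start();
    void stop();
    void setDucked(boolean ducked);
}

/** Sketch of the focus policy an app representing an external source might apply. */
final class TunerFocusPolicy {
    // Same values as the android.media.AudioManager.AUDIOFOCUS_* constants.
    static final int AUDIOFOCUS_GAIN = 1;
    static final int AUDIOFOCUS_LOSS = -1;
    static final int AUDIOFOCUS_LOSS_TRANSIENT = -2;
    static final int AUDIOFOCUS_LOSS_TRANSIENT_CAN_DUCK = -3;

    private final ExternalTuner tuner;
    private boolean resumeOnGain; // true only after a transient loss

    TunerFocusPolicy(ExternalTuner tuner) {
        this.tuner = tuner;
    }

    /** Mirrors OnAudioFocusChangeListener.onAudioFocusChange(int). */
    void onAudioFocusChange(int focusChange) {
        switch (focusChange) {
            case AUDIOFOCUS_GAIN:
                tuner.setDucked(false);
                if (resumeOnGain) {
                    tuner.start();
                    resumeOnGain = false;
                }
                break;
            case AUDIOFOCUS_LOSS_TRANSIENT:
                tuner.stop();
                resumeOnGain = true; // e.g. a short prompt; resume when focus returns
                break;
            case AUDIOFOCUS_LOSS_TRANSIENT_CAN_DUCK:
                tuner.setDucked(true); // keep playing at a lower level
                break;
            case AUDIOFOCUS_LOSS:
                tuner.stop();
                resumeOnGain = false; // e.g. other media took over; stay stopped
                break;
            default:
                break;
        }
    }
}
```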

Output devices

At the Audio HAL level, the device type AUDIO_DEVICE_OUT_BUS provides a generic output device for use in vehicle audio systems. The bus device supports addressable ports (where each port is the end point for a physical stream) and is expected to be the only supported output device type in a vehicle.

A system implementation can use one bus port for all Android sounds, in which case Android mixes everything together and delivers it as one stream. Alternatively, the HAL can provide one bus port for each CarAudioContext to allow concurrent delivery of any sound type. This makes it possible for the HAL implementation to mix and duck the different sounds as desired.

The assignment of audio contexts to output devices is done through car_audio_configuration.xml.

Microphone input

When capturing audio, the Audio HAL receives an openInputStream call that includes an AudioSource argument indicating how the microphone input should be processed.

The VOICE_RECOGNITION source (specifically the Google Assistant) expects a stereo microphone stream that has an echo cancellation effect (if available) but no other processing applied to it. Beamforming is expected to be done by the Assistant.

Multi-channel microphone input

To capture audio from a device with more than two channels (stereo), use a channel index mask instead of a positional channel mask (such as CHANNEL_IN_LEFT). For example:

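```java
import android.media.AudioFormat;
import android.media.AudioRecord;

// Select four input channels by index (bits 0..3) rather than by position.
final AudioFormat audioFormat = new AudioFormat.Builder()
        .setEncoding(AudioFormat.ENCODING_PCM_16BIT)
        .setSampleRate(44100)
        .setChannelIndexMask(0xf /* 4 channels, 0..3 */)
        .build();

// Building an AudioRecord requires the RECORD_AUDIO permission.
final AudioRecord audioRecord = new AudioRecord.Builder()
        .setAudioFormat(audioFormat)
        .build();
// someAudioDeviceInfo: the AudioDeviceInfo of the multi-channel input device.
audioRecord.setPreferredDevice(someAudioDeviceInfo);
```

When both setChannelMask and setChannelIndexMask are set, AudioRecord uses only the value set by setChannelMask (maximum of two channels).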
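The 0xf in the example is simply a bit mask with one bit set per channel index. The small helper below (hypothetical, not part of the Android API) makes the arithmetic explicit: selecting channels 0 to n-1 sets the low n bits, and any subset of indices can be selected the same way. The resulting value is what would be passed to AudioFormat.Builder#setChannelIndexMask.

```java
/** Hypothetical helper for building AudioFormat channel index masks. */
final class ChannelIndexMasks {
    private ChannelIndexMasks() {}

    /** Mask selecting channel indices 0..count-1, for example 4 -> 0xf. */
    static int firstChannels(int count) {
        if (count < 1 || count > 31) {
            throw new IllegalArgumentException("count out of range: " + count);
        }
        return (1 << count) - 1;
    }

    /** Mask selecting an arbitrary set of channel indices, for example {0, 2} -> 0x5. */
    static int ofIndices(int... indices) {
        int mask = 0;
        for (int index : indices) {
            if (index < 0 || index > 30) {
                throw new IllegalArgumentException("index out of range: " + index);
            }
            mask |= 1 << index;
        }
        return mask;
    }

    /** Number of channels a mask selects (one per set bit). */
    static int channelCount(int mask) {
        return Integer.bitCount(mask);
    }
}
```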

Concurrent capture

As of Android 10, the Android framework supports concurrent capture of inputs, but with restrictions to protect the user's privacy. Virtual sources such as AUDIO_SOURCE_FM_TUNER are exempt from these restrictions and can therefore be captured concurrently with a regular input (such as the microphone). HwAudioSources are also not subject to concurrent capture restrictions.

Apps designed to work with AUDIO_DEVICE_IN_BUS devices or with secondary AUDIO_DEVICE_IN_FM_TUNER devices must explicitly identify those devices and use AudioRecord.setPreferredDevice() to bypass the Android default source selection logic.

Audio usages

AAOS primarily uses AudioAttributes.AttributeUsages for routing, volume adjustments, and focus management. Usages represent why the stream is being played, so all streams and audio focus requests should specify a usage for their audio playback. If no usage is set when building an AudioAttributes object, the usage defaults to USAGE_UNKNOWN. Although this is currently treated the same as USAGE_MEDIA, media playback should not rely on this behavior.

System usages

Android 11 introduced system usages. These usages behave like the previously established usages, except that using them requires system APIs as well as the android.permission.MODIFY_AUDIO_ROUTING permission. The new system usages are:

- USAGE_EMERGENCY

- USAGE_SAFETY

- USAGE_VEHICLE_STATUS

- USAGE_ANNOUNCEMENT

To construct an AudioAttributes with a system usage, use AudioAttributes.Builder#setSystemUsage instead of setUsage. Calling this method with a non-system usage throws an IllegalArgumentException. Also, if both a system usage and a usage have been set on a builder, building it throws an IllegalArgumentException.

To check which usage is associated with an AudioAttributes instance, call AudioAttributes#getSystemUsage, which returns the associated usage or system usage.
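The builder rules above can be summarized in a plain-Java model. This is not the framework's AudioAttributes.Builder (setSystemUsage is a system API that only compiles against a platform build); the class, its string-based usages, and the fallback to "USAGE_UNKNOWN" are hypothetical and model only the two IllegalArgumentException cases and the default described in this section.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical model of the setUsage/setSystemUsage validation rules. */
final class UsageBuilderModel {
    private static final Set<String> SYSTEM_USAGES = new HashSet<>(Arrays.asList(
            "USAGE_EMERGENCY", "USAGE_SAFETY", "USAGE_VEHICLE_STATUS", "USAGE_ANNOUNCEMENT"));

    private String usage;       // value passed to setUsage, if any
    private String systemUsage; // value passed to setSystemUsage, if any

    UsageBuilderModel setUsage(String usage) {
        this.usage = usage;
        return this;
    }

    /** Rejects non-system usages immediately, as described above. */
    UsageBuilderModel setSystemUsage(String systemUsage) {
        if (!SYSTEM_USAGES.contains(systemUsage)) {
            throw new IllegalArgumentException("not a system usage: " + systemUsage);
        }
        this.systemUsage = systemUsage;
        return this;
    }

    /** Returns the effective usage, i.e. what getSystemUsage() would report. */
    String build() {
        if (usage != null && systemUsage != null) {
            throw new IllegalArgumentException("both a usage and a system usage are set");
        }
        if (systemUsage != null) {
            return systemUsage;
        }
        return usage != null ? usage : "USAGE_UNKNOWN"; // default when nothing is set
    }
}
```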

Audio contexts

To simplify configuration of AAOS audio, similar usages are grouped into audio contexts (CarAudioContext). These audio contexts are used throughout CarAudioService to define routing, volume groups, and audio focus management.

The audio contexts in Android 11 are:

| CarAudioContext | Associated AttributeUsages |
|---|---|
| MUSIC | UNKNOWN, GAME, MEDIA |
| NAVIGATION | ASSISTANCE_NAVIGATION_GUIDANCE |
| VOICE_COMMAND | ASSISTANT, ASSISTANCE_ACCESSIBILITY |
| CALL_RING | NOTIFICATION_RINGTONE |
| CALL | VOICE_COMMUNICATION, VOICE_COMMUNICATION_SIGNALING |
| ALARM | ALARM |
| NOTIFICATION | NOTIFICATION, NOTIFICATION_* |
| SYSTEM_SOUND | ASSISTANCE_SONIFICATION |
| EMERGENCY | EMERGENCY |
| SAFETY | SAFETY |
| VEHICLE_STATUS | VEHICLE_STATUS |
| ANNOUNCEMENT | ANNOUNCEMENT |

Mapping between audio contexts and usages. The EMERGENCY, SAFETY, VEHICLE_STATUS, and ANNOUNCEMENT rows are for the new system usages.
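The grouping in the table can be expressed as a lookup. The sketch below is a plain-Java approximation of the table, not CarAudioService's implementation: usages are passed as name strings without the USAGE_ prefix, the enum name is hypothetical, the NOTIFICATION_* row is handled by a prefix match that comes after the exact lookup (so NOTIFICATION_RINGTONE still lands in CALL_RING), and throwing for names outside the table is a choice of this sketch.

```java
import java.util.HashMap;
import java.util.Map;

/** Audio contexts from the Android 11 table (hypothetical enum for illustration). */
enum CarAudioContextSketch {
    MUSIC, NAVIGATION, VOICE_COMMAND, CALL_RING, CALL, ALARM, NOTIFICATION,
    SYSTEM_SOUND, EMERGENCY, SAFETY, VEHICLE_STATUS, ANNOUNCEMENT
}

/** Hypothetical usage-to-context lookup mirroring the table. */
final class UsageToContext {
    private static final Map<String, CarAudioContextSketch> EXACT = new HashMap<>();

    static {
        put(CarAudioContextSketch.MUSIC, "UNKNOWN", "GAME", "MEDIA");
        put(CarAudioContextSketch.NAVIGATION, "ASSISTANCE_NAVIGATION_GUIDANCE");
        put(CarAudioContextSketch.VOICE_COMMAND, "ASSISTANT", "ASSISTANCE_ACCESSIBILITY");
        put(CarAudioContextSketch.CALL_RING, "NOTIFICATION_RINGTONE");
        put(CarAudioContextSketch.CALL, "VOICE_COMMUNICATION", "VOICE_COMMUNICATION_SIGNALING");
        put(CarAudioContextSketch.ALARM, "ALARM");
        put(CarAudioContextSketch.NOTIFICATION, "NOTIFICATION");
        put(CarAudioContextSketch.SYSTEM_SOUND, "ASSISTANCE_SONIFICATION");
        put(CarAudioContextSketch.EMERGENCY, "EMERGENCY");
        put(CarAudioContextSketch.SAFETY, "SAFETY");
        put(CarAudioContextSketch.VEHICLE_STATUS, "VEHICLE_STATUS");
        put(CarAudioContextSketch.ANNOUNCEMENT, "ANNOUNCEMENT");
    }

    private UsageToContext() {}

    private static void put(CarAudioContextSketch context, String... usages) {
        for (String usage : usages) {
            EXACT.put(usage, context);
        }
    }

    /** Maps a usage name (without the USAGE_ prefix) to its audio context. */
    static CarAudioContextSketch contextFor(String usage) {
        CarAudioContextSketch context = EXACT.get(usage);
        if (context != null) {
            return context; // exact match, including NOTIFICATION_RINGTONE -> CALL_RING
        }
        if (usage.startsWith("NOTIFICATION_")) {
            return CarAudioContextSketch.NOTIFICATION; // the NOTIFICATION_* row
        }
        throw new IllegalArgumentException("usage not in table: " + usage);
    }
}
```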

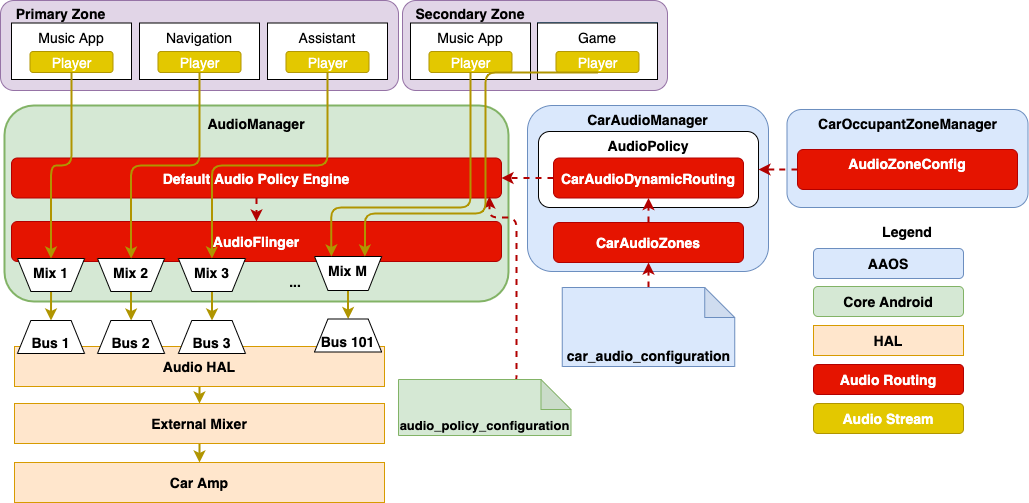

Multi-zone audio

With automotive comes a new set of use cases around concurrent users interacting with the platform and looking to consume separate media. For example, a driver can play music in the cabin while passengers in the back seat are watching a YouTube video on the rear display. Multi-zone audio enables this by allowing different audio sources to play concurrently through different areas of the vehicle.

Starting in Android 10, multi-zone audio enables OEMs to configure audio into separate zones. Each zone is a collection of devices within the vehicle with its own volume groups, routing configuration for contexts, and focus management. In this manner, the main cabin could be configured as one audio zone, while the rear display's headphone jacks could be configured as a second zone.

The zones are defined as part of car_audio_configuration.xml. CarAudioService then reads the configuration and helps AudioService route audio streams based on their associated zone. Each zone still defines rules for routing based on contexts and the application's UID. When a player is created, CarAudioService determines which zone the player is associated with and then, based on the usage, which device AudioFlinger should route the audio to.
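The two-step lookup (player to zone, then context to device) can be sketched as follows. The class, the UID-to-zone map, the context names as strings, and the bus addresses in the usage example are hypothetical; in a real system this data comes from car_audio_configuration.xml and CarAudioService's own state, and the context would be derived from the player's usage. Falling back to zone 0 for UIDs with no explicit assignment is an assumption of this sketch.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical two-step router: UID -> zone, then context -> bus address. */
final class ZoneRouterSketch {
    static final int PRIMARY_ZONE = 0; // fallback zone (assumption of this sketch)

    private final Map<Integer, Integer> zoneByUid = new HashMap<>();
    private final Map<Integer, Map<String, String>> addressByZoneAndContext = new HashMap<>();

    void assignUidToZone(int uid, int zoneId) {
        zoneByUid.put(uid, zoneId);
    }

    void routeContext(int zoneId, String context, String busAddress) {
        addressByZoneAndContext
                .computeIfAbsent(zoneId, z -> new HashMap<>())
                .put(context, busAddress);
    }

    /** Returns the bus address for a player owned by uid with the given context. */
    String outputFor(int uid, String context) {
        int zoneId = zoneByUid.getOrDefault(uid, PRIMARY_ZONE); // step 1: which zone
        Map<String, String> routes = addressByZoneAndContext.get(zoneId);
        String address = routes == null ? null : routes.get(context); // step 2: which device
        if (address == null) {
            throw new IllegalStateException(
                    "zone " + zoneId + " has no device for context " + context);
        }
        return address;
    }
}
```

With MUSIC routed to "bus0_media_out" in zone 0 and to "bus100_rear_seat_out" in zone 1, a player whose UID is assigned to zone 1 resolves to the rear bus, while an unassigned UID stays on the cabin buses.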

Focus is also maintained independently for each audio zone. This enables apps in different zones to produce audio independently without interfering with each other, while apps still respect focus changes within their own zone. CarZonesAudioFocus within CarAudioService is responsible for managing focus for each zone.

Figure 2. Configure multi-zone audio
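Per-zone focus can be pictured as one focus stack per zone. The sketch below is a deliberate simplification and is not CarZonesAudioFocus: the class and client IDs are hypothetical, and there are no transient, ducking, or delayed-focus semantics. It only shows the key property described above: a request displaces the current holder in its own zone, so a rear-seat request never interrupts the driver's media.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical per-zone focus bookkeeping: one independent stack per zone. */
final class ZoneFocusSketch {
    private final Map<Integer, Deque<String>> stacks = new HashMap<>();

    /** Grants focus to clientId in zoneId; returns the client that lost it, or null. */
    String requestFocus(int zoneId, String clientId) {
        Deque<String> stack = stacks.computeIfAbsent(zoneId, z -> new ArrayDeque<>());
        String previous = stack.peek();
        stack.remove(clientId); // a client holds at most one entry per zone
        stack.push(clientId);
        return clientId.equals(previous) ? null : previous;
    }

    /** Releases clientId's focus in zoneId; returns the client that regains it, or null. */
    String abandonFocus(int zoneId, String clientId) {
        Deque<String> stack = stacks.get(zoneId);
        if (stack == null) {
            return null;
        }
        boolean wasHolder = clientId.equals(stack.peek());
        stack.remove(clientId);
        return wasHolder ? stack.peek() : null;
    }

    /** Current focus holder in zoneId, or null if nobody holds focus there. */
    String holder(int zoneId) {
        Deque<String> stack = stacks.get(zoneId);
        return stack == null ? null : stack.peek();
    }
}
```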

Audio HAL

Automotive audio implementations rely on the standard Android Audio HAL, which includes the following:

- IDevice.hal. Creates input and output streams, handles main volume and muting, and uses:

  - createAudioPatch to create external-external patches between devices.

  - IDevice.setAudioPortConfig() to provide volume for each physical stream.

- IStream.hal. Along with the input and output variants, manages the streaming of audio samples to and from the hardware.

Automotive device types

The following device types are relevant for automotive platforms.

| Device type | Description |
|---|---|
| AUDIO_DEVICE_OUT_BUS | Primary output from Android (this is how all audio from Android is delivered to the vehicle). Used as the address for disambiguating streams for each context. |
| AUDIO_DEVICE_OUT_TELEPHONY_TX | Used for audio routed to the cellular radio for transmission. |
| AUDIO_DEVICE_IN_BUS | Used for inputs not otherwise classified. |
| AUDIO_DEVICE_IN_FM_TUNER | Used only for broadcast radio input. |
| AUDIO_DEVICE_IN_TV_TUNER | Used for a TV device if present. |
| AUDIO_DEVICE_IN_LINE | Used for AUX input jack. |
| AUDIO_DEVICE_IN_BLUETOOTH_A2DP | Music received over Bluetooth. |
| AUDIO_DEVICE_IN_TELEPHONY_RX | Used for audio received from the cellular radio associated with a phone call. |

Configuring audio devices

Audio devices visible to Android must be defined in /audio_policy_configuration.xml, which includes the following components:

- module name. Supports "primary" (used for automotive use cases), "A2DP", "remote_submix", and "USB". The module name and the corresponding audio driver should be compiled to audio.primary.$(variant).so.

- devicePorts. Contains a list of device descriptors for all input and output devices (including permanently attached and removable devices) that can be accessed from this module.

  - For each output device, you can define gain control that consists of min/max/default/step values in millibels (1 millibel = 1/100 dB = 1/1000 bel).

  - The address attribute on a devicePort instance can be used to find the device, even if there are multiple devices with the same device type, such as AUDIO_DEVICE_OUT_BUS.

- mixPorts. Contains a list of all output and input streams exposed by the audio HAL. Each mixPort instance can be considered a physical stream to Android AudioService.

- routes. Defines a list of possible connections between input and output devices or between a stream and a device.

The following example defines an output device bus0_phone_out in which all Android audio streams are mixed by mixport_bus0_phone_out. The route takes the output stream of mixport_bus0_phone_out to device bus0_phone_out.

```xml
<audioPolicyConfiguration version="1.0" xmlns:xi="http://www.w3.org/2001/XInclude">
    <modules>
        <module name="primary" halVersion="3.0">
            <attachedDevices>
                <item>bus0_phone_out</item>
            </attachedDevices>
            <defaultOutputDevice>bus0_phone_out</defaultOutputDevice>
            <mixPorts>
                <mixPort name="mixport_bus0_phone_out" role="source" flags="AUDIO_OUTPUT_FLAG_PRIMARY">
                    <profile name="" format="AUDIO_FORMAT_PCM_16_BIT"
                            samplingRates="48000" channelMasks="AUDIO_CHANNEL_OUT_STEREO"/>
                </mixPort>
            </mixPorts>
            <devicePorts>
                <devicePort tagName="bus0_phone_out" role="sink" type="AUDIO_DEVICE_OUT_BUS"
                        address="BUS00_PHONE">
                    <profile name="" format="AUDIO_FORMAT_PCM_16_BIT"
                            samplingRates="48000" channelMasks="AUDIO_CHANNEL_OUT_STEREO"/>
                    <gains>
                        <gain name="" mode="AUDIO_GAIN_MODE_JOINT"
                                minValueMB="-8400" maxValueMB="4000"
                                defaultValueMB="0" stepValueMB="100"/>
                    </gains>
                </devicePort>
            </devicePorts>
            <routes>
                <route type="mix" sink="bus0_phone_out" sources="mixport_bus0_phone_out"/>
            </routes>
        </module>
    </modules>
</audioPolicyConfiguration>
```
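Because gain values in audio_policy_configuration.xml are expressed in millibels, interpreting the gain element in the example above is plain arithmetic: dB = mB / 100, and the corresponding linear amplitude factor is 10^(dB/20). The helper below is a hypothetical illustration of that arithmetic, not an Android API.

```java
/** Hypothetical helpers for interpreting millibel gain values. */
final class MillibelGain {
    private MillibelGain() {}

    /** 1 millibel = 1/100 dB. */
    static double toDecibels(int millibels) {
        return millibels / 100.0;
    }

    /** Linear amplitude factor for a gain in millibels: 10^(dB / 20). */
    static double toLinearAmplitude(int millibels) {
        return Math.pow(10.0, toDecibels(millibels) / 20.0);
    }

    /** Number of discrete steps between minMb and maxMb for a given step size. */
    static int stepCount(int minMb, int maxMb, int stepMb) {
        if (stepMb <= 0 || maxMb < minMb || (maxMb - minMb) % stepMb != 0) {
            throw new IllegalArgumentException("range is not a whole number of steps");
        }
        return (maxMb - minMb) / stepMb;
    }
}
```

Applied to the example, minValueMB="-8400" is -84 dB, maxValueMB="4000" is +40 dB, and a 100 mB step gives 124 steps (125 selectable values) across that range.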