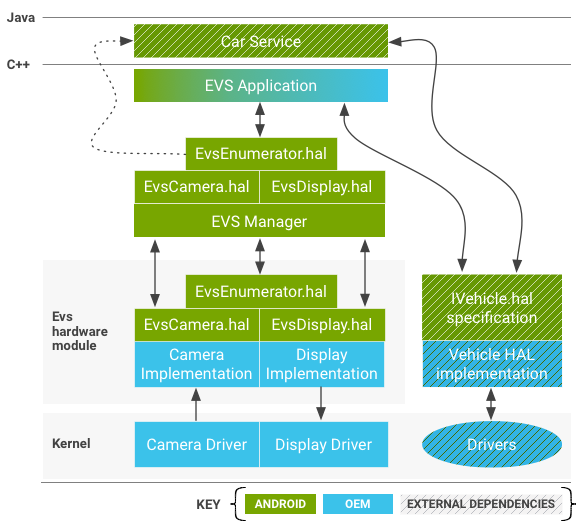

Android contains an automotive HIDL Hardware Abstraction Layer (HAL) that provides image capture and display very early in the Android boot process and continues functioning for the life of the system. The HAL includes the exterior view system (EVS) stack and is typically used to support rearview camera and surround view displays in vehicles with Android-based in-vehicle infotainment (IVI) systems. EVS also enables advanced features to be implemented in user apps.

Android also includes an EVS-specific capture and display driver interface (in /hardware/interfaces/automotive/evs/1.0). While it is possible to build a rearview camera app on top of the existing Android camera and display services, such an app would likely start too late in the Android boot process. Using a dedicated HAL enables a streamlined interface and makes it clear what an OEM needs to implement to support the EVS stack.

System components

EVS includes the following system components:

EVS app

A sample C++ EVS app (/packages/services/Car/evs/app) serves as a reference implementation. This app is responsible for requesting video frames from the EVS Manager and sending finished frames for display back to the EVS Manager. It is expected to be started by init as soon as EVS and Car Service are available, targeted within two (2) seconds of power on. OEMs can modify or replace the EVS app as desired.

EVS Manager

The EVS Manager (/packages/services/Car/evs/manager) provides the building blocks an EVS app needs to implement anything from a simple rearview camera display to multi-camera rendering with six degrees of freedom (6DOF). Its interface is presented through HIDL and is built to accept multiple concurrent clients. Other apps and services (specifically the Car Service) can query the EVS Manager state to find out when the EVS system is active.

EVS HIDL interface

The EVS system, both the camera and the display elements, is defined in the android.hardware.automotive.evs package. A sample implementation that exercises the interface (it generates synthetic test images and validates that the images make the round trip) is provided in /hardware/interfaces/automotive/evs/1.0/default.

The OEM is responsible for implementing the API expressed by the .hal files in /hardware/interfaces/automotive/evs. Such implementations are responsible for configuring and gathering data from physical cameras and delivering it through shared memory buffers recognizable by Gralloc. The display side of the implementation is responsible for providing a shared memory buffer that can be filled by the app (usually through EGL rendering) and for presenting the finished frames in preference to anything else that might want to appear on the physical display. Vendor implementations of the EVS interface may be stored under /vendor/… /device/… or hardware/… (for example, /hardware/[vendor]/[platform]/evs).

Kernel drivers

A device that supports the EVS stack requires kernel drivers. Instead of creating new drivers, OEMs have the option to support the features EVS requires through existing camera and/or display hardware drivers. Reusing drivers can be advantageous, especially for display drivers, where image presentation may require coordination with other active threads. Android 8.0 includes a v4l2-based sample driver (in packages/services/Car/evs/sampleDriver) that depends on the kernel for v4l2 support and on SurfaceFlinger for presenting the output image.

EVS hardware interface description

This section describes the HAL. Vendors are expected to provide implementations of this API adapted to their hardware.

IEvsEnumerator

This object is responsible for enumerating the available EVS hardware in the system (one or more cameras and the single display device).

getCameraList() generates (vec<CameraDesc> cameras);

Returns a vector containing descriptions of all cameras in the system. The set of cameras is assumed to be fixed and knowable at boot time. For details on camera descriptions, see CameraDesc.

openCamera(string camera_id) generates (IEvsCamera camera);

Obtains an interface object used to interact with a specific camera identified by the unique camera_id string. Returns NULL on failure. Attempts to reopen a camera that is already open cannot fail. To avoid race conditions associated with app startup and shutdown, reopening a camera should shut down the previous instance so that the new request can be fulfilled. A camera instance that has been preempted in this way must be put into an inactive state, awaiting final destruction and responding to any request to change the camera state with a return code of OWNERSHIP_LOST.
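The reopen rule can be modeled in a few lines of plain C++. This is a minimal sketch under stated assumptions, not HAL code: `Result`, `FakeCamera`, and `FakeEnumerator` are hypothetical stand-ins for EvsResult, IEvsCamera, and IEvsEnumerator, and the set of known camera IDs is supplied by the example itself.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <set>
#include <string>
#include <utility>

// Hypothetical stand-in for the EvsResult codes named in the text.
enum class Result { OK, OWNERSHIP_LOST };

// Hypothetical stand-in for one IEvsCamera instance.
class FakeCamera {
public:
    explicit FakeCamera(std::string id) : mId(std::move(id)) {}

    // Called when a newer open request preempts this instance.
    void invalidate() { mActive = false; }

    // A state-changing request on a preempted instance reports OWNERSHIP_LOST.
    Result setMaxFramesInFlight(int bufferCount) {
        if (!mActive) return Result::OWNERSHIP_LOST;
        mMaxFrames = bufferCount;
        return Result::OK;
    }

    bool active() const { return mActive; }
    int maxFrames() const { return mMaxFrames; }
    const std::string& id() const { return mId; }

private:
    std::string mId;
    bool mActive = true;
    int mMaxFrames = 1;
};

// Hypothetical stand-in for IEvsEnumerator.
class FakeEnumerator {
public:
    explicit FakeEnumerator(std::set<std::string> knownIds) : mKnown(std::move(knownIds)) {}

    // Returns nullptr for an unknown camera_id. For a known camera the call
    // always succeeds: a previous instance, if any, is put into the inactive state.
    std::shared_ptr<FakeCamera> openCamera(const std::string& cameraId) {
        if (mKnown.count(cameraId) == 0) return nullptr;
        auto it = mOpen.find(cameraId);
        if (it != mOpen.end()) it->second->invalidate();
        auto camera = std::make_shared<FakeCamera>(cameraId);
        mOpen[cameraId] = camera;
        assert(camera->active());
        return camera;
    }

private:
    std::set<std::string> mKnown;
    std::map<std::string, std::shared_ptr<FakeCamera>> mOpen;
};
```

In this model, a client that still holds the older pointer keeps a valid object, but every state change on it returns `OWNERSHIP_LOST`, which is the cue to close and release it.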

closeCamera(IEvsCamera camera);

Releases the IEvsCamera interface (and is the opposite of the openCamera() call). The camera video stream must be stopped by calling stopVideoStream() before calling closeCamera.

openDisplay() generates (IEvsDisplay display);

Obtains an interface object used to interact exclusively with the system's EVS display. Only one client may hold a functional instance of IEvsDisplay at a time. Similar to the aggressive open behavior described for openCamera, a new IEvsDisplay object may be created at any time and disables any previous instances. Invalidated instances continue to exist and respond to function calls from their owners, but must perform no mutating operations once dead. Eventually, the client app is expected to notice the OWNERSHIP_LOST error return codes and close and release the inactive interface.

closeDisplay(IEvsDisplay display);

Releases the IEvsDisplay interface (and is the opposite of the openDisplay() call). Outstanding buffers received through getTargetBuffer() calls must be returned to the display before closing the display.

getDisplayState() generates (DisplayState state);

Gets the current display state. The HAL implementation should report the actual current state, which might differ from the most recently requested state. The logic responsible for changing display states should exist above the device layer, making it undesirable for the HAL implementation to change display states spontaneously. If the display is not currently held by any client (through a call to openDisplay), this function returns NOT_OPEN. Otherwise, it reports the current state of the EVS display (see IEvsDisplay API).

struct CameraDesc {

string camera_id;

int32 vendor_flags; // Opaque value

}

- camera_id. A string that uniquely identifies a given camera. It can be the kernel device name of the device or a name for the device, such as reverseview. The value of this string is chosen by the HAL implementation and used opaquely by the stack above.

- vendor_flags. A method for passing specialized camera information opaquely from the driver to a custom EVS app. It is passed uninterpreted from the driver up to the EVS app, which is free to ignore it.

IEvsCamera

This object represents a single camera and is the primary interface for capturing images.

getCameraInfo() generates (CameraDesc info);

Returns the CameraDesc of this camera.

setMaxFramesInFlight(int32 bufferCount) generates (EvsResult result);

Specifies the depth of the buffer chain the camera is asked to support. Up to this many frames may be held concurrently by the client of IEvsCamera. If this many frames have been delivered to the receiver without being returned through doneWithFrame, the stream skips frames until a buffer is returned for reuse. It is legal for this call to come at any time, even while streams are already running, in which case buffers should be added to or removed from the chain as appropriate. If no call is made to this entry point, the IEvsCamera supports at least one frame by default; more are acceptable.

If the requested bufferCount cannot be accommodated, the function returns BUFFER_NOT_AVAILABLE or another relevant error code. In this case, the system continues to operate with the previously set value.
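The accounting behind these rules can be sketched as a small counter. The class below is a hypothetical model, not part of the EVS API: `FrameBudget`, its method names other than the two mirrored calls, and the hardware limit of four buffers are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of the buffer-chain accounting described above.
class FrameBudget {
public:
    // Mirrors setMaxFramesInFlight(): a count the (assumed) hardware limit
    // cannot satisfy is rejected and the previously set value stays in effect.
    bool setMaxFramesInFlight(int32_t bufferCount) {
        if (bufferCount < 1 || bufferCount > kHardwareLimit) return false;
        mMaxInFlight = bufferCount;
        return true;
    }

    // Called whenever the camera produces a frame. Returns true if the frame
    // is handed to the client and false if it has to be skipped.
    bool tryDeliver() {
        if (mInFlight >= mMaxInFlight) {
            ++mSkipped;
            return false;
        }
        ++mInFlight;
        return true;
    }

    // Mirrors doneWithFrame(): the client hands one buffer back for reuse.
    void doneWithFrame() {
        assert(mInFlight > 0);
        --mInFlight;
    }

    int32_t maxInFlight() const { return mMaxInFlight; }
    int32_t inFlight() const { return mInFlight; }
    int32_t skipped() const { return mSkipped; }

private:
    static constexpr int32_t kHardwareLimit = 4;  // assumption for this example
    int32_t mMaxInFlight = 1;  // default: at least one frame is supported
    int32_t mInFlight = 0;
    int32_t mSkipped = 0;
};
```

Because `tryDeliver()` compares against the current limit on every frame, changing the limit while a stream is running takes effect on the next frame without any extra bookkeeping.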

startVideoStream(IEvsCameraStream receiver) generates (EvsResult result);

Requests delivery of EVS camera frames from this camera. The IEvsCameraStream begins receiving periodic calls with new image frames until stopVideoStream() is called. Frames must begin being delivered within 500 ms of the startVideoStream call and, once started, must be generated at a minimum of 10 FPS. The time required to start the video stream effectively counts against any rearview camera startup time requirement. If the stream is not started, an error code must be returned; otherwise OK is returned.

oneway doneWithFrame(BufferDesc buffer);

Returns a frame that was delivered to the IEvsCameraStream. When the client is done consuming a frame delivered to the IEvsCameraStream interface, the frame must be returned to the IEvsCamera for reuse. A small, finite number of buffers is available (possibly as few as one), and if the supply is exhausted, no further frames are delivered until a buffer is returned, potentially resulting in skipped frames (a buffer with a null handle denotes the end of a stream and does not need to be returned through this function). Returns OK on success, or an appropriate error code, potentially including INVALID_ARG or BUFFER_NOT_AVAILABLE.

stopVideoStream();

Stops the delivery of EVS camera frames. Because delivery is asynchronous, frames may continue to arrive for some time after this call returns. Each frame must be returned until the closure of the stream is signaled to the IEvsCameraStream. It is legal to call stopVideoStream on a stream that has already been stopped or was never started, in which case the call is ignored.

getExtendedInfo(int32 opaqueIdentifier) generates (int32 value);

Requests driver-specific information from the HAL implementation. The values allowed for opaqueIdentifier are driver-specific, but no value passed may crash the driver. The driver should return 0 for any unrecognized opaqueIdentifier.

setExtendedInfo(int32 opaqueIdentifier, int32 opaqueValue) generates (EvsResult result);

Sends a driver-specific value to the HAL implementation. This extension is provided only to facilitate vehicle-specific extensions, and no HAL implementation should require this call in order to function in its default state. If the driver recognizes and accepts the values, OK should be returned; otherwise INVALID_ARG or another representative error code should be returned.

struct BufferDesc {

uint32 width; // Units of pixels

uint32 height; // Units of pixels

uint32 stride; // Units of pixels

uint32 pixelSize; // Size of single pixel in bytes

uint32 format; // May contain values from android_pixel_format_t

uint32 usage; // May contain values from Gralloc.h

uint32 bufferId; // Opaque value

handle memHandle; // gralloc memory buffer handle

}

Describes an image passed through the API. The HAL driver is responsible for filling out this structure to describe the image buffer, and the HAL client should treat this structure as read-only. The fields contain enough information to allow the client to reconstruct an ANativeWindowBuffer object, as may be required to use the image with EGL through the eglCreateImageKHR() extension.

- width. The width in pixels of the presented image.

- height. The height in pixels of the presented image.

- stride. The number of pixels each row actually occupies in memory, accounting for any padding for row alignment. Expressed in pixels to match the convention adopted by gralloc for its buffer descriptions.

- pixelSize. The number of bytes occupied by each individual pixel, which enables computing the size in bytes necessary to step between rows in the image (stride in bytes = stride in pixels * pixelSize).

- format. The pixel format used by the image. The format provided must be compatible with the platform's OpenGL implementation. To pass compatibility testing, HAL_PIXEL_FORMAT_YCRCB_420_SP should be preferred for camera usage, and RGBA or BGRA should be preferred for display.

- usage. Usage flags set by the HAL implementation. HAL clients are expected to pass these through unmodified (for details, refer to the Gralloc.h related flags).

- bufferId. A unique value specified by the HAL implementation that allows a buffer to be recognized after a round trip through the HAL APIs. The value stored in this field may be chosen arbitrarily by the HAL implementation.

- memHandle. The handle of the underlying memory buffer that contains the image data. The HAL implementation might choose to store a Gralloc buffer handle here.
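The stride arithmetic from the pixelSize entry can be written out as two small helpers. This is a sketch only: `BufferLayout` is a hypothetical struct that copies four of the BufferDesc fields, and the helper names are invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical subset of BufferDesc, limited to the fields used for addressing.
struct BufferLayout {
    uint32_t width;      // pixels
    uint32_t height;     // pixels
    uint32_t stride;     // pixels per row in memory, including padding
    uint32_t pixelSize;  // bytes per pixel
};

// stride in bytes = stride in pixels * pixelSize
size_t rowStrideBytes(const BufferLayout& b) {
    return static_cast<size_t>(b.stride) * b.pixelSize;
}

// Byte offset of pixel (x, y) from the start of a single-plane packed image.
size_t pixelOffsetBytes(const BufferLayout& b, uint32_t x, uint32_t y) {
    assert(x < b.width && y < b.height);
    return static_cast<size_t>(y) * rowStrideBytes(b) +
           static_cast<size_t>(x) * b.pixelSize;
}
```

For a 640-pixel-wide RGBA image padded to a stride of 672 pixels, each row starts 672 * 4 = 2688 bytes after the previous one, even though only 2560 of those bytes carry visible pixels. The offset helper assumes a single packed plane; planar and semi-planar YUV formats need per-plane arithmetic that is not shown here.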

IEvsCameraStream

The client implements this interface to receive asynchronous video frame deliveries.

deliverFrame(BufferDesc buffer);

Receives calls from the HAL each time a video frame is ready for inspection. Buffer handles received by this method must be returned through calls to IEvsCamera::doneWithFrame(). When the video stream is stopped through a call to IEvsCamera::stopVideoStream(), this callback might continue as the pipeline drains. Each frame must still be returned; when the last frame in the stream has been delivered, a NULL bufferHandle is delivered, signifying the end of the stream, and no further frame deliveries occur. The NULL bufferHandle itself does not need to be sent back through doneWithFrame(), but all other handles must be returned.

While proprietary buffer formats are technically possible, compatibility testing requires the buffer to be in one of four supported formats: NV21 (YCrCb 4:2:0 Semi-Planar), YV12 (YCrCb 4:2:0 Planar), YUYV (YCrCb 4:2:2 Interleaved), RGBA (32 bit R:G:B:x), BGRA (32 bit B:G:R:x). The selected format must be a valid GL texture source on the platform's GLES implementation.

The app should not rely on any correspondence between the bufferId field and the memHandle in the BufferDesc structure. The bufferId values are essentially private to the HAL driver implementation, and it may use (and reuse) them as it sees fit.
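The delivery contract (return every real frame, treat a null handle as the end-of-stream marker and do not return it) can be sketched without any Android headers. `FakeBufferDesc` and `FakeStreamReceiver` are hypothetical simplifications of BufferDesc and of a client's IEvsCameraStream implementation; the callback passed to the constructor stands in for IEvsCamera::doneWithFrame().

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

// Hypothetical, reduced frame descriptor: a null memHandle marks end of stream.
struct FakeBufferDesc {
    uint32_t bufferId;
    const void* memHandle;
};

// Hypothetical client-side receiver.
class FakeStreamReceiver {
public:
    using ReturnFn = std::function<void(const FakeBufferDesc&)>;

    // doneWithFrame stands in for the call that returns a buffer to the camera.
    explicit FakeStreamReceiver(ReturnFn doneWithFrame) : mDone(std::move(doneWithFrame)) {}

    void deliverFrame(const FakeBufferDesc& buffer) {
        if (buffer.memHandle == nullptr) {
            // End-of-stream marker: record it, do not send it back.
            mStreamEnded = true;
            return;
        }
        // No real frame is expected after the end-of-stream marker.
        assert(!mStreamEnded);
        ++mFramesSeen;
        // ... the image would be consumed here ...
        mDone(buffer);  // every real frame goes back for reuse
    }

    int framesSeen() const { return mFramesSeen; }
    bool streamEnded() const { return mStreamEnded; }

private:
    ReturnFn mDone;
    int mFramesSeen = 0;
    bool mStreamEnded = false;
};
```

The receiver keys only on the handle, never on `bufferId`, which matches the guidance that the ID values are private to the driver.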

IEvsDisplay

This object represents the EVS display, controls the state of the display, and handles the actual presentation of images.

getDisplayInfo() generates (DisplayDesc info);

Returns basic information about the EVS display provided by the system (see DisplayDesc).

setDisplayState(DisplayState state) generates (EvsResult result);

Sets the display state. Clients may set the display state to express the desired state, and the HAL implementation must gracefully accept a request for any state while in any other state, although the response may be to ignore the request.

Upon initialization, the display is defined to start in the NOT_VISIBLE state, after which the client is expected to request the VISIBLE_ON_NEXT_FRAME state and begin providing video. When the display is no longer required, the client is expected to request the NOT_VISIBLE state after passing the last video frame.

It is valid for any state to be requested at any time. If the display is already visible, it should remain visible when set to VISIBLE_ON_NEXT_FRAME. The call always returns OK unless the requested state is an unrecognized enum value, in which case INVALID_ARG is returned.

getDisplayState() generates (DisplayState state);

Gets the display state. The HAL implementation should report the actual current state, which might differ from the most recently requested state. The logic responsible for changing display states should exist above the device layer, making it undesirable for the HAL implementation to change display states spontaneously.

getTargetBuffer() generates (handle bufferHandle);

Returns a handle to a frame buffer associated with the display. This buffer may be locked and written to by software and/or GL. This buffer must be returned through a call to returnTargetBufferForDisplay() even if the display is no longer visible.

While proprietary buffer formats are technically possible, compatibility testing requires the buffer to be in one of four supported formats: NV21 (YCrCb 4:2:0 Semi-Planar), YV12 (YCrCb 4:2:0 Planar), YUYV (YCrCb 4:2:2 Interleaved), RGBA (32 bit R:G:B:x), BGRA (32 bit B:G:R:x). The selected format must be a valid GL render target on the platform's GLES implementation.

On error, a buffer with a null handle is returned, but such a buffer does not need to be passed back to returnTargetBufferForDisplay.

returnTargetBufferForDisplay(handle bufferHandle) generates (EvsResult result);

Tells the display that the buffer is ready for display. Only buffers retrieved through a call to getTargetBuffer() are valid for use with this call, and the contents of the BufferDesc may not be modified by the client app. After this call, the buffer is no longer valid for use by the client. Returns OK on success, or an appropriate error code, potentially including INVALID_ARG or BUFFER_NOT_AVAILABLE.

struct DisplayDesc {

string display_id;

int32 vendor_flags; // Opaque value

}

Describes the basic properties of an EVS display that are required by an EVS implementation. The HAL is responsible for filling out this structure to describe the EVS display. The display can be a physical display or a virtual display that is overlaid on or mixed with another presentation device.

- display_id. A string that uniquely identifies the display. This could be the kernel device name of the device or a name for the device, such as reverseview. The value of this string is chosen by the HAL implementation and used opaquely by the stack above.

- vendor_flags. A method for passing specialized camera information opaquely from the driver to a custom EVS app. It is passed uninterpreted from the driver up to the EVS app, which is free to ignore it.

enum DisplayState : uint32 {

NOT_OPEN, // Display has not been “opened” yet

NOT_VISIBLE, // Display is inhibited

VISIBLE_ON_NEXT_FRAME, // Will become visible with next frame

VISIBLE, // Display is currently active

DEAD, // Display is not available. Interface should be closed

}

Describes the state of the EVS display, which can be disabled (not visible to the driver) or enabled (showing an image to the driver). Includes a transient state in which the display is not yet visible but is prepared to become visible with the delivery of the next frame of imagery through the returnTargetBufferForDisplay() call.
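The state rules for setDisplayState() and the transient VISIBLE_ON_NEXT_FRAME state can be sketched as a tiny state machine. This is a hypothetical model rather than the sample HAL: `FakeDisplay` and `Result` are invented names, and the choice to accept but ignore requests for NOT_OPEN and DEAD is an assumption of this example (the text only says that a request may be ignored).

```cpp
#include <cassert>
#include <cstdint>

enum class DisplayState : uint32_t {
    NOT_OPEN, NOT_VISIBLE, VISIBLE_ON_NEXT_FRAME, VISIBLE, DEAD
};

// Hypothetical stand-in for the EvsResult codes used by this call.
enum class Result { OK, INVALID_ARG };

// Hypothetical model of an opened display.
class FakeDisplay {
public:
    Result setDisplayState(DisplayState requested) {
        switch (requested) {
            case DisplayState::NOT_VISIBLE:
                mState = DisplayState::NOT_VISIBLE;
                return Result::OK;
            case DisplayState::VISIBLE_ON_NEXT_FRAME:
                // An already visible display stays visible.
                if (mState != DisplayState::VISIBLE) {
                    mState = DisplayState::VISIBLE_ON_NEXT_FRAME;
                }
                return Result::OK;
            case DisplayState::VISIBLE:
                mState = DisplayState::VISIBLE;
                return Result::OK;
            case DisplayState::NOT_OPEN:
            case DisplayState::DEAD:
                // Assumption: recognized values that are accepted but ignored.
                return Result::OK;
        }
        // Anything outside the enum is an unrecognized value.
        return Result::INVALID_ARG;
    }

    // A frame handed back for presentation completes the transient state.
    void returnTargetBufferForDisplay() {
        assert(mState != DisplayState::NOT_OPEN);
        if (mState == DisplayState::VISIBLE_ON_NEXT_FRAME) {
            mState = DisplayState::VISIBLE;
        }
    }

    DisplayState state() const { return mState; }

private:
    DisplayState mState = DisplayState::NOT_VISIBLE;  // state after initialization
};
```

A typical client sequence in this model is: request `VISIBLE_ON_NEXT_FRAME`, return the first rendered buffer (the state becomes `VISIBLE`), keep returning frames, and finally request `NOT_VISIBLE`.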

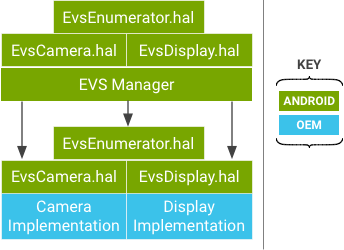

EVS Manager

The EVS Manager provides the public interface to the EVS system for collecting and presenting external camera views. Where hardware drivers allow only one active interface per resource (camera or display), the EVS Manager facilitates shared access to the cameras. A single primary EVS app is the first client of the EVS Manager and is the only client permitted to write display data (additional clients can be granted read-only access to camera images).

The EVS Manager implements the same API as the underlying HAL drivers and provides expanded service by supporting multiple concurrent clients (more than one client can open a camera through the EVS Manager and receive a video stream).

Apps see no difference between operating through the EVS Hardware HAL implementation and the EVS Manager API, except that the EVS Manager API allows concurrent camera stream access. The EVS Manager is itself the one allowed client of the EVS Hardware HAL layer and acts as a proxy for the EVS Hardware HAL.

The following sections describe only those calls that have a different (extended) behavior in the EVS Manager implementation; the remaining calls are identical to the EVS HAL descriptions.

IEvsEnumerator

openCamera(string camera_id) generates (IEvsCamera camera);

Obtains an interface object used to interact with a specific camera identified by the unique camera_id string. Returns NULL on failure. At the EVS Manager layer, as long as sufficient system resources are available, a camera that is already open may be opened again by another process, which allows the video stream to be teed to multiple consumer apps. The camera_id strings at the EVS Manager layer are the same as those at the EVS Hardware layer.

IEvsCamera

The IEvsCamera implementation provided by the EVS Manager is internally virtualized, so operations on a camera by one client do not affect other clients, which retain independent access to their cameras.

startVideoStream(IEvsCameraStream receiver) generates (EvsResult result);

Starts video streams. Clients may independently start and stop video streams on the same underlying camera. The underlying camera starts when the first client starts.

doneWithFrame(uint32 frameId, handle bufferHandle) generates (EvsResult result);

Returns a frame. Each client must return its frames when it is done with them, but is permitted to hold onto them for as long as it desires. When the number of frames held by a client reaches its configured limit, the client receives no more frames until it returns one. This frame skipping does not affect other clients, which continue to receive all frames as expected.

stopVideoStream();

Stops a video stream. Each client can stop its video stream at any time without affecting other clients. The underlying camera stream at the hardware layer is stopped when the last client of a given camera stops its stream.
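The start and stop rules for a shared camera amount to tracking which clients are streaming. The sketch below is a hypothetical model of that bookkeeping, not the EVS Manager source: `SharedCameraModel`, the integer client IDs, and the start counter are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <set>

// Hypothetical model of one hardware camera shared by several Manager clients.
class SharedCameraModel {
public:
    // The underlying camera starts when the first client starts streaming.
    void startVideoStream(int clientId) {
        mStreamingClients.insert(clientId);
        if (!mHardwareStreaming) {
            mHardwareStreaming = true;
            ++mHardwareStarts;
        }
    }

    // The underlying camera stops when the last streaming client stops.
    // Stopping a client that never started is ignored.
    void stopVideoStream(int clientId) {
        mStreamingClients.erase(clientId);
        if (mStreamingClients.empty()) mHardwareStreaming = false;
        assert(mHardwareStreaming == !mStreamingClients.empty());
    }

    bool hardwareStreaming() const { return mHardwareStreaming; }
    int hardwareStarts() const { return mHardwareStarts; }
    std::size_t streamingClients() const { return mStreamingClients.size(); }

private:
    std::set<int> mStreamingClients;
    bool mHardwareStreaming = false;
    int mHardwareStarts = 0;
};
```

With two clients streaming, stopping the first leaves the hardware stream running; only the second stop shuts it down.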

setExtendedInfo(int32 opaqueIdentifier, int32 opaqueValue) generates (EvsResult result);

Sends a driver-specific value, potentially enabling one client to affect another client. Because the EVS Manager cannot understand the implications of vendor-defined control words, they are not virtualized, and any side effects apply to all clients of a given camera. For example, if a vendor used this call to change frame rates, all clients of the affected hardware layer camera would receive frames at the new rate.

IEvsDisplay

Only one owner of the display is allowed, even at the EVS Manager level. The Manager adds no functionality and simply passes the IEvsDisplay interface directly through to the underlying HAL implementation.

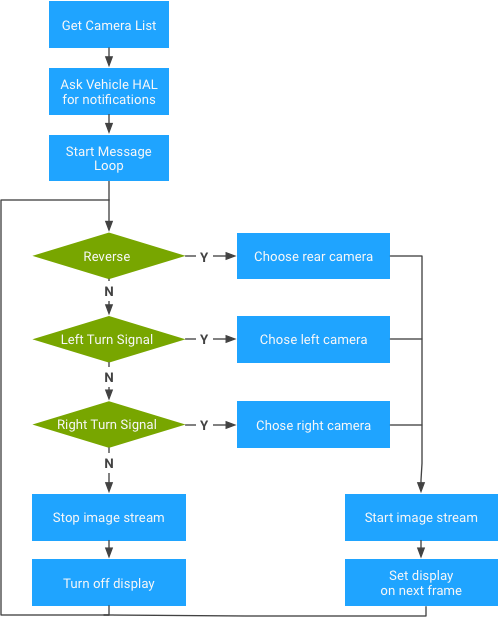

EVS app

Android includes a native C++ reference implementation of an EVS app that communicates with the EVS Manager and the Vehicle HAL to provide basic rearview camera functions. The app is expected to start very early in the system boot process and to show suitable video depending on the available cameras and the state of the car (gear and turn signal state). OEMs can modify or replace the EVS app with their own vehicle-specific logic and presentation.

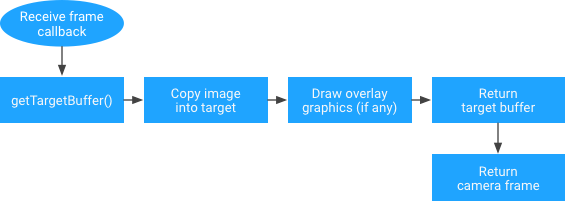

Because image data is presented to the app in a standard graphics buffer, the app is responsible for moving the image from the source buffer into the output buffer. While this introduces the cost of a data copy, it also gives the app the opportunity to render the image into the display buffer in any fashion it desires.

For example, the app may choose to move the pixel data itself, potentially with an inline scale or rotation operation. The app could also choose to use the source image as an OpenGL texture and render a complex scene to the output buffer, including virtual elements such as icons, guidelines, and animations. A more sophisticated app may also select multiple concurrent input cameras and merge them into a single output frame (for example, for use in a top-down, virtual view of the vehicle surroundings).
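The simplest of these options, moving the pixel data directly, can be sketched as a row-by-row copy that honors each buffer's stride. This is an illustrative sketch, not the reference app: `ImageView` and `copyImage` are hypothetical names, both images are assumed to be mapped into CPU-accessible memory, and both are assumed to use the same single-plane packed pixel format.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical CPU-side view of a mapped image buffer.
struct ImageView {
    uint8_t* data;       // start of the first row
    uint32_t width;      // pixels
    uint32_t height;     // pixels
    uint32_t stride;     // pixels per row in memory, including padding
    uint32_t pixelSize;  // bytes per pixel
};

// Copies the overlapping region of src into dst one row at a time. Padding at
// the end of each row is skipped because source and target strides can differ.
void copyImage(const ImageView& src, const ImageView& dst) {
    assert(src.pixelSize == dst.pixelSize);
    const uint32_t rows = std::min(src.height, dst.height);
    const std::size_t rowBytes =
        static_cast<std::size_t>(std::min(src.width, dst.width)) * src.pixelSize;
    for (uint32_t y = 0; y < rows; ++y) {
        const uint8_t* from =
            src.data + static_cast<std::size_t>(y) * src.stride * src.pixelSize;
        uint8_t* to =
            dst.data + static_cast<std::size_t>(y) * dst.stride * dst.pixelSize;
        std::memcpy(to, from, rowBytes);
    }
}
```

A single memcpy of the whole buffer would only be correct when both strides match; copying per row keeps the sketch valid for padded buffers as well.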

Use EGL/SurfaceFlinger in the EVS Display HAL

This section explains how to use EGL to render an EVS Display HAL implementation in Android 10.

An EVS HAL reference implementation uses EGL to render the camera preview on the screen and uses libgui to create the target EGL render surface. In Android 8 (and higher), libgui is classified as VNDK-private, which refers to a group of libraries that are available to VNDK libraries but that vendor processes cannot use. Because HAL implementations must reside in the vendor partition, vendors are prevented from using Surface in HAL implementations.

Building libgui for vendor processes

Using libgui is the only option for using EGL/SurfaceFlinger in EVS Display HAL implementations. The most straightforward way to make libgui available is to build it from frameworks/native/libs/gui directly by adding an extra build target to the build script. This target is exactly the same as the libgui target except for the addition of two fields:

- name

- vendor_available

cc_library_shared {

name: "libgui_vendor",

vendor_available: true,

vndk: {

enabled: false,

},

double_loadable: true,

defaults: ["libgui_bufferqueue-defaults"],

srcs: [

…

// bufferhub is not used when building libgui for vendors

target: {

vendor: {

cflags: [

"-DNO_BUFFERHUB",

"-DNO_INPUT",

],

…

Note: Vendor targets are built with the NO_INPUT macro, which removes one 32-bit word from the parcel data. Because SurfaceFlinger expects this field, which has been removed, SurfaceFlinger fails to parse the parcel. This is observed as an fcntl failure:

W Parcel : Attempt to read object from Parcel 0x78d9cffad8 at offset 428 that is not in the object list

E Parcel : fcntl(F_DUPFD_CLOEXEC) failed in Parcel::read, i is 0, fds[i] is 0, fd_count is 20, error: Unknown error 2147483647

W Parcel : Attempt to read object from Parcel 0x78d9cffad8 at offset 544 that is not in the object list

To resolve this condition:

diff --git a/libs/gui/LayerState.cpp b/libs/gui/LayerState.cpp

index 6066421fa..25cf5f0ce 100644

--- a/libs/gui/LayerState.cpp

+++ b/libs/gui/LayerState.cpp

@@ -54,6 +54,9 @@ status_t layer_state_t::write(Parcel& output) const

output.writeFloat(color.b);

#ifndef NO_INPUT

inputInfo.write(output);

+#else

+ // Write a dummy 32-bit word.

+ output.writeInt32(0);

#endif

output.write(transparentRegion);

output.writeUint32(transform);

Sample build instructions are provided below. Expect the build to produce $(ANDROID_PRODUCT_OUT)/system/lib64/libgui_vendor.so.

$ cd <your_android_source_tree_top>

$ . ./build/envsetup.

$ lunch <product_name>-<build_variant>

============================================

PLATFORM_VERSION_CODENAME=REL

PLATFORM_VERSION=10

TARGET_PRODUCT=<product_name>

TARGET_BUILD_VARIANT=<build_variant>

TARGET_BUILD_TYPE=release

TARGET_ARCH=arm64

TARGET_ARCH_VARIANT=armv8-a

TARGET_CPU_VARIANT=generic

TARGET_2ND_ARCH=arm

TARGET_2ND_ARCH_VARIANT=armv7-a-neon

TARGET_2ND_CPU_VARIANT=cortex-a9

HOST_ARCH=x86_64

HOST_2ND_ARCH=x86

HOST_OS=linux

HOST_OS_EXTRA=<host_linux_version>

HOST_CROSS_OS=windows

HOST_CROSS_ARCH=x86

HOST_CROSS_2ND_ARCH=x86_64

HOST_BUILD_TYPE=release

BUILD_ID=QT

OUT_DIR=out

============================================

$ m -j libgui_vendor

…

$ find $ANDROID_PRODUCT_OUT/system -name "libgui_vendor*"

.../out/target/product/hawk/system/lib64/libgui_vendor.so

.../out/target/product/hawk/system/lib/libgui_vendor.so

Use binder in an EVS HAL implementation

In Android 8 (and higher), the /dev/binder device node became exclusive to framework processes and is therefore inaccessible to vendor processes. Instead, vendor processes should use /dev/hwbinder and must convert any AIDL interfaces to HIDL. Those who want to continue using AIDL interfaces between vendor processes should use the /dev/vndbinder binder domain.

| IPC domain | Description |
|---|---|
| /dev/binder | IPC between framework/app processes with AIDL interfaces |
| /dev/hwbinder | IPC between framework/vendor processes with HIDL interfaces; IPC between vendor processes with HIDL interfaces |
| /dev/vndbinder | IPC between vendor/vendor processes with AIDL interfaces |

While SurfaceFlinger defines AIDL interfaces, vendor processes can use only HIDL interfaces to communicate with framework processes. Converting existing AIDL interfaces to HIDL requires a non-trivial amount of work. Fortunately, Android provides a way to select the binder driver for libbinder, the userspace library that these processes link against.

diff --git a/evs/sampleDriver/service.cpp b/evs/sampleDriver/service.cpp

index d8fb3166..5fd02935 100644

--- a/evs/sampleDriver/service.cpp

+++ b/evs/sampleDriver/service.cpp

@@ -21,6 +21,7 @@

#include <utils/Errors.h>

#include <utils/StrongPointer.h>

#include <utils/Log.h>

+#include <binder/ProcessState.h>

#include "ServiceNames.h"

#include "EvsEnumerator.h"

@@ -43,6 +44,9 @@ using namespace android;

int main() {

ALOGI("EVS Hardware Enumerator service is starting");

+ // Use /dev/binder for SurfaceFlinger

+ ProcessState::initWithDriver("/dev/binder");

+

// Start a thread to listen to video device addition events.

std::atomic<bool> running { true };

std::thread ueventHandler(EvsEnumerator::EvsUeventThread, std::ref(running));

Note: Vendor processes should call this before calling into Process or IPCThreadState, or before making any binder calls.

SELinux policies

If the device implementation is full Treble, SELinux prevents vendor processes from using /dev/binder. For example, an EVS HAL sample implementation is assigned to the hal_evs_driver domain and requires read/write permissions on the binder_device domain.

W ProcessState: Opening '/dev/binder' failed: Permission denied

F ProcessState: Binder driver could not be opened. Terminating.

F libc : Fatal signal 6 (SIGABRT), code -1 (SI_QUEUE) in tid 9145 (android.hardwar), pid 9145 (android.hardwar)

W android.hardwar: type=1400 audit(0.0:974): avc: denied { read write } for name="binder" dev="tmpfs" ino=2208 scontext=u:r:hal_evs_driver:s0 tcontext=u:object_r:binder_device:s0 tclass=chr_file permissive=0

Adding these permissions, however, causes a build failure because it violates the following neverallow rules defined in system/sepolicy/domain.te for a full-Treble device.

libsepol.report_failure: neverallow on line 631 of system/sepolicy/public/domain.te (or line 12436 of policy.conf) violated by allow hal_evs_driver binder_device:chr_file { read write };

libsepol.check_assertions: 1 neverallow failures occurred

full_treble_only(`

neverallow {

domain

-coredomain

-appdomain

-binder_in_vendor_violators

} binder_device:chr_file rw_file_perms;

')

binder_in_vendor_violators is an attribute provided to catch bugs and guide development. It can also be used to resolve the Android 10 violation described above.

diff --git a/evs/sepolicy/evs_driver.te b/evs/sepolicy/evs_driver.te

index f1f31e9fc..6ee67d88e 100644

--- a/evs/sepolicy/evs_driver.te

+++ b/evs/sepolicy/evs_driver.te

@@ -3,6 +3,9 @@ type hal_evs_driver, domain, coredomain;

hal_server_domain(hal_evs_driver, hal_evs)

hal_client_domain(hal_evs_driver, hal_evs)

+# Allow to use /dev/binder

+typeattribute hal_evs_driver binder_in_vendor_violators;

+

# allow init to launch processes in this context

type hal_evs_driver_exec, exec_type, file_type, system_file_type;

init_daemon_domain(hal_evs_driver)

Build an EVS HAL reference implementation as a vendor process

As a reference, you can apply the following changes to packages/services/Car/evs/Android.mk. Be sure to confirm that all described changes work for your implementation.

diff --git a/evs/sampleDriver/Android.mk b/evs/sampleDriver/Android.mk

index 734feea7d..0d257214d 100644

--- a/evs/sampleDriver/Android.mk

+++ b/evs/sampleDriver/Android.mk

@@ -16,7 +16,7 @@ LOCAL_SRC_FILES := \

LOCAL_SHARED_LIBRARIES := \

android.hardware.automotive.evs@1.0 \

libui \

- libgui \

+ libgui_vendor \

libEGL \

libGLESv2 \

libbase \

@@ -33,6 +33,7 @@ LOCAL_SHARED_LIBRARIES := \

LOCAL_INIT_RC := android.hardware.automotive.evs@1.0-sample.rc

LOCAL_MODULE := android.hardware.automotive.evs@1.0-sample

+LOCAL_PROPRIETARY_MODULE := true

LOCAL_MODULE_TAGS := optional

LOCAL_STRIP_MODULE := keep_symbols

@@ -40,6 +41,7 @@ LOCAL_STRIP_MODULE := keep_symbols

LOCAL_CFLAGS += -DLOG_TAG=\"EvsSampleDriver\"

LOCAL_CFLAGS += -DGL_GLEXT_PROTOTYPES -DEGL_EGLEXT_PROTOTYPES

LOCAL_CFLAGS += -Wall -Werror -Wunused -Wunreachable-code

+LOCAL_CFLAGS += -Iframeworks/native/include

# NOTE: It can be helpful, while debugging, to disable optimizations

#LOCAL_CFLAGS += -O0 -g

diff --git a/evs/sampleDriver/service.cpp b/evs/sampleDriver/service.cpp

index d8fb31669..5fd029358 100644

--- a/evs/sampleDriver/service.cpp

+++ b/evs/sampleDriver/service.cpp

@@ -21,6 +21,7 @@

#include <utils/Errors.h>

#include <utils/StrongPointer.h>

#include <utils/Log.h>

+#include <binder/ProcessState.h>

#include "ServiceNames.h"

#include "EvsEnumerator.h"

@@ -43,6 +44,9 @@ using namespace android;

int main() {

ALOGI("EVS Hardware Enumerator service is starting");

+ // Use /dev/binder for SurfaceFlinger

+ ProcessState::initWithDriver("/dev/binder");

+

// Start a thread to listen video device addition events.

std::atomic<bool> running { true };

std::thread ueventHandler(EvsEnumerator::EvsUeventThread, std::ref(running));

diff --git a/evs/sepolicy/evs_driver.te b/evs/sepolicy/evs_driver.te

index f1f31e9fc..632fc7337 100644

--- a/evs/sepolicy/evs_driver.te

+++ b/evs/sepolicy/evs_driver.te

@@ -3,6 +3,9 @@ type hal_evs_driver, domain, coredomain;

hal_server_domain(hal_evs_driver, hal_evs)

hal_client_domain(hal_evs_driver, hal_evs)

+# allow to use /dev/binder

+typeattribute hal_evs_driver binder_in_vendor_violators;

+

# allow init to launch processes in this context

type hal_evs_driver_exec, exec_type, file_type, system_file_type;

init_daemon_domain(hal_evs_driver)

@@ -22,3 +25,7 @@ allow hal_evs_driver ion_device:chr_file r_file_perms;

# Allow the driver to access kobject uevents

allow hal_evs_driver self:netlink_kobject_uevent_socket create_socket_perms_no_ioctl;

+

+# Allow the driver to use the binder device

+allow hal_evs_driver binder_device:chr_file rw_file_perms;