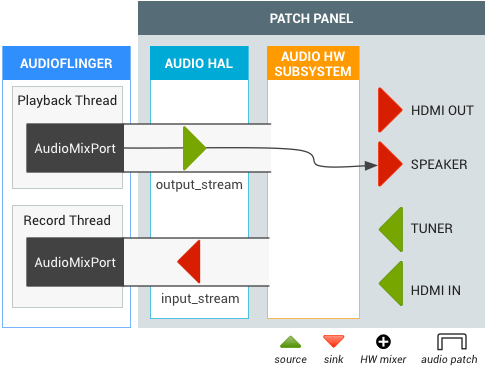

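The TV Input Framework (TIF) manager works with the audio routing API to support flexible audio path changes. When a system-on-chip (SoC) implements the TV hardware abstraction layer (HAL), each TV input (HDMI IN, tuner, etc.) provides TvInputHardwareInfo, which specifies the AudioPort information (audio type and address) for that input.

- Physical audio input/output devices have a corresponding AudioPort.

- Software audio output/input streams are represented as AudioMixPort (a subclass of AudioPort).

The TIF then uses this AudioPort information with the audio routing API.

Figure 1. TV Input Framework (TIF)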
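The following C sketch illustrates the port lookup described above under simplifying assumptions: a TV input's audio type and address (the information TvInputHardwareInfo carries) are matched against the device ports the HAL reports. The structs `tv_input_audio_info` and `demo_device_port` and the function `find_port_for_input` are hypothetical and are not TIF or HAL APIs; only the two device-type values are copied from system/audio.h.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Local copies of two device types from system/audio.h, so the sketch
 * compiles without the AOSP headers. */
#define DEMO_DEVICE_BIT_IN      0x80000000u
#define DEMO_DEVICE_IN_HDMI     (DEMO_DEVICE_BIT_IN | 0x20u)
#define DEMO_DEVICE_IN_TV_TUNER (DEMO_DEVICE_BIT_IN | 0x4000u)

/* Hypothetical stand-in for the audio fields of TvInputHardwareInfo. */
struct tv_input_audio_info {
    uint32_t audio_type;       /* device type, e.g. DEMO_DEVICE_IN_HDMI */
    const char *audio_address; /* separates several ports of the same type */
};

/* Hypothetical, simplified device port as reported by the audio HAL. */
struct demo_device_port {
    int id;
    uint32_t type;
    const char *address;
};

/* Returns the index of the port whose type AND address match the input,
 * or -1 if the HAL exposes no such port. */
int find_port_for_input(const struct demo_device_port *ports, size_t n,
                        const struct tv_input_audio_info *info) {
    for (size_t i = 0; i < n; i++) {
        if (ports[i].type == info->audio_type &&
            strcmp(ports[i].address, info->audio_address) == 0) {
            return (int)i;
        }
    }
    return -1;
}
```

For example, with two HDMI ports whose addresses are the hypothetical strings "HDMI1" and "HDMI2", an input declaring type `DEMO_DEVICE_IN_HDMI` and address "HDMI2" resolves to the second port; the type alone would be ambiguous, which is why the address is part of the match.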

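Requirements

SoCs must implement the audio HAL with support for the following audio routing API features:

| Audio ports | |
|---|---|
| Default input | AudioRecord (created with the DEFAULT input source) must acquire a virtual null input source for AUDIO_DEVICE_IN_DEFAULT on Android TV. |
| Device loopback | Requires support for an AUDIO_DEVICE_IN_LOOPBACK input that is a complete mix of all audio output from all TV outputs (11 kHz, 16-bit mono or 48 kHz, 16-bit mono). Used only for audio capture. |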
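The loopback formats in the table are uncompressed 16-bit mono PCM, so their data rate follows directly from sample rate × channels × bytes per sample: 48 kHz mono gives 48000 × 1 × 2 = 96,000 bytes per second. The C helpers below (hypothetical names, plain arithmetic only) make that calculation reusable, for example when sizing a capture buffer.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes per second of uncompressed PCM: rate * channels * bytes per sample. */
uint32_t pcm_bytes_per_second(uint32_t sample_rate_hz, uint32_t channels,
                              uint32_t bits_per_sample) {
    return sample_rate_hz * channels * (bits_per_sample / 8u);
}

/* Bytes needed to hold `ms` milliseconds of that PCM stream.
 * The product is widened to 64 bits so long durations do not overflow. */
uint32_t pcm_bytes_for_ms(uint32_t sample_rate_hz, uint32_t channels,
                          uint32_t bits_per_sample, uint32_t ms) {
    uint64_t per_second = pcm_bytes_per_second(sample_rate_hz, channels,
                                               bits_per_sample);
    return (uint32_t)(per_second * ms / 1000u);
}
```

With these numbers, 5 seconds of 48 kHz, 16-bit mono loopback capture is 480,000 bytes, and a 10 ms chunk is 960 bytes.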

TV audio devices

Android supports the following audio devices for TV audio input/output.

system/media/audio/include/system/audio.h

Note: In Android 5.1 and earlier, this file is located at: system/core/include/system/audio.h

```c
/* output devices */
AUDIO_DEVICE_OUT_AUX_DIGITAL  = 0x400,
AUDIO_DEVICE_OUT_HDMI         = AUDIO_DEVICE_OUT_AUX_DIGITAL,
/* HDMI Audio Return Channel */
AUDIO_DEVICE_OUT_HDMI_ARC     = 0x40000,
/* S/PDIF out */
AUDIO_DEVICE_OUT_SPDIF        = 0x80000,
/* input devices */
AUDIO_DEVICE_IN_AUX_DIGITAL   = AUDIO_DEVICE_BIT_IN | 0x20,
AUDIO_DEVICE_IN_HDMI          = AUDIO_DEVICE_IN_AUX_DIGITAL,
/* TV tuner input */
AUDIO_DEVICE_IN_TV_TUNER      = AUDIO_DEVICE_BIT_IN | 0x4000,
/* S/PDIF in */
AUDIO_DEVICE_IN_SPDIF         = AUDIO_DEVICE_BIT_IN | 0x10000,
AUDIO_DEVICE_IN_LOOPBACK      = AUDIO_DEVICE_BIT_IN | 0x40000,
```
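The input values above carry the AUDIO_DEVICE_BIT_IN flag (0x80000000 in the AOSP header), and that flag is what separates input from output devices: AUDIO_DEVICE_IN_LOOPBACK, for instance, has the same low bits (0x40000) as AUDIO_DEVICE_OUT_HDMI_ARC. The C sketch below copies a few of these values into local `DEMO_` macros (so it compiles without the AOSP headers) and shows the bit test; the helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Local mirrors of values from system/audio.h. */
#define DEMO_DEVICE_BIT_IN        0x80000000u
#define DEMO_DEVICE_OUT_HDMI_ARC  0x40000u
#define DEMO_DEVICE_IN_TV_TUNER   (DEMO_DEVICE_BIT_IN | 0x4000u)
#define DEMO_DEVICE_IN_LOOPBACK   (DEMO_DEVICE_BIT_IN | 0x40000u)

/* True if the device value names an input device. */
bool demo_is_input_device(uint32_t device) {
    return (device & DEMO_DEVICE_BIT_IN) != 0;
}

/* Strips the direction flag, leaving only the per-direction device bits. */
uint32_t demo_device_bits(uint32_t device) {
    return device & ~DEMO_DEVICE_BIT_IN;
}
```

Because the low bits overlap across directions, code that classifies a device should test the direction flag first rather than compare masked values alone.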

Audio HAL extensions

The audio HAL extensions for the audio routing API are defined as follows:

system/media/audio/include/system/audio.h

Note: In Android 5.1 and earlier, this file is located at: system/core/include/system/audio.h

```c
/* audio port configuration structure used to specify a particular configuration of an audio port */
struct audio_port_config {
    audio_port_handle_t      id;           /* port unique ID */
    audio_port_role_t        role;         /* sink or source */
    audio_port_type_t        type;         /* device, mix ... */
    unsigned int             config_mask;  /* e.g. AUDIO_PORT_CONFIG_ALL */
    unsigned int             sample_rate;  /* sampling rate in Hz */
    audio_channel_mask_t     channel_mask; /* channel mask if applicable */
    audio_format_t           format;       /* format if applicable */
    struct audio_gain_config gain;         /* gain to apply if applicable */
    union {
        struct audio_port_config_device_ext  device;  /* device specific info */
        struct audio_port_config_mix_ext     mix;     /* mix specific info */
        struct audio_port_config_session_ext session; /* session specific info */
    } ext;
};

struct audio_port {
    audio_port_handle_t      id;                /* port unique ID */
    audio_port_role_t        role;              /* sink or source */
    audio_port_type_t        type;              /* device, mix ... */
    unsigned int             num_sample_rates;  /* number of sampling rates in following array */
    unsigned int             sample_rates[AUDIO_PORT_MAX_SAMPLING_RATES];
    unsigned int             num_channel_masks; /* number of channel masks in following array */
    audio_channel_mask_t     channel_masks[AUDIO_PORT_MAX_CHANNEL_MASKS];
    unsigned int             num_formats;       /* number of formats in following array */
    audio_format_t           formats[AUDIO_PORT_MAX_FORMATS];
    unsigned int             num_gains;         /* number of gains in following array */
    struct audio_gain        gains[AUDIO_PORT_MAX_GAINS];
    struct audio_port_config active_config;     /* current audio port configuration */
    union {
        struct audio_port_device_ext  device;
        struct audio_port_mix_ext     mix;
        struct audio_port_session_ext session;
    } ext;
};
```
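`get_audio_port` fills the capability arrays of `struct audio_port`, and a caller then picks concrete values for a `struct audio_port_config`, using `config_mask` to say which fields are meaningful. The following C sketch shows that step with cut-down, hypothetical structs (`demo_port`, `demo_port_config`) that keep only the sample-rate fields. The flag value `0x1` is an assumption meant to mirror `AUDIO_PORT_CONFIG_SAMPLE_RATE` and should be checked against the real header.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_MAX_RATES 16

/* Assumed mirror of AUDIO_PORT_CONFIG_SAMPLE_RATE. */
#define DEMO_CONFIG_SAMPLE_RATE 0x1u

/* Cut-down struct audio_port: only the sample-rate capabilities. */
struct demo_port {
    int id;
    unsigned int num_sample_rates;
    unsigned int sample_rates[DEMO_MAX_RATES];
};

/* Cut-down struct audio_port_config. */
struct demo_port_config {
    int id;
    unsigned int config_mask;  /* which of the fields below are valid */
    unsigned int sample_rate;
};

/* Builds a config requesting `wanted_hz` if the port advertises it.
 * Returns 0 on success; returns -1 and leaves config_mask empty (so no
 * field would be applied) if the rate is not supported. */
int demo_build_rate_config(const struct demo_port *port, unsigned int wanted_hz,
                           struct demo_port_config *out) {
    out->id = port->id;
    out->config_mask = 0;
    out->sample_rate = 0;
    for (unsigned int i = 0; i < port->num_sample_rates && i < DEMO_MAX_RATES; i++) {
        if (port->sample_rates[i] == wanted_hz) {
            out->sample_rate = wanted_hz;
            out->config_mask |= DEMO_CONFIG_SAMPLE_RATE;
            return 0;
        }
    }
    return -1;
}
```

Leaving the mask empty on failure keeps the "only flagged fields are applied" rule intact, so a rejected request cannot accidentally push a zero sample rate to the port.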

hardware/libhardware/include/hardware/audio.h

```c
struct audio_hw_device {
    :
    /**
     * Routing control
     */

    /* Creates an audio patch between several source and sink ports.
     * The handle is allocated by the HAL and should be unique for this
     * audio HAL module. */
    int (*create_audio_patch)(struct audio_hw_device *dev,
                              unsigned int num_sources,
                              const struct audio_port_config *sources,
                              unsigned int num_sinks,
                              const struct audio_port_config *sinks,
                              audio_patch_handle_t *handle);

    /* Release an audio patch */
    int (*release_audio_patch)(struct audio_hw_device *dev,
                               audio_patch_handle_t handle);

    /* Fills the list of supported attributes for a given audio port.
     * As input, "port" contains the information (type, role, address etc...)
     * needed by the HAL to identify the port.
     * As output, "port" contains possible attributes (sampling rates, formats,
     * channel masks, gain controllers...) for this port.
     */
    int (*get_audio_port)(struct audio_hw_device *dev,
                          struct audio_port *port);

    /* Set audio port configuration */
    int (*set_audio_port_config)(struct audio_hw_device *dev,
                                 const struct audio_port_config *config);
```
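To make the patch contract concrete, here is a toy C model of a HAL-side patch table: creating a patch allocates a handle that is never reused within the module, and releasing it frees the slot. It is a sketch under simplifying assumptions (one source and one sink port id per patch, a fixed-size table, negative errno values on failure), and the names `demo_hw`, `demo_create_patch` and `demo_release_patch` are hypothetical. A real `create_audio_patch` receives full `audio_port_config` arrays and reprograms the audio hardware.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define DEMO_MAX_PATCHES 8

typedef int demo_patch_handle_t;  /* stand-in for audio_patch_handle_t */

struct demo_patch {
    demo_patch_handle_t handle;   /* 0 means the slot is free */
    int source_port;              /* e.g. the tuner input port id */
    int sink_port;                /* e.g. the speaker output port id */
};

struct demo_hw {
    struct demo_patch patches[DEMO_MAX_PATCHES];
    demo_patch_handle_t last_handle;  /* handles only grow, never reused */
};

void demo_hw_init(struct demo_hw *hw) {
    for (size_t i = 0; i < DEMO_MAX_PATCHES; i++) hw->patches[i].handle = 0;
    hw->last_handle = 0;
}

/* One-source, one-sink analogue of create_audio_patch. */
int demo_create_patch(struct demo_hw *hw, int source_port, int sink_port,
                      demo_patch_handle_t *handle) {
    for (size_t i = 0; i < DEMO_MAX_PATCHES; i++) {
        if (hw->patches[i].handle == 0) {
            hw->patches[i].handle = ++hw->last_handle;
            hw->patches[i].source_port = source_port;
            hw->patches[i].sink_port = sink_port;
            *handle = hw->patches[i].handle;
            return 0;
        }
    }
    return -ENOSPC;  /* table full */
}

/* Analogue of release_audio_patch: unknown handles are rejected. */
int demo_release_patch(struct demo_hw *hw, demo_patch_handle_t handle) {
    if (handle <= 0) return -EINVAL;
    for (size_t i = 0; i < DEMO_MAX_PATCHES; i++) {
        if (hw->patches[i].handle == handle) {
            hw->patches[i].handle = 0;
            return 0;
        }
    }
    return -EINVAL;
}
```

Using a monotonically increasing counter instead of the slot index keeps a stale handle from silently releasing a newer patch that happens to reuse the same slot.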

Testing DEVICE_IN_LOOPBACK

To test DEVICE_IN_LOOPBACK for TV monitoring, use the following test code. After the test runs, the captured audio is saved to /sdcard/record_loopback.raw, and you can listen to it using FFmpeg.

```xml
<uses-permission android:name="android.permission.MODIFY_AUDIO_ROUTING" />
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
```

```java
import android.content.Context;
import android.media.AudioDevicePort;
import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioMixPort;
import android.media.AudioPatch;
import android.media.AudioPort;
import android.media.AudioPortConfig;
import android.media.AudioRecord;
import android.media.MediaRecorder;
import android.util.Log;

import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.util.ArrayList;

// Members of an Activity (or other Context) in a system app: MODIFY_AUDIO_ROUTING
// is only granted to system apps, and the AudioPort/AudioPatch APIs are hidden.
static final String LOG_TAG = "LoopbackTest";  // any log tag works
static final int RECORD_SAMPLING_RATE = 48000;

AudioRecord mRecorder;

int mMinBufferSize = AudioRecord.getMinBufferSize(RECORD_SAMPLING_RATE,
        AudioFormat.CHANNEL_IN_MONO,
        AudioFormat.ENCODING_PCM_16BIT);

// Blocks for about 5 seconds; call it from a worker thread, not the UI thread.
public void doCapture() {
    mRecorder = new AudioRecord(MediaRecorder.AudioSource.DEFAULT, RECORD_SAMPLING_RATE,
            AudioFormat.CHANNEL_IN_MONO, AudioFormat.ENCODING_PCM_16BIT, mMinBufferSize * 10);

    AudioManager am = (AudioManager) getSystemService(Context.AUDIO_SERVICE);
    ArrayList<AudioPort> audioPorts = new ArrayList<AudioPort>();
    am.listAudioPorts(audioPorts);

    AudioPortConfig srcPortConfig = null;
    AudioPortConfig sinkPortConfig = null;
    for (AudioPort audioPort : audioPorts) {
        if (srcPortConfig == null
                && audioPort.role() == AudioPort.ROLE_SOURCE
                && audioPort instanceof AudioDevicePort) {
            // Patch source: the loopback input device.
            AudioDevicePort audioDevicePort = (AudioDevicePort) audioPort;
            if (audioDevicePort.type() == AudioManager.DEVICE_IN_LOOPBACK) {
                srcPortConfig = audioPort.buildConfig(RECORD_SAMPLING_RATE,
                        AudioFormat.CHANNEL_IN_DEFAULT, AudioFormat.ENCODING_DEFAULT, null);
                Log.d(LOG_TAG, "Found loopback audio source port : " + audioPort);
            }
        } else if (sinkPortConfig == null
                && audioPort.role() == AudioPort.ROLE_SINK
                && audioPort instanceof AudioMixPort) {
            // Patch sink: the mix port that feeds the recorder.
            sinkPortConfig = audioPort.buildConfig(RECORD_SAMPLING_RATE,
                    AudioFormat.CHANNEL_OUT_DEFAULT, AudioFormat.ENCODING_DEFAULT, null);
            Log.d(LOG_TAG, "Found recorder audio mix port : " + audioPort);
        }
    }

    AudioPatch[] patches = new AudioPatch[] { null };
    if (srcPortConfig != null && sinkPortConfig != null) {
        int status = am.createAudioPatch(
                patches,
                new AudioPortConfig[] { srcPortConfig },
                new AudioPortConfig[] { sinkPortConfig });
        Log.d(LOG_TAG, "Result of createAudioPatch(): " + status);
    }

    mRecorder.startRecording();
    processAudioData();
    mRecorder.stop();
    mRecorder.release();

    // Tear down the loopback patch once the capture is finished.
    if (patches[0] != null) {
        am.releaseAudioPatch(patches[0]);
    }
}

private void processAudioData() {
    byte[] data = new byte[mMinBufferSize];
    // try-with-resources closes the file even if a write fails.
    try (OutputStream rawFileStream = new BufferedOutputStream(
            new FileOutputStream(new File("/sdcard/record_loopback.raw")))) {
        long startTimeMs = System.currentTimeMillis();
        // Capture for 5 seconds.
        while (System.currentTimeMillis() - startTimeMs < 5000) {
            int nbytes = mRecorder.read(data, 0, mMinBufferSize);
            if (nbytes <= 0) {
                continue;
            }
            // Write only the bytes actually returned by this read.
            rawFileStream.write(data, 0, nbytes);
        }
    } catch (FileNotFoundException e) {
        Log.d(LOG_TAG, "Can't open file.", e);
    } catch (IOException e) {
        Log.e(LOG_TAG, "Error on writing raw file.", e);
    }
    Log.d(LOG_TAG, "Exit audio recording.");
}
```

Pull the captured audio file from /sdcard/record_loopback.raw and listen to it using FFmpeg:

```shell
adb pull /sdcard/record_loopback.raw
ffmpeg -f s16le -ar 48k -ac 1 -i record_loopback.raw record_loopback.wav
ffplay record_loopback.wav
```
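If FFmpeg is not available on the host, the raw capture can also be made playable by prepending a standard 44-byte RIFF/WAVE header. The following C sketch builds that header for the format the test records (48 kHz, signed 16-bit little-endian, mono, matching the `-f s16le -ar 48k -ac 1` flags above). `wav_header_pcm16` is a hypothetical helper, not part of any Android or FFmpeg tool, and it assumes the canonical PCM layout with no extra chunks.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static void put_le16(uint8_t *p, uint16_t v) {
    p[0] = (uint8_t)v;
    p[1] = (uint8_t)(v >> 8);
}

static void put_le32(uint8_t *p, uint32_t v) {
    p[0] = (uint8_t)v;
    p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16);
    p[3] = (uint8_t)(v >> 24);
}

/* Fills the canonical 44-byte WAV header for 16-bit PCM data of
 * `data_bytes` bytes; the raw samples are appended right after it. */
void wav_header_pcm16(uint8_t hdr[44], uint32_t sample_rate, uint16_t channels,
                      uint32_t data_bytes) {
    const uint16_t bits = 16;
    const uint16_t block_align = (uint16_t)(channels * bits / 8);
    memcpy(hdr, "RIFF", 4);
    put_le32(hdr + 4, 36u + data_bytes);           /* RIFF chunk size */
    memcpy(hdr + 8, "WAVE", 4);
    memcpy(hdr + 12, "fmt ", 4);
    put_le32(hdr + 16, 16u);                       /* fmt chunk size for PCM */
    put_le16(hdr + 20, 1u);                        /* audio format 1 = PCM */
    put_le16(hdr + 22, channels);
    put_le32(hdr + 24, sample_rate);
    put_le32(hdr + 28, sample_rate * block_align); /* byte rate */
    put_le16(hdr + 32, block_align);
    put_le16(hdr + 34, bits);
    memcpy(hdr + 36, "data", 4);
    put_le32(hdr + 40, data_bytes);                /* data chunk size */
}
```

To use it, call `wav_header_pcm16(hdr, 48000, 1, raw_size)` with the size of record_loopback.raw, write the 44 header bytes to a new file, then copy the raw bytes after them.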

Use cases

This section covers common use cases for TV audio.

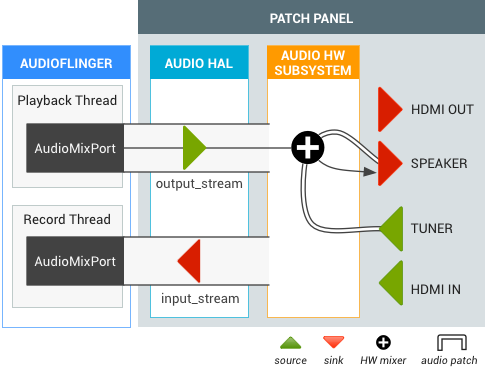

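TV tuner with speaker output

When a TV tuner becomes active, the audio routing API creates an audio patch between the tuner and the default output (for example, the speaker). The tuner output does not require decoding, but the final audio output is mixed with the software output_stream.

Figure 2. Audio patch for a TV tuner with speaker output.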
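This page does not specify how the patched tuner audio is mixed with the software output_stream. As one simple illustration, assuming both are 16-bit PCM at the same sample rate and channel layout, the following C sketch sums them sample by sample with saturation. `mix_s16_saturating` is hypothetical; a real HAL or DSP may apply gains, resampling, or a limiter instead.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Adds `stream` into `out` (which already holds the tuner audio), clamping
 * each sum to the int16 range instead of letting it wrap around. */
void mix_s16_saturating(int16_t *out, const int16_t *stream, size_t n) {
    for (size_t i = 0; i < n; i++) {
        int32_t sum = (int32_t)out[i] + (int32_t)stream[i];
        if (sum > INT16_MAX) sum = INT16_MAX;
        if (sum < INT16_MIN) sum = INT16_MIN;
        out[i] = (int16_t)sum;
    }
}
```

Summing in 32 bits and clamping avoids the loud wrap-around clicks that plain 16-bit addition produces when two loud signals overlap.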

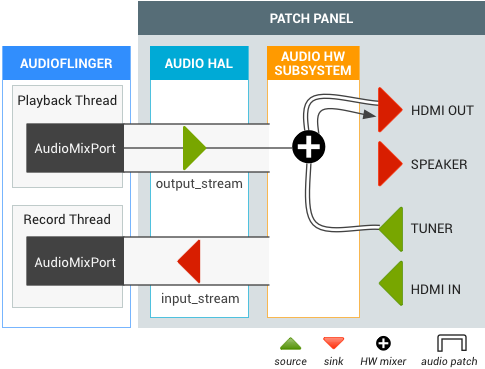

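HDMI OUT during live TV

A user is watching live TV and then switches to the HDMI audio output (Intent.ACTION_HDMI_AUDIO_PLUG). The output device of all output_streams changes to the HDMI_OUT port, and the TIF manager changes the sink port of the existing tuner audio patch to the HDMI_OUT port.

Figure 3. Audio patch for HDMI OUT from live TV.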
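The re-routing step in this use case can be modeled as a table update. In the C sketch below, which is not the TIF or audio policy implementation, software output_streams and the tuner patch are reduced to records holding port ids, and a hypothetical `route_move_sink` moves everything that targets the speaker to HDMI_OUT. The port ids are made up for illustration; on a real device the patch change goes through the audio routing API rather than a field write.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical port ids for the illustration. */
enum route_port_id {
    ROUTE_PORT_SPEAKER  = 1,
    ROUTE_PORT_HDMI_OUT = 2,
    ROUTE_PORT_TUNER_IN = 3,
};

struct route_stream { int sink_port; };                  /* a software output_stream */
struct route_patch  { int source_port; int sink_port; }; /* an audio patch */

/* Moves every stream and every patch whose sink is `from` over to `to`,
 * leaving patch sources untouched. Returns the number of routes changed. */
size_t route_move_sink(struct route_stream *streams, size_t n_streams,
                       struct route_patch *patches, size_t n_patches,
                       int from, int to) {
    size_t changed = 0;
    for (size_t i = 0; i < n_streams; i++) {
        if (streams[i].sink_port == from) {
            streams[i].sink_port = to;
            changed++;
        }
    }
    for (size_t i = 0; i < n_patches; i++) {
        if (patches[i].sink_port == from) {
            patches[i].sink_port = to;
            changed++;
        }
    }
    return changed;
}
```

Only the sink side moves: the tuner remains the patch source, which matches the description of the TIF manager changing just the sink port of the existing patch.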