Multimedia tunneling enables compressed video data to tunnel through a hardware video decoder directly to a display, without being processed by app code or Android framework code. The device-specific code below the Android stack determines which video frames to send to the display and when to send them by comparing the video frame presentation timestamps with one of the following types of internal clock:

- For on-demand video playback in Android 5 or higher, an AudioTrack clock synchronized to audio presentation timestamps passed in by the app
- For live broadcast playback in Android 11 or higher, a program clock reference (PCR) or system time clock (STC) driven by a tuner

Background

Traditional video playback on Android notifies the app when a compressed video frame has been decoded. The app then releases the decoded video frame to the display to be rendered at the same system clock time as the corresponding audio frame, retrieving historical AudioTimestamp instances to calculate the correct timing.
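The timing calculation in the traditional path can be sketched as follows. This is a minimal, framework-free illustration, not the platform's implementation: AudioClockSample is a hypothetical stand-in for the framePosition and nanoTime fields of AudioTimestamp, the class and method names are invented for this example, and the sketch assumes that the audio presentation timestamps start at 0 and that playback runs at a constant rate.

```java
/** Hypothetical stand-in for the framePosition and nanoTime fields of AudioTimestamp. */
final class AudioClockSample {
    final long framePosition; // audio frames presented so far
    final long nanoTime;      // system time (ns) at which that frame was presented

    AudioClockSample(long framePosition, long nanoTime) {
        this.framePosition = framePosition;
        this.nanoTime = nanoTime;
    }
}

/** Hypothetical helper: derives a video release time from an audio clock sample. */
final class TraditionalAvSync {
    /** Audio media position (us) extrapolated from the sample to nowNs. */
    static long audioPositionUs(AudioClockSample ts, int sampleRateHz, long nowNs) {
        long positionAtSampleUs = ts.framePosition * 1_000_000L / sampleRateHz;
        long elapsedSinceSampleUs = (nowNs - ts.nanoTime) / 1_000L;
        return positionAtSampleUs + elapsedSinceSampleUs;
    }

    /** System time (ns) at which a video frame with the given PTS should be displayed. */
    static long videoReleaseTimeNs(long videoPtsUs, AudioClockSample ts,
                                   int sampleRateHz, long nowNs) {
        long audioNowUs = audioPositionUs(ts, sampleRateHz, nowNs);
        // A frame whose PTS is ahead of the audio position is released later by that amount.
        return nowNs + (videoPtsUs - audioNowUs) * 1_000L;
    }
}
```

In a real player, the computed time would typically be passed to MediaCodec.releaseOutputBuffer(int, long). Tunneled playback removes this app-side step entirely.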

Because tunneled video playback bypasses the app code and reduces the number of processes acting on the video, it can provide more efficient video rendering depending on the OEM implementation. It can also provide more accurate video cadence and synchronization to the chosen clock (PCR, STC, or audio) by avoiding timing issues introduced by potential skew between the timing of Android requests to render video and the timing of true hardware vsyncs. However, tunneling can also reduce support for GPU effects such as blurring or rounded corners in picture-in-picture (PiP) windows, because the buffers bypass the Android graphics stack.

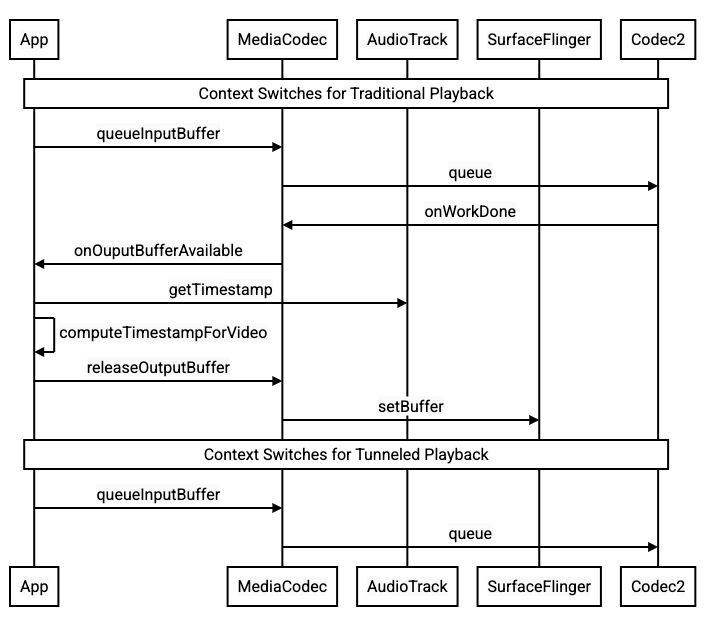

The following diagram shows how tunneling simplifies the video playback process.

Figure 1. Comparison of traditional and tunneled video playback processes

For app developers

Because most app developers integrate with a library for playback implementation, in most cases implementation requires only reconfiguring that library for tunneled playback. For low-level implementation of a tunneled video player, use the following instructions.

For on-demand video playback in Android 5 or higher:

1. Create a SurfaceView instance.
2. Create an audioSessionId instance.
3. Create AudioTrack and MediaCodec instances with the audioSessionId instance created in step 2.
4. Queue audio data to AudioTrack with the presentation timestamp for the first audio frame in the audio data.

For live broadcast playback in Android 11 or higher:

1. Create a SurfaceView instance.
2. Get an avSyncHwId instance from Tuner.
3. Create AudioTrack and MediaCodec instances with the avSyncHwId instance created in step 2.

The API call flow is shown in the following code snippets:

aab.setContentType(AudioAttributes.CONTENT_TYPE_MOVIE);

// configure for audio clock sync

aab.setFlags(AudioAttributes.FLAG_HW_AV_SYNC);

// or, for tuner clock sync (Android 11 or higher)

TunerConfiguration tunerConfig = new TunerConfiguration(0, avSyncId);

aab.setTunerConfiguration(tunerConfig);

if (codecName == null) {

return FAILURE;

}

// configure for audio clock sync

mf.setInteger(MediaFormat.KEY_AUDIO_SESSION_ID, audioSessionId);

// or, for tuner clock sync (Android 11 or higher)

mf.setInteger(MediaFormat.KEY_HARDWARE_AV_SYNC_ID, avSyncId);

Behavior of on-demand video playback

Because tunneled on-demand video playback is tied implicitly to AudioTrack playback, the behavior of tunneled video playback might depend on the behavior of audio playback.

On most devices, by default, a video frame isn't rendered until audio playback begins. However, the app might need to render a video frame before starting audio playback, for example, to show the user the current video position while seeking.

- To signal that the first queued video frame should be rendered as soon as it's decoded, set the PARAMETER_KEY_TUNNEL_PEEK parameter to 1. When compressed video frames are reordered in the queue (such as when B-frames are present), this means the first displayed video frame should always be an I-frame.
- If you don't want the first queued video frame to be rendered until audio playback begins, set this parameter to 0.
- If this parameter isn't set, the OEM determines the behavior for the device.
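The three cases above amount to a tri-state setting. The following sketch models that decision in plain Java. It is illustrative only: the class name, the method name, and the oemDefaultPeek argument (standing in for whatever the device does when the parameter is unset) are hypothetical and not part of any Android API.

```java
/** Hypothetical model of how the tunnel-peek setting resolves to a behavior. */
final class TunnelPeekPolicy {
    /**
     * Returns true if the first queued video frame is rendered as soon as it is
     * decoded, without waiting for audio playback to begin.
     *
     * @param tunnelPeek     1, 0, or null when the app never set the parameter
     * @param oemDefaultPeek assumed device-specific default used when unset
     */
    static boolean rendersBeforeAudioStarts(Integer tunnelPeek, boolean oemDefaultPeek) {
        if (tunnelPeek == null) {
            return oemDefaultPeek; // unset: the OEM decides
        }
        if (tunnelPeek == 1) {
            return true;           // render the first frame on decode
        }
        if (tunnelPeek == 0) {
            return false;          // hold the first frame until audio starts
        }
        // The text above defines only 0 and 1, so this sketch rejects other values.
        throw new IllegalArgumentException("tunnel peek must be 0 or 1");
    }
}
```

On a device, an app would normally supply the value through MediaCodec.setParameters with a Bundle keyed by MediaCodec.PARAMETER_KEY_TUNNEL_PEEK, for example before seeking while audio is paused.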

When audio data isn't provided to AudioTrack and the buffers are empty (audio underrun), video playback stalls until more audio data is written because the audio clock is no longer advancing.

During playback, discontinuities the app can't correct for might appear in audio presentation timestamps. When this happens, the OEM corrects negative gaps by stalling the current video frame, and positive gaps by either dropping video frames or inserting silent audio frames (depending on the OEM implementation). The AudioTimestamp frame position doesn't increase for inserted silent audio frames.
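The gap handling described above can be sketched as a small classifier. This is a hedged illustration rather than any OEM's actual logic: the class, the enum, the toleranceUs threshold, and the oemInsertsSilence switch are all assumptions introduced for the example.

```java
/** Hypothetical classifier for discontinuities in audio presentation timestamps. */
final class PtsGapPolicy {
    enum Action { NONE, STALL_VIDEO, DROP_VIDEO_FRAMES, INSERT_SILENCE }

    /** PTS (us) expected for the block that follows a block of framesInBlock frames. */
    static long expectedNextPtsUs(long blockPtsUs, long framesInBlock, int sampleRateHz) {
        return blockPtsUs + framesInBlock * 1_000_000L / sampleRateHz;
    }

    /**
     * Chooses a correction for the difference between the expected and actual PTS.
     * toleranceUs and oemInsertsSilence are assumed, implementation-specific knobs.
     */
    static Action classify(long expectedPtsUs, long actualPtsUs,
                           long toleranceUs, boolean oemInsertsSilence) {
        long gapUs = actualPtsUs - expectedPtsUs;
        if (Math.abs(gapUs) <= toleranceUs) {
            return Action.NONE;            // timestamps are continuous enough
        }
        if (gapUs < 0) {
            return Action.STALL_VIDEO;     // negative gap: hold the current video frame
        }
        // Positive gap: the correction depends on the OEM implementation.
        return oemInsertsSilence ? Action.INSERT_SILENCE : Action.DROP_VIDEO_FRAMES;
    }
}
```

For example, a 480-frame block at 48 kHz that starts at PTS 0 is expected to be followed by a block at 10,000 us. A following block stamped 50,000 us is a positive gap, and one stamped 5,000 us is a negative gap.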

For device manufacturers

Configuration

OEMs should create a separate video decoder to support tunneled video playback. This decoder should advertise that it's capable of tunneled playback in the media_codecs.xml file:

<Feature name="tunneled-playback" required="true"/>

When a tunneled MediaCodec instance is configured with an audio session ID, it queries AudioFlinger for this HW_AV_SYNC ID:

if (entry.getKey().equals(MediaFormat.KEY_AUDIO_SESSION_ID)) {

int sessionId = 0;

try {

sessionId = (Integer)entry.getValue();

}

catch (Exception e) {

throw new IllegalArgumentException("Wrong Session ID Parameter!");

}

keys[i] = "audio-hw-sync";

values[i] = AudioSystem.getAudioHwSyncForSession(sessionId);

}

During this query, AudioFlinger retrieves the HW_AV_SYNC ID from the primary audio device and internally associates it with the audio session ID:

audio_hw_device_t *dev = mPrimaryHardwareDev->hwDevice();

char *reply = dev->get_parameters(dev, AUDIO_PARAMETER_HW_AV_SYNC);

AudioParameter param = AudioParameter(String8(reply));

int hwAVSyncId;

param.getInt(String8(AUDIO_PARAMETER_HW_AV_SYNC), hwAVSyncId);
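The snippet above relies on the HAL returning its parameters as a key-value string. The following Java sketch shows one way such a reply could be parsed defensively. It is an illustration only: the class and method names are hypothetical, the `key=value;key=value` layout reflects the usual audio HAL parameter convention, and the literal key `hw_av_sync` used in the usage note is an assumption about the value of AUDIO_PARAMETER_HW_AV_SYNC.

```java
import java.util.OptionalInt;

/** Hypothetical parser for "key=value;key=value" replies from an audio HAL. */
final class HalKeyValueReply {
    /** Returns the integer stored under key, or empty if it is missing or malformed. */
    static OptionalInt getInt(String reply, String key) {
        if (reply == null) {
            return OptionalInt.empty();
        }
        for (String pair : reply.split(";")) {
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                continue; // skip empty or malformed entries
            }
            if (!pair.substring(0, eq).trim().equals(key)) {
                continue;
            }
            try {
                return OptionalInt.of(Integer.parseInt(pair.substring(eq + 1).trim()));
            } catch (NumberFormatException e) {
                return OptionalInt.empty(); // the value is not an integer
            }
        }
        return OptionalInt.empty();
    }
}
```

With a reply of `routing=2;hw_av_sync=7`, `HalKeyValueReply.getInt(reply, "hw_av_sync")` yields 7. An empty result signals that the device did not report a sync ID, a case that callers should handle rather than using an uninitialized value.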

If an AudioTrack instance has already been created, the HW_AV_SYNC ID is passed to the output stream with the same audio session ID. If it hasn't been created yet, then the HW_AV_SYNC ID is passed to the output stream during AudioTrack creation. This is done by the playback thread:

mOutput->stream->common.set_parameters(&mOutput->stream->common, AUDIO_PARAMETER_STREAM_HW_AV_SYNC, hwAVSyncId);

The HW_AV_SYNC ID, whether it corresponds to an audio output stream or a Tuner configuration, is passed into the OMX or Codec2 component so that the OEM code can associate the codec with the corresponding audio output stream or the tuner stream.

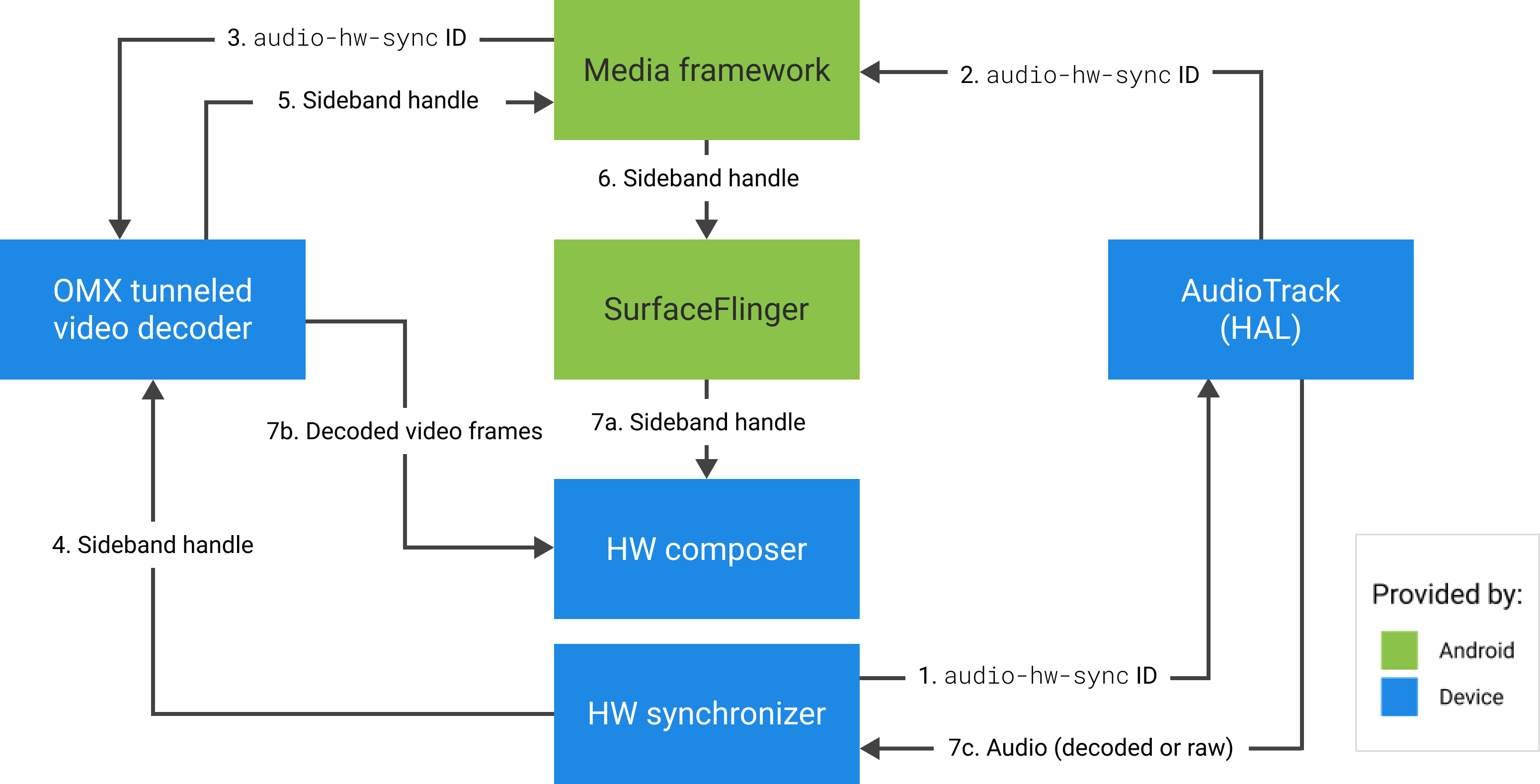

During component configuration, the OMX or Codec2 component should return a sideband handle that can be used to associate the codec with a Hardware Composer (HWC) layer. When the app associates a surface with MediaCodec, this sideband handle is passed down to HWC through SurfaceFlinger, which configures the layer as a sideband layer:

err = native_window_set_sideband_stream(nativeWindow.get(), sidebandHandle);

if (err != OK) {

ALOGE("native_window_set_sideband_stream(%p) failed! (err %d).", sidebandHandle, err);

return err;

}

HWC is responsible for receiving new image buffers from the codec output at the appropriate time, synchronized to either the associated audio output stream or the tuner program clock reference (PCR), compositing the buffers with the current contents of other layers, and displaying the resulting image. This happens independently of the normal prepare and set cycle. The prepare and set calls happen only when other layers change, or when properties of the sideband layer (such as position or size) change.
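The timing decision described above can be illustrated with a small, framework-free model. This is only a sketch under stated assumptions: the class name, the three-way HOLD, RENDER, and DROP outcome, and the half-vsync and one-vsync thresholds are hypothetical. Real HWC, kernel, or driver synchronizers are device-specific.

```java
/** Hypothetical model of choosing what to do with the next decoded frame. */
final class TunnelFrameScheduler {
    enum Decision { HOLD, RENDER, DROP }

    /**
     * Compares a frame's presentation timestamp with the sync clock.
     *
     * @param framePtsUs    presentation timestamp of the next decoded frame
     * @param clockUs       current value of the chosen clock (audio, PCR, or STC)
     * @param vsyncPeriodUs display refresh period; thresholds below are assumptions
     */
    static Decision decide(long framePtsUs, long clockUs, long vsyncPeriodUs) {
        long earlyByUs = framePtsUs - clockUs;
        if (earlyByUs > vsyncPeriodUs / 2) {
            return Decision.HOLD;   // too early: keep showing the current frame
        }
        if (earlyByUs < -vsyncPeriodUs) {
            return Decision.DROP;   // more than one refresh late: skip it
        }
        return Decision.RENDER;     // close enough to the clock: show at next vsync
    }
}
```

Because the decision depends only on the clock value, a clock that stops advancing (for example, during an audio underrun) keeps producing HOLD for the next frame, which is consistent with video stalling until the clock moves again.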

OMX

A tunneled decoder component should support the following:

- Setting the OMX.google.android.index.configureVideoTunnelMode extended parameter, which uses the ConfigureVideoTunnelModeParams structure to pass in the HW_AV_SYNC ID associated with the audio output device.
- Configuring the OMX_IndexConfigAndroidTunnelPeek parameter that tells the codec to render or to not render the first decoded video frame, regardless of whether audio playback has started.
- Sending the OMX_EventOnFirstTunnelFrameReady event when the first tunneled video frame has been decoded and is ready to be rendered.

The AOSP implementation configures tunnel mode in ACodec through OMXNodeInstance as shown in the following code snippet:

OMX_INDEXTYPE index;

OMX_STRING name = const_cast<OMX_STRING>(

"OMX.google.android.index.configureVideoTunnelMode");

OMX_ERRORTYPE err = OMX_GetExtensionIndex(mHandle, name, &index);

ConfigureVideoTunnelModeParams tunnelParams;

InitOMXParams(&tunnelParams);

tunnelParams.nPortIndex = portIndex;

tunnelParams.bTunneled = tunneled;

tunnelParams.nAudioHwSync = audioHwSync;

err = OMX_SetParameter(mHandle, index, &tunnelParams);

err = OMX_GetParameter(mHandle, index, &tunnelParams);

sidebandHandle = (native_handle_t*)tunnelParams.pSidebandWindow;

If the component supports this configuration, it should allocate a sideband handle to this codec and pass it back through the pSidebandWindow member so that the HWC can identify the associated codec. If the component doesn't support this configuration, it should set bTunneled to OMX_FALSE.

Codec2

In Android 11 or higher, Codec2 supports tunneled playback. The decoder component should support the following:

- Configuring C2PortTunneledModeTuning, which configures tunnel mode and passes in the HW_AV_SYNC retrieved from either the audio output device or the tuner configuration.
- Querying C2_PARAMKEY_OUTPUT_TUNNEL_HANDLE, to allocate and retrieve the sideband handle for HWC.
- Handling C2_PARAMKEY_TUNNEL_HOLD_RENDER when attached to a C2Work, which instructs the codec to decode and signal work completion, but not to render the output buffer until either 1) the codec is later instructed to render it or 2) audio playback begins.
- Handling C2_PARAMKEY_TUNNEL_START_RENDER, which instructs the codec to immediately render the frame that was marked with C2_PARAMKEY_TUNNEL_HOLD_RENDER, even if audio playback hasn't started.
- Leaving debug.stagefright.ccodec_delayed_params unconfigured (recommended). If you do configure it, set it to false.

The AOSP implementation configures tunnel mode in CCodec through C2PortTunneledModeTuning, as shown in the following code snippet:

if (msg->findInt32("audio-hw-sync", &tunneledPlayback->m.syncId[0])) {

tunneledPlayback->m.syncType =

C2PortTunneledModeTuning::Struct::sync_type_t::AUDIO_HW_SYNC;

} else if (msg->findInt32("hw-av-sync-id", &tunneledPlayback->m.syncId[0])) {

tunneledPlayback->m.syncType =

C2PortTunneledModeTuning::Struct::sync_type_t::HW_AV_SYNC;

} else {

tunneledPlayback->m.syncType =

C2PortTunneledModeTuning::Struct::sync_type_t::REALTIME;

tunneledPlayback->setFlexCount(0);

}

c2_status_t c2err = comp->config({ tunneledPlayback.get() }, C2_MAY_BLOCK,

failures);

std::vector<std::unique_ptr<C2Param>> params;

c2err = comp->query({}, {C2PortTunnelHandleTuning::output::PARAM_TYPE},

C2_DONT_BLOCK, &params);

if (c2err == C2_OK && params.size() == 1u) {

C2PortTunnelHandleTuning::output *videoTunnelSideband =

C2PortTunnelHandleTuning::output::From(params[0].get());

return OK;

}

If the component supports this configuration, it should allocate a sideband handle to this codec and pass it back through C2PortTunnelHandleTuning so that the HWC can identify the associated codec.

Audio HAL

For on-demand video playback, the Audio HAL receives the audio presentation timestamps inline with the audio data in big-endian format inside a header found at the beginning of each block of audio data the app writes:

struct TunnelModeSyncHeader {

// The 32-bit data to identify the sync header (0x55550002)

int32 syncWord;

// The size of the audio data following the sync header before the next sync

// header might be found.

int32 sizeInBytes;

// The presentation timestamp of the first audio sample following the sync

// header.

int64 presentationTimestamp;

// The number of bytes to skip after the beginning of the sync header to find the

// first audio sample (20 bytes for compressed audio, or larger for PCM, aligned

// to the channel count and sample size).

int32 offset;

}

For HWC to render video frames in sync with the corresponding audio frames, the Audio HAL should parse the sync header and use the presentation timestamp to resynchronize the playback clock with audio rendering. To resynchronize when compressed audio is being played, the Audio HAL might need to parse metadata inside the compressed audio data to determine its playback duration.
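To make the header layout concrete, the following sketch serializes and parses the fields of TunnelModeSyncHeader in big-endian order using java.nio.ByteBuffer. It is an illustration under assumptions, not the platform's code: the TunnelSyncHeader class and its methods are hypothetical, and the 20-byte size is simply the sum of the four fields shown in the structure (4 + 4 + 8 + 4 bytes).

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical Java mirror of the TunnelModeSyncHeader layout described above. */
final class TunnelSyncHeader {
    static final int SYNC_WORD = 0x55550002;
    static final int HEADER_SIZE_BYTES = 20; // 4 + 4 + 8 + 4

    final int sizeInBytes;            // audio bytes that follow this header
    final long presentationTimestamp; // PTS of the first audio sample after the header
    final int offset;                 // bytes from the header start to the first sample

    TunnelSyncHeader(int sizeInBytes, long presentationTimestamp, int offset) {
        this.sizeInBytes = sizeInBytes;
        this.presentationTimestamp = presentationTimestamp;
        this.offset = offset;
    }

    /** Serializes the header in big-endian byte order. */
    byte[] toBytes() {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_SIZE_BYTES).order(ByteOrder.BIG_ENDIAN);
        buf.putInt(SYNC_WORD).putInt(sizeInBytes).putLong(presentationTimestamp).putInt(offset);
        return buf.array();
    }

    /** Parses a header from the start of an audio block, validating the sync word. */
    static TunnelSyncHeader parse(byte[] block) {
        if (block.length < HEADER_SIZE_BYTES) {
            throw new IllegalArgumentException("block is shorter than the sync header");
        }
        ByteBuffer buf = ByteBuffer.wrap(block).order(ByteOrder.BIG_ENDIAN);
        if (buf.getInt() != SYNC_WORD) {
            throw new IllegalArgumentException("sync word not found");
        }
        int size = buf.getInt();
        long pts = buf.getLong();
        int offset = buf.getInt();
        return new TunnelSyncHeader(size, pts, offset);
    }
}
```

An Audio HAL would perform the equivalent of parse() in native code for each block it receives, then use presentationTimestamp to resynchronize its playback clock and offset to locate the first audio sample.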

Pause support

Android 5 or lower doesn't include pause support. You can pause tunneled playback only by A/V starvation, but if the internal buffer for video is large (for example, there's one second of data in the OMX component), it makes pause look nonresponsive.

In Android 5.1 or higher, AudioFlinger supports pause and resume for direct (tunneled) audio outputs. If the HAL implements pause and resume, track pause and resume are forwarded to the HAL.

The pause, flush, resume call sequence is respected by executing the HAL calls in the playback thread (same as offload).

Implementation suggestions

Audio HAL

In Android 11 or higher, the HW sync ID from PCR or STC can be used for A/V sync, so video-only streams are supported.

For Android 10 or lower, devices supporting tunneled video playback should have at least one audio output stream profile with the FLAG_HW_AV_SYNC and AUDIO_OUTPUT_FLAG_DIRECT flags in its audio_policy.conf file. These flags are used to set the system clock from the audio clock.

OMX

Device manufacturers should have a separate OMX component for tunneled video playback (manufacturers can have additional OMX components for other types of audio and video playback, such as secure playback). The tunneled component should:

- Specify 0 buffers (nBufferCountMin, nBufferCountActual) on its output port.
- Implement the OMX.google.android.index.prepareForAdaptivePlayback setParameter extension.
- Specify its capabilities in the media_codecs.xml file and declare the tunneled playback feature. It should also clarify any limitations on frame size, alignment, or bitrate. An example is shown below:

<MediaCodec name="OMX.OEM_NAME.VIDEO.DECODER.AVC.tunneled" type="video/avc" >

<Feature name="adaptive-playback" />

<Feature name="tunneled-playback" required="true" />

<Limit name="size" min="32x32" max="3840x2160" />

<Limit name="alignment" value="2x2" />

<Limit name="bitrate" range="1-20000000" />

...

</MediaCodec>

If the same OMX component is used to support tunneled and nontunneled decoding, it should leave the tunneled playback feature as non-required. Both tunneled and nontunneled decoders then have the same capability limitations. An example is shown below:

<MediaCodec name="OMX.OEM_NAME.VIDEO.DECODER.AVC" type="video/avc" >

<Feature name="adaptive-playback" />

<Feature name="tunneled-playback" />

<Limit name="size" min="32x32" max="3840x2160" />

<Limit name="alignment" value="2x2" />

<Limit name="bitrate" range="1-20000000" />

...

</MediaCodec>
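The difference between the two declarations (a required versus a non-required tunneled-playback feature) can be checked mechanically. The sketch below parses a single MediaCodec element with the standard Java XML parser and reports how the feature is declared. It is a hedged example: the TunneledFeatureCheck class and its Support enum are hypothetical, and the input is expected to be a complete, well-formed fragment without elided content.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical checker for the tunneled-playback declaration of a codec entry. */
final class TunneledFeatureCheck {
    enum Support { NONE, OPTIONAL, REQUIRED }

    /** Reports how a MediaCodec XML fragment declares the tunneled-playback feature. */
    static Support tunneledSupport(String mediaCodecXml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(
                    new ByteArrayInputStream(mediaCodecXml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("not a well-formed XML fragment", e);
        }
        NodeList features = doc.getElementsByTagName("Feature");
        for (int i = 0; i < features.getLength(); i++) {
            Element feature = (Element) features.item(i);
            if ("tunneled-playback".equals(feature.getAttribute("name"))) {
                // A missing "required" attribute reads as an empty string, meaning optional.
                return "true".equals(feature.getAttribute("required"))
                        ? Support.REQUIRED : Support.OPTIONAL;
            }
        }
        return Support.NONE;
    }
}
```

A dedicated tunneled decoder entry would be reported as REQUIRED, and a shared tunneled and nontunneled entry as OPTIONAL.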

Hardware Composer (HWC)

When there's a tunneled layer (a layer with HWC_SIDEBAND compositionType) on a display, the layer’s sidebandStream is the sideband handle allocated by the OMX video component.

The HWC synchronizes decoded video frames (from the tunneled OMX component) to the associated audio track (with the audio-hw-sync ID). When a new video frame becomes current, the HWC composites it with the current contents of all layers received during the last prepare or set call, and displays the resulting image. The prepare or set calls happen only when other layers change, or when properties of the sideband layer (such as position or size) change.

The following figure represents the HWC working with the hardware (or kernel or driver) synchronizer, to combine video frames (7b) with the latest composition (7a) for display at the correct time, based on audio (7c).

Figure 2. HWC hardware (or kernel or driver) synchronizer