The CTS test results are placed in the file:

CTS_ROOT/android-cts/results/start_time.zip

If you built CTS yourself, CTS_ROOT resembles out/host/linux-x86/cts but differs by platform. If you downloaded the prebuilt official CTS from this site, CTS_ROOT is the path where you uncompressed it.

Inside the zip, the test_result.xml file contains the actual results.
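As a minimal sketch of reading the results programmatically, the following Python function opens a results zip and parses the test_result.xml file inside it. The function name load_result_root is hypothetical, and the sketch assumes the XML file may sit either at the top level of the archive or inside a subdirectory.

```python
import zipfile
import xml.etree.ElementTree as ET


def load_result_root(zip_source):
    """Return the root XML element of test_result.xml inside a results zip.

    zip_source may be a filesystem path or a binary file-like object.
    """
    with zipfile.ZipFile(zip_source) as archive:
        # Assumption: the file may be nested in a directory, so match on
        # the base name rather than on a fixed path within the archive.
        names = [n for n in archive.namelist()
                 if n.rsplit("/", 1)[-1] == "test_result.xml"]
        if not names:
            raise FileNotFoundError("test_result.xml not found in archive")
        with archive.open(names[0]) as handle:
            return ET.parse(handle).getroot()
```

Calling load_result_root with the path to CTS_ROOT/android-cts/results/start_time.zip (with start_time replaced by the actual session timestamp) returns an element that can be searched with the standard ElementTree methods.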

Display Android 10 and later results

A test_result.html file exists within the zip archive. You can open it directly in any HTML5-compatible web browser.

Display Pre-Android 10 results

Open the test_result.xml file in any HTML5-compatible web browser to view the test results.

If this file displays a blank page in the Chrome browser, change your browser configuration to enable the --allow-file-access-from-files command line flag.
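One way to apply the flag is to start Chrome from a script. The sketch below only builds the argument list. The helper name chrome_command is hypothetical, and the default binary name google-chrome is an assumption that varies by operating system and installation.

```python
from pathlib import Path


def chrome_command(xml_path, chrome_binary="google-chrome"):
    """Build an argument list that opens xml_path with local file access enabled.

    chrome_binary is an assumption; adjust it to the Chrome executable
    name or full path on your machine.
    """
    # Convert the local path to an absolute file:// URL for the browser.
    file_url = Path(xml_path).resolve().as_uri()
    return [chrome_binary, "--allow-file-access-from-files", file_url]
```

The returned list can be passed to subprocess.run to launch the browser. Chrome typically needs to be fully closed first so that the flag takes effect on a fresh browser process.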

Read the test results

The details of the test results depend on which version of CTS you are using:

- CTS v1 for Android 6.0 and earlier

- CTS v2 for Android 7.0 and later

Device information

In CTS v1 and earlier, select Device Information (link above Test Summary) to view details about the device, firmware (make, model, firmware build, platform), and device hardware (screen resolution, keypad, screen type). CTS v2 does not display device information.

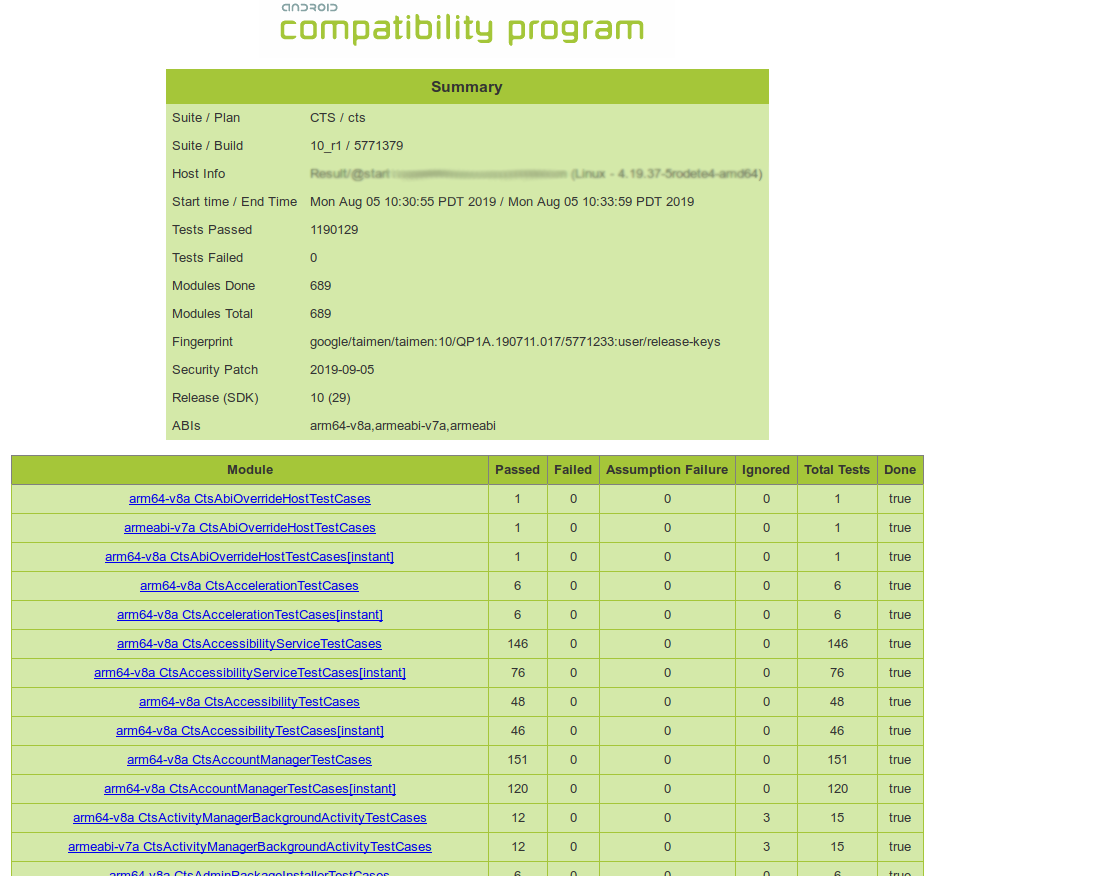

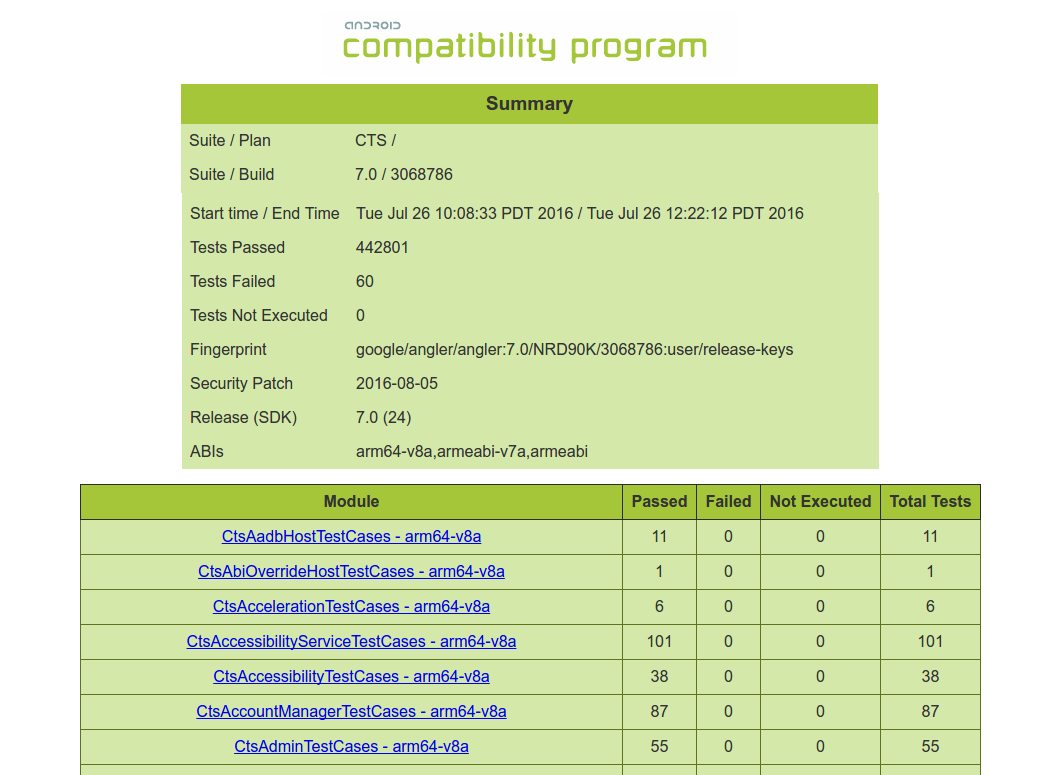

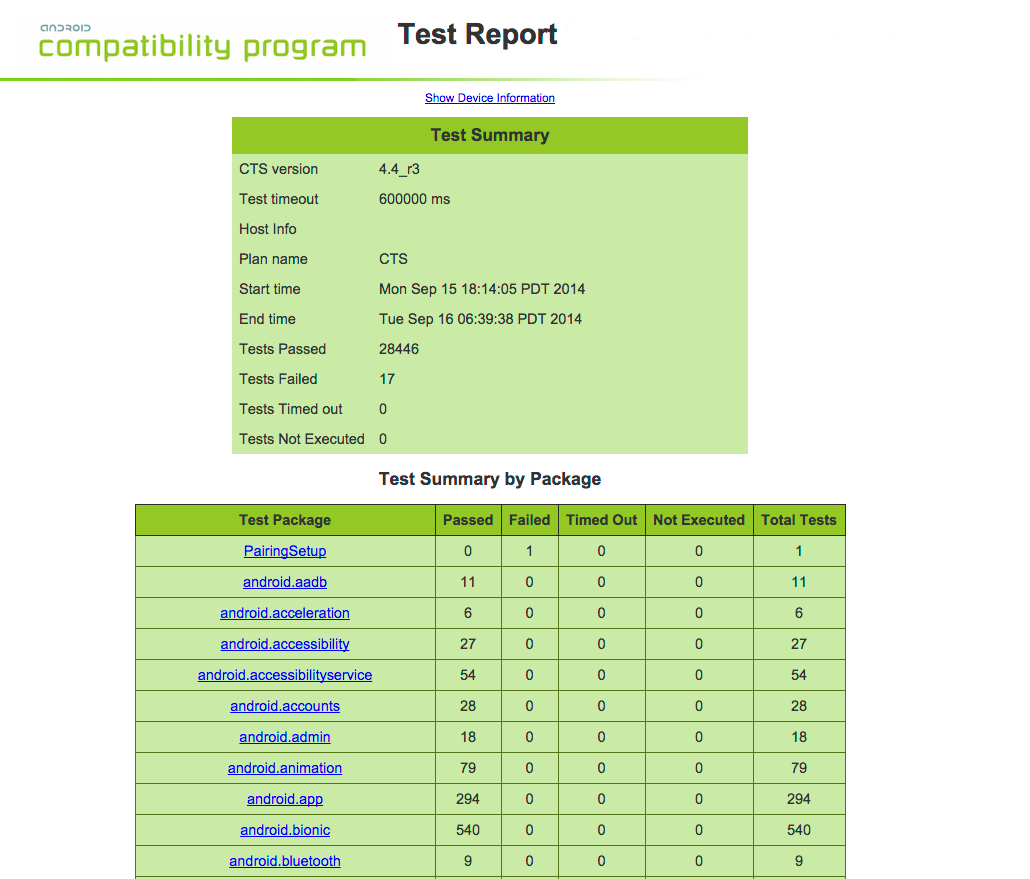

Test summary

The Test Summary section provides executed test plan details, such as the CTS plan name and execution start and end times. It also presents an aggregate summary of the number of tests that passed, failed, timed out, or could not be executed.

Figure 1: Android 10 CTS sample test summary

Figure 2: CTS v2 sample test summary

Figure 3: CTS v1 sample test summary

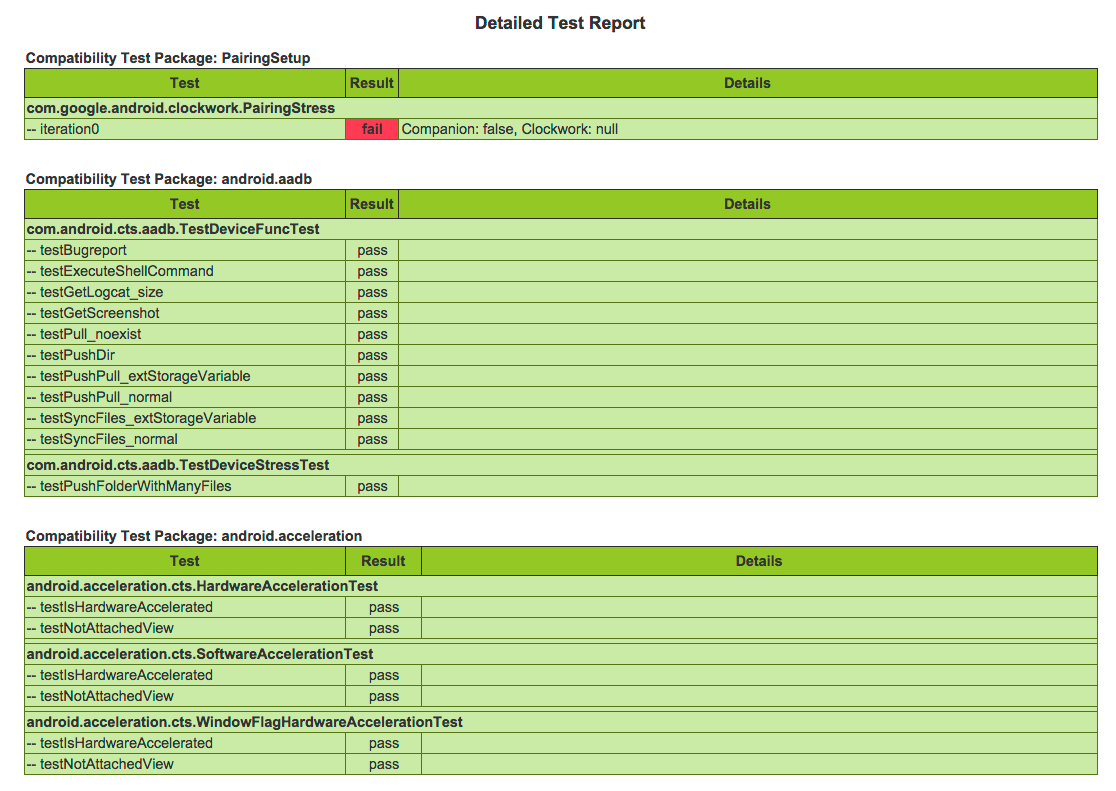

Test report

The next section, the CTS test report, provides a summary of tests passed per package.

This is followed by details of the actual tests that were executed. The report lists the test package, test suite, test case, and the executed tests. It shows the result of each test execution: pass, fail, timed out, or not executed. If a test fails, details are provided to help diagnose the cause.

The stack trace of the failure is available in the XML file but is omitted from the report for brevity. To see the details of a test failure, open the XML file in a text editor, search for the [Test] tag corresponding to the failed test, and look within it for the [StackTrace] tag.
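The lookup described above can also be scripted. The following sketch collects the stack trace for every failed test in the XML. It assumes the tags are named Test and StackTrace as referenced above, and that each Test element carries result and name attributes with the value "fail" marking a failure. Those attribute names and values are assumptions and may differ between CTS versions.

```python
import xml.etree.ElementTree as ET


def failed_tests_with_traces(xml_text):
    """Map each failed test name to its stack trace text ("" if none is present)."""
    root = ET.fromstring(xml_text)
    traces = {}
    for test in root.iter("Test"):
        # Assumption: failures are marked with result="fail".
        if test.get("result") != "fail":
            continue
        # Look anywhere below the Test element for a StackTrace element.
        trace = test.find(".//StackTrace")
        if trace is None:
            traces[test.get("name")] = ""
        else:
            traces[test.get("name")] = (trace.text or "").strip()
    return traces
```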

Figure 4: CTS v2 sample test report

Figure 5: CTS v1 sample test report

Review test_result.xml for incomplete test modules

To determine the number of incomplete modules in a given test session, run the 'list results' command. The counts of Modules Completed and Total Modules are listed for each previous session. To determine which modules are complete and which are incomplete, open the test_result.xml file and read the value of the "done" attribute for each module in the result report. Modules with done = "false" have not run to completion.
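The check of the "done" attribute can be sketched in a few lines of Python. The "done" attribute comes from the description above. The element name Module and its name attribute are assumptions about the layout of test_result.xml.

```python
import xml.etree.ElementTree as ET


def incomplete_modules(xml_text):
    """Return the names of modules whose done attribute is "false"."""
    root = ET.fromstring(xml_text)
    return [
        module.get("name")
        for module in root.iter("Module")  # assumed element name
        if module.get("done") == "false"
    ]
```

An empty list means that every module in the file reported done = "true" or carried no "done" attribute at all, so the output is best read alongside the counts from 'list results'.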

Triage test failures

Use the following suggestions to triage test failures.

- If a test is failing due to incorrect preconditions, verify that your CTS environment is set up correctly. This includes the physical environment, desktop machine setup, and Android device setup.

- If a test appears excessively flaky, check for device stability, test setup, or environment problems.

- If the test still fails, retry it in isolation.

- Check for external factors causing test failures, such as:

  - Environmental setup. For example, a misconfigured desktop machine might cause test failures on all devices under test (DUTs), including reference devices.

  - External dependencies. For example, if a test fails on all devices at multiple sites starting at a specific point in time, a bad URL might be at fault.

- If the DUT does not include the security patch, the corresponding security test failure is expected.

- Validate and analyze the differences between passing and failing devices.

- Analyze the assertion, log, bug report, and CTS source. For a HostTest, the assertion and log can be very generic, so it helps to also check and attach the device logcat.

- Submit a test improvement patch to help reduce test failures.
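When comparing a failing device against a passing or reference device, it can help to list the tests that fail only on the DUT. The sketch below does this from two test_result.xml documents. The function names are hypothetical, and the Module, TestCase, and Test element names and the result and name attributes are assumptions about the XML layout.

```python
import xml.etree.ElementTree as ET


def failed_test_ids(xml_text):
    """Return a set of (module, test case, test) names for failed tests."""
    root = ET.fromstring(xml_text)
    failed = set()
    for module in root.iter("Module"):
        for case in module.iter("TestCase"):
            for test in case.iter("Test"):
                # Assumption: failures are marked with result="fail".
                if test.get("result") == "fail":
                    failed.add((
                        module.get("name", ""),
                        case.get("name", ""),
                        test.get("name", ""),
                    ))
    return failed


def failures_unique_to_dut(dut_xml, reference_xml):
    """List tests that fail on the DUT but not on the reference device."""
    return sorted(failed_test_ids(dut_xml) - failed_test_ids(reference_xml))
```

Tests that fail on both devices are excluded from the output, which points toward an environmental or external cause rather than a device-specific one.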

Save partial results

Tradefed doesn't save partial test results when the test invocation fails.

When Tradefed doesn't generate any test results, it implies that a serious issue occurred during the test run, which makes the test results untrustworthy. Partial results are deemed unhelpful because they don't provide value when investigating device issues.