A number of Camera ITS changes are included in the Android 12 release. This page summarizes the changes, which fall into four broad categories: the refactor to Python 3, Mobly test framework adoption, test changes, and new tests.

Refactor to Python 3

Due to the Python 2.7 deprecation in January 2020, the entire Camera ITS codebase was refactored to Python 3. The following Python versions and libraries are required in Android 12:

- Python 3.7.9 or Python 3.7.10

- OpenCV 3.4.2

- Numpy 1.19.2

- Matplotlib 3.3.2

- Scipy 1.5.2

- pySerial 3.5

- Pillow 8.1.0

- PyYAML 5.3.1
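The version list above can be checked mechanically. The following is a minimal sketch, not part of Camera ITS: the dictionary keys and the function names `find_mismatches` and `python_is_supported` are hypothetical, and the caller is assumed to supply the installed versions as strings.

```python
"""Compare installed versions against the Android 12 Camera ITS list."""

# Versions copied from the list above. The keys are illustrative names only;
# they are not necessarily the pip distribution names.
REQUIRED_VERSIONS = {
    "opencv": "3.4.2",
    "numpy": "1.19.2",
    "matplotlib": "3.3.2",
    "scipy": "1.5.2",
    "pyserial": "3.5",
    "pillow": "8.1.0",
    "pyyaml": "5.3.1",
}
SUPPORTED_PYTHON = ("3.7.9", "3.7.10")


def find_mismatches(installed, required=REQUIRED_VERSIONS):
    """Returns {name: (required, found)} for missing or different versions."""
    mismatches = {}
    for name, wanted in required.items():
        found = installed.get(name)  # None when the package is absent
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


def python_is_supported(version_string):
    """Returns True if the interpreter version is one of the listed versions."""
    return version_string in SUPPORTED_PYTHON
```

The standard library call `platform.python_version()` returns the interpreter version as a string that can be passed to `python_is_supported`.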

The main test launcher, tools/run_all_tests.py, remains the same as in Android 11 or lower and is refactored to Python 3. All individual tests are refactored and use the new test setup class defined in tests/its_base_test.py. Most test names and functionality remain the same. In Android 12, all individual tests now load their scenes. While scene loading for each test increases overall test time, it enables debugging of individual tests.

For more information on individual test changes, see Test changes.

The following Python modules are refactored with a name change:

- pymodules/its/caps.py → utils/camera_properties_utils.py

- pymodules/its/cv2image.py → utils/opencv_processing_utils.py

- pymodules/its/device.py → utils/its_session_utils.py

- pymodules/its/error.py → utils/error_util.py

- pymodules/its/image.py → utils/image_processing_utils.py

- pymodules/its/objects.py → utils/capture_request_utils.py

- pymodules/its/target.py → utils/target_exposure_utils.py

- tools/hw.py → utils/sensor_fusion_utils.py
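When porting local scripts, the renames above can be kept in a lookup table. This is a minimal sketch: the paths are copied from the list, while the helper names `migrated_path` and `module_name` are hypothetical and not part of Camera ITS.

```python
import os

# Old (Android 11 or lower) path -> new (Android 12) path, from the list above.
RENAMED_MODULES = {
    "pymodules/its/caps.py": "utils/camera_properties_utils.py",
    "pymodules/its/cv2image.py": "utils/opencv_processing_utils.py",
    "pymodules/its/device.py": "utils/its_session_utils.py",
    "pymodules/its/error.py": "utils/error_util.py",
    "pymodules/its/image.py": "utils/image_processing_utils.py",
    "pymodules/its/objects.py": "utils/capture_request_utils.py",
    "pymodules/its/target.py": "utils/target_exposure_utils.py",
    "tools/hw.py": "utils/sensor_fusion_utils.py",
}


def migrated_path(old_path):
    """Returns the Android 12 path for an old module path (KeyError if unknown)."""
    return RENAMED_MODULES[old_path]


def module_name(path):
    """Returns the file name without its directory or .py extension."""
    return os.path.splitext(os.path.basename(path))[0]
```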

Mobly test framework adoption

Camera ITS uses the Mobly test infrastructure to enable better control and logging of the tests. Mobly is a Python-based test framework supporting test cases that require multiple devices with custom hardware setups. For more information on Mobly, see google/mobly.

config.yml files

With the Mobly framework, you can set up a device under test (DUT) and a chart tablet in the its_base_test class. A config.yml (YAML) file is used to create a Mobly testbed. Multiple testbeds can be configured within this config file, for example, a tablet and a sensor fusion testbed. Within each testbed's controller section, you can specify device_ids to identify the appropriate Android devices to the test runner. In addition to the device IDs, other parameters such as tablet brightness, chart_distance, debug_mode, camera_id, and scene_id are passed in the test class. Common test parameter values are:

- brightness: 192 (all tablets except Pixel C)

- chart_distance: 31.0 (rev1/rev1a box for FoV < 90° cameras)

- chart_distance: 22.0 (rev2 test rig for FoV > 90° cameras)
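The two chart_distance values above depend on the camera field of view. The following sketch encodes that choice. The function name `chart_distance_for_fov` is hypothetical, and because the list doesn't say what to use at exactly 90°, the sketch raises an error for that case rather than guessing.

```python
def chart_distance_for_fov(fov_degrees):
    """Returns the chart_distance value listed above for a camera FoV."""
    if fov_degrees < 90:
        return 31.0  # rev1/rev1a box
    if fov_degrees > 90:
        return 22.0  # rev2 test rig
    # Assumption: the boundary case is left undefined, as in the list above.
    raise ValueError("No chart_distance is listed for a FoV of exactly 90 degrees.")
```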

Tablet-based testing

For tablet-based testing, the keyword TABLET must be present in the testbed

name. During initialization, the Mobly test runner initializes TestParams

and passes them to the individual tests.

The following is a sample config.yml file for tablet-based runs.

    TestBeds:
      - Name: TEST_BED_TABLET_SCENES
        # Test configuration for scenes[0:4, 6, _change]
        Controllers:
            AndroidDevice:
              - serial: 8A9X0NS5Z
                label: dut
              - serial: 5B16001229
                label: tablet
        TestParams:
          brightness: 192
          chart_distance: 22.0
          debug_mode: "False"
          chart_loc_arg: ""
          camera: 0
          scene: <scene-name> # if <scene-name> runs all scenes

The testbed can be invoked using tools/run_all_tests.py. If no command-line values are present, the tests run with the config.yml file values. Additionally, you can override the camera and scene config file values at the command line using commands similar to Android 11 or lower.

For example:

    python tools/run_all_tests.py
    python tools/run_all_tests.py camera=1
    python tools/run_all_tests.py scenes=2,1,0
    python tools/run_all_tests.py camera=1 scenes=2,1,0
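The override behavior shown in these commands can be sketched as follows. This illustrates the precedence only and isn't the code in tools/run_all_tests.py. The function names `parse_overrides` and `resolve_run` are hypothetical, and scene values are kept as the raw strings typed at the command line.

```python
def parse_overrides(argv):
    """Parses 'camera=1' and 'scenes=2,1,0' style arguments into a dict."""
    overrides = {}
    for arg in argv:
        key, sep, value = arg.partition("=")
        if not sep:
            raise ValueError(f"Expected key=value, got {arg!r}")
        if key == "camera":
            overrides["camera"] = value
        elif key == "scenes":
            overrides["scenes"] = value.split(",")
        else:
            raise ValueError(f"Unsupported override: {key!r}")
    return overrides


def resolve_run(test_params, argv):
    """Returns (camera, scenes); command-line values win over config values."""
    overrides = parse_overrides(argv)
    camera = overrides.get("camera", str(test_params["camera"]))
    scenes = overrides.get("scenes", [str(test_params["scene"])])
    return camera, scenes
```

With no arguments, `resolve_run` falls back to the `camera` and `scene` entries of the testbed's TestParams section.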

Sensor fusion testing

For sensor fusion testing, the testbed name must include the keyword SENSOR_FUSION. The correct testbed is determined by the scenes tested. Android 12 supports both Arduino and Canakit controllers for sensor fusion.

The following is a sample config.yml file for sensor fusion runs.

    TestBeds:
      - Name: TEST_BED_SENSOR_FUSION
        # Test configuration for sensor_fusion/test_sensor_fusion.py
        Controllers:
            AndroidDevice:
              - serial: 8A9X0NS5Z
                label: dut
        TestParams:
          fps: 30
          img_size: 640,480
          test_length: 7
          debug_mode: "False"
          chart_distance: 25
          rotator_cntl: arduino # cntl can be arduino or canakit
          rotator_ch: 1
          camera: 0

To run sensor fusion tests with the sensor fusion test rig, use:

    python tools/run_all_tests.py scenes=sensor_fusion
    python tools/run_all_tests.py scenes=sensor_fusion camera=0

Multiple testbeds

Multiple testbeds can be included in the config file. The most common combination is to have both a tablet testbed and a sensor fusion testbed.

The following is a sample config.yml file with both tablet and sensor fusion testbeds.

    TestBeds:
      - Name: TEST_BED_TABLET_SCENES
        # Test configuration for scenes[0:4, 6, _change]
        Controllers:
            AndroidDevice:
              - serial: 8A9X0NS5Z
                label: dut
              - serial: 5B16001229
                label: tablet
        TestParams:
          brightness: 192
          chart_distance: 22.0
          debug_mode: "False"
          chart_loc_arg: ""
          camera: 0
          scene: <scene-name> # if <scene-name> runs all scenes

      - Name: TEST_BED_SENSOR_FUSION
        # Test configuration for sensor_fusion/test_sensor_fusion.py
        Controllers:
            AndroidDevice:
              - serial: 8A9X0NS5Z
                label: dut
        TestParams:
          fps: 30
          img_size: 640,480
          test_length: 7
          debug_mode: "False"
          chart_distance: 25
          rotator_cntl: arduino # cntl can be arduino or canakit
          rotator_ch: 1
          camera: 0

Manual testing

Manual testing continues to be supported in Android 12. However, the testbed must identify testing as such with the keyword MANUAL in the testbed name. Additionally, the testbed can't include a tablet ID.

The following is a sample config.yml file for manual testing.

    TestBeds:
      - Name: TEST_BED_MANUAL
        Controllers:
            AndroidDevice:
              - serial: 8A9X0NS5Z
                label: dut
        TestParams:
          debug_mode: "False"
          chart_distance: 31.0
          camera: 0
          scene: scene1

Test scenes without tablets

Testing for scene 0 and scene 5 can be done with TEST_BED_TABLET_SCENES or with TEST_BED_MANUAL. However, if testing is done with TEST_BED_TABLET_SCENES, the tablet must be connected and the tablet serial ID must be valid even though the tablet isn't used, because the test class setup assigns the serial ID value for the tablet.

Run individual tests

Individual tests can be run only for debug purposes because their results aren't reported to CTS Verifier. Because the config.yml values for camera and scene can't be overridden at the command line when running an individual test, these parameters must be correct in the config.yml file for the individual test in question. Additionally, if there is more than one testbed in the config file, you must specify the testbed with the --test_bed flag. For example:

    python tests/scene1_1/test_black_white.py --config config.yml --test_bed TEST_BED_TABLET_SCENES

Test artifacts

In Android 12, test artifacts for Camera ITS are stored similarly to Android 11 or lower but with the following changes:

- The test artifact /tmp directory has CameraITS_ prepended to the 8-character random string for clarity.

- Test output and errors are stored in test_log.DEBUG for each test instead of test_name_stdout.txt and test_name_stderr.txt.

- The DUT and tablet logcats from each individual test are stored in the /tmp/CameraITS_######## directory, which simplifies debugging because all information required to debug 3A issues is logged.
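Given the naming described above, the most recent artifact directory and its logs can be located with a few standard library calls. This is a minimal sketch: the helper names are hypothetical, and because the layout inside the artifact directory isn't described here, the search for test_log.DEBUG files is recursive.

```python
import glob
import os


def latest_artifact_dir(tmp_root="/tmp"):
    """Returns the most recently modified CameraITS_* directory, or None."""
    candidates = glob.glob(os.path.join(tmp_root, "CameraITS_*"))
    directories = [path for path in candidates if os.path.isdir(path)]
    if not directories:
        return None
    return max(directories, key=os.path.getmtime)


def find_debug_logs(artifact_dir):
    """Returns every test_log.DEBUG file at any depth under artifact_dir."""
    pattern = os.path.join(artifact_dir, "**", "test_log.DEBUG")
    return sorted(glob.glob(pattern, recursive=True))
```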

Test changes

In Android 12, the tablet scenes are PNG files rather than PDF files. Using PNG files enables more tablet models to display the scenes properly.

scene0/test_jitter.py

The test_jitter test runs on physical hidden cameras in Android 12.

scene1_1/test_black_white.py

For Android 12, test_black_white has the functionality of both test_black_white and test_channel_saturation.

The following table describes the two individual tests in Android 11.

| Test name | First API level | Assertions |
|---|---|---|
| scene1_1/test_black_white.py | ALL | Short exposure, low gain RGB values ~[0, 0, 0]; long exposure, high gain RGB values ~[255, 255, 255] |
| scene1_1/test_channel_saturation.py | 29 | Reduced tolerance on [255, 255, 255] differences to eliminate color tint in white images. |

The following table describes the merged test, scene1_1/test_black_white.py, in Android 12.

| Test name | First API level | Assertions |
|---|---|---|
| scene1_1/test_black_white.py | ALL | Short exposure, low gain RGB values ~[0, 0, 0]; long exposure, high gain RGB values ~[255, 255, 255] and reduced tolerance between values to eliminate color tint in white images. |

scene1_1/test_burst_sameness_manual.py

The test_burst_sameness_manual test runs on physical hidden cameras in Android 12.

scene1_2/test_tonemap_sequence.py

The test_tonemap_sequence test runs on LIMITED cameras in Android 12.

scene1_2/test_yuv_plus_raw.py

The test_yuv_plus_raw test runs on physical hidden cameras in Android 12.

scene2_a/test_format_combos.py

The test_format_combos test runs on LIMITED cameras in Android 12.

scene3/test_flip_mirror.py

The test_flip_mirror test runs on LIMITED cameras in Android 12.

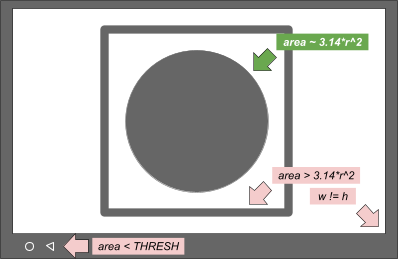

scene4/test_aspect_ratio_and_crop.py

Finding circles in scene4/test_aspect_ratio_and_crop.py was refactored in

Android 12.

Earlier Android versions used a method that involved finding a child contour (the circle) inside the parent contour (the square) with filters for size and color. Android 12 uses a method that involves finding all contours and then filtering by finding features that are the most circlish. To screen out spurious circles on the display, there is a minimum contour area required, and the contour of the circle must be black.

The contours and their selection criteria are shown in the following image.

Figure 1. Conceptual drawing of contours and selection criteria

The Android 12 method is simpler and works to resolve the issue with bounding box clipping in some display tablets. All circle candidates are logged for debugging purposes.
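The selection idea (score every contour for circularity, then apply area and color filters) can be sketched without OpenCV. This isn't the Camera ITS implementation. The circularity measure 4πA/P², the threshold parameters, and the `mean_level` field (average pixel level inside the contour, 0 to 255) are all assumptions chosen for illustration.

```python
import math


def _edges(points):
    """Pairs each vertex with the next one, closing the polygon."""
    return zip(points, points[1:] + points[:1])


def polygon_area(points):
    """Shoelace formula for a closed polygon given as a list of (x, y)."""
    total = sum(x0 * y1 - x1 * y0 for (x0, y0), (x1, y1) in _edges(points))
    return abs(total) / 2.0


def circularity(points):
    """4*pi*area / perimeter**2: 1.0 for a perfect circle, lower otherwise."""
    perimeter = sum(math.hypot(x1 - x0, y1 - y0)
                    for (x0, y0), (x1, y1) in _edges(points))
    if perimeter == 0:
        return 0.0
    return 4 * math.pi * polygon_area(points) / perimeter ** 2


def pick_circle(contours, min_area, min_circularity, max_black_level):
    """Returns the most circular contour that is large and dark enough."""
    candidates = []
    for contour in contours:
        score = circularity(contour["points"])
        if (polygon_area(contour["points"]) >= min_area
                and score >= min_circularity
                and contour["mean_level"] <= max_black_level):
            candidates.append((score, contour))
    if not candidates:
        return None
    return max(candidates, key=lambda item: item[0])[1]
```

A square scores π/4 (about 0.785) on this measure, so a circularity threshold between that value and 1.0 separates the chart's square from its circle.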

In Android 12, the crop test is run for FULL and LEVEL3 devices. Android 11 or lower versions skip the crop test assertions for FULL devices.

The following table lists the assertions for test_aspect_ratio_and_crop.py that correspond to a given device level and first API level.

| Device level | First API level | Assertions |
|---|---|---|
| LIMITED | ALL | Aspect ratio; FoV for 4:3, 16:9, 2:1 formats |
| FULL | < 31 | Aspect ratio; FoV for 4:3, 16:9, 2:1 formats |
| FULL | ≥ 31 | Crop; aspect ratio; FoV for 4:3, 16:9, 2:1 formats |
| LEVEL3 | ALL | Crop; aspect ratio; FoV for 4:3, 16:9, 2:1 formats |
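The table can be read as a small decision function. The sketch below restates it in code. The function name and the assertion labels are hypothetical and don't appear in the test itself.

```python
def aspect_ratio_and_crop_assertions(device_level, first_api_level):
    """Returns the assertion groups from the table above as a list of labels."""
    base = ["aspect ratio", "FoV for 4:3, 16:9, 2:1 formats"]
    if device_level == "LEVEL3":
        return ["crop"] + base
    if device_level == "FULL":
        # Crop assertions apply to FULL devices only from first API level 31.
        return (["crop"] + base) if first_api_level >= 31 else base
    if device_level == "LIMITED":
        return base
    raise ValueError(f"Device level not covered by the table: {device_level!r}")
```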

scene4/test_multi_camera_alignment.py

The method undo_zoom() for YUV captures in scene4/test_multi_camera_alignment.py was refactored to account more accurately for cropping on sensors that do not match the capture's aspect ratio.

Android 11 Python 2 code

    zoom_ratio = min(1.0 * yuv_w / cr_w, 1.0 * yuv_h / cr_h)
    circle[i]['x'] = cr['left'] + circle[i]['x'] / zoom_ratio
    circle[i]['y'] = cr['top'] + circle[i]['y'] / zoom_ratio
    circle[i]['r'] = circle[i]['r'] / zoom_ratio

Android 12 Python 3 code

    yuv_aspect = yuv_w / yuv_h
    relative_aspect = yuv_aspect / (cr_w/cr_h)
    if relative_aspect > 1:
      zoom_ratio = yuv_w / cr_w
      yuv_x = 0
      yuv_y = (cr_h - cr_w / yuv_aspect) / 2
    else:
      zoom_ratio = yuv_h / cr_h
      yuv_x = (cr_w - cr_h * yuv_aspect) / 2
      yuv_y = 0
    circle['x'] = cr['left'] + yuv_x + circle['x'] / zoom_ratio
    circle['y'] = cr['top'] + yuv_y + circle['y'] / zoom_ratio
    circle['r'] = circle['r'] / zoom_ratio
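To see the effect of the change, both fragments can be wrapped in standalone functions and run on the same input. This is a sketch for illustration: the function signatures are hypothetical, and the crop region `cr` is assumed to carry `right` and `bottom` keys from which `cr_w` and `cr_h` are derived.

```python
def _crop_size(cr):
    # Assumption: the crop region dict has left, top, right, and bottom keys.
    return cr["right"] - cr["left"], cr["bottom"] - cr["top"]


def undo_zoom_android11(circle, cr, yuv_w, yuv_h):
    """Android 11 logic: one zoom ratio and no offset for the cropped axis."""
    cr_w, cr_h = _crop_size(cr)
    zoom_ratio = min(yuv_w / cr_w, yuv_h / cr_h)
    return {"x": cr["left"] + circle["x"] / zoom_ratio,
            "y": cr["top"] + circle["y"] / zoom_ratio,
            "r": circle["r"] / zoom_ratio}


def undo_zoom_android12(circle, cr, yuv_w, yuv_h):
    """Android 12 logic: scale by the filled axis and offset the cropped axis."""
    cr_w, cr_h = _crop_size(cr)
    yuv_aspect = yuv_w / yuv_h
    relative_aspect = yuv_aspect / (cr_w / cr_h)
    if relative_aspect > 1:
        # YUV is wider than the crop region: width is filled, rows are cropped.
        zoom_ratio = yuv_w / cr_w
        yuv_x = 0
        yuv_y = (cr_h - cr_w / yuv_aspect) / 2
    else:
        # YUV is narrower (or equal): height is filled, columns are cropped.
        zoom_ratio = yuv_h / cr_h
        yuv_x = (cr_w - cr_h * yuv_aspect) / 2
        yuv_y = 0
    return {"x": cr["left"] + yuv_x + circle["x"] / zoom_ratio,
            "y": cr["top"] + yuv_y + circle["y"] / zoom_ratio,
            "r": circle["r"] / zoom_ratio}
```

For a 4000x3000 crop region and a 1920x1080 capture, a circle at the image center (960, 540) maps back to the crop center (2000, 1500) with the Android 12 logic. The Android 11 logic uses the smaller ratio (0.36) for both axes and places the same circle at roughly (2667, 1500).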

sensor_fusion/test_sensor_fusion.py

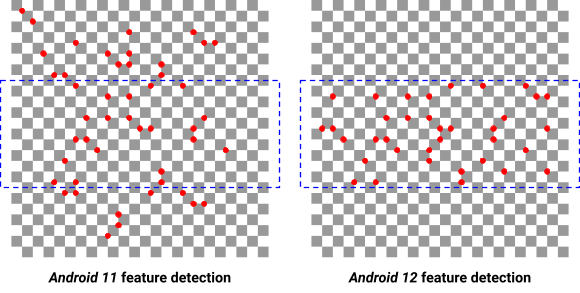

In Android 12, a method for detecting features in images is added for the sensor fusion test.

In versions lower than Android 12, the entire image is used to find the best 240 features which are then masked to the center 20% to avoid rolling shutter effects with the minimum feature requirement being 30 features.

If the features found by this method are insufficient, Android 12 masks the feature detection area to the center 20% first, and limits the maximum features to two times the minimum feature requirement.

The following image shows the difference between Android 11 and Android 12 feature detection. Raising the minimum feature requirement threshold results in the detection of poor quality features and negatively affects measurements.

Figure 2. Difference in feature detection between Android 11 and Android 12
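The difference between the two orders of operation can be shown with plain lists of scored points in place of a real feature detector. This is a sketch under stated assumptions: features are `(x, y, quality)` tuples, the center 20% is taken as a horizontal band along the image height, and the function names are hypothetical. The constants 240, 30, and the factor of two come from the description above.

```python
def center_band(image_height, fraction=0.20):
    """Returns (y_min, y_max) of the centered band covering `fraction` of rows."""
    margin = image_height * (1.0 - fraction) / 2.0
    return margin, image_height - margin


def _strongest(features, count):
    """Keeps the `count` features with the highest quality score."""
    return sorted(features, key=lambda f: f[2], reverse=True)[:count]


def detect_then_mask(features, image_height, max_features=240):
    """Earlier order: pick the best features overall, then keep the band."""
    y_min, y_max = center_band(image_height)
    best = _strongest(features, max_features)
    return [f for f in best if y_min <= f[1] <= y_max]


def mask_then_detect(features, image_height, min_features=30):
    """Android 12 fallback: keep the band first, then pick at most 2x minimum."""
    y_min, y_max = center_band(image_height)
    in_band = [f for f in features if y_min <= f[1] <= y_max]
    return _strongest(in_band, 2 * min_features)
```

When strong features outside the band crowd out weaker ones inside it, the first order can return fewer than the 30 required features even though enough usable features exist in the band. Masking first avoids that.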

New tests

scene0/test_solid_color_test_pattern.py

A new test, test_solid_color_test_pattern, is enabled for Android 12. This test is enabled for all cameras and is described in the following table.

| Scene | Test name | First API level | Description |
|---|---|---|---|
| 0 | test_solid_color_test_pattern | 31 | Confirms solid color image output and image color programmability. |

Solid color test patterns must be enabled to support the camera privacy mode. The test_solid_color_test_pattern test confirms solid color YUV image output with the color defined by the pattern selected, and image color changes according to specification.

Parameters

- cameraPrivacyModeSupport: Determines whether the camera supports privacy mode.

- android.sensor.testPatternMode: Sets the test pattern mode. This test uses SOLID_COLOR.

- android.sensor.testPatternData: Sets the R, Gr, Gb, B test pattern values for the test pattern mode.

For a description of the solid color test pattern, see SENSOR_TEST_PATTERN_MODE_SOLID_COLOR.

Method

YUV frames are captured for the parameters set and the image content is validated. The test pattern is output directly from the image sensor, so no particular scene is required. If PER_FRAME_CONTROL is supported, a single YUV frame is captured for each setting tested. If PER_FRAME_CONTROL isn't supported, four frames are captured with only the last frame analyzed to maximize test coverage in LIMITED cameras.

YUV captures are set to fully saturated BLACK, WHITE, RED, GREEN, and BLUE test patterns. As the test pattern definition is per the sensor Bayer pattern, the color channels must be set for each color as shown in the following table.

| Color | testPatternData (RGGB) |
|---|---|
| BLACK | (0, 0, 0, 0) |
| WHITE | (1, 1, 1, 1) |
| RED | (1, 0, 0, 0) |
| GREEN | (0, 1, 1, 0) |
| BLUE | (0, 0, 0, 1) |
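The color table and the capture rule for PER_FRAME_CONTROL can be written down as data plus two small helpers. This is a sketch only: the tuples use the normalized 0 and 1 values from the table rather than actual sensor code values, and the names `frames_to_capture` and `frame_to_analyze` are hypothetical.

```python
# Color -> (R, Gr, Gb, B) channel values, copied from the table above.
TEST_PATTERN_DATA = {
    "BLACK": (0, 0, 0, 0),
    "WHITE": (1, 1, 1, 1),
    "RED": (1, 0, 0, 0),
    "GREEN": (0, 1, 1, 0),
    "BLUE": (0, 0, 0, 1),
}


def frames_to_capture(per_frame_control_supported):
    """One frame with PER_FRAME_CONTROL, otherwise four frames per setting."""
    return 1 if per_frame_control_supported else 4


def frame_to_analyze(frames):
    """Only the last captured frame is analyzed."""
    return frames[-1]
```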

Assertion table

The following table describes the test assertions for test_solid_color_test_pattern.py.

| Camera first API level | Camera type | Colors asserted |
|---|---|---|
| 31 | Bayer | BLACK, WHITE, RED, GREEN, BLUE |
| 31 | MONO | BLACK, WHITE |
| < 31 | Bayer/MONO | BLACK |
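The assertion table can also be expressed as a lookup. In this sketch, the function name is hypothetical, and the rows listed for first API level 31 are assumed to apply to any first API level of 31 or higher.

```python
def colors_asserted(first_api_level, camera_type):
    """Returns the colors asserted for a camera, following the table above."""
    if camera_type not in ("Bayer", "MONO"):
        raise ValueError(f"Camera type not covered by the table: {camera_type!r}")
    if first_api_level < 31:
        return ["BLACK"]
    # Assumption: levels above 31 follow the rows listed for 31.
    if camera_type == "MONO":
        return ["BLACK", "WHITE"]
    return ["BLACK", "WHITE", "RED", "GREEN", "BLUE"]
```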

Performance class tests

scene2_c/test_camera_launch_perf_class.py

Verifies camera startup is less than 500 ms for both front and rear primary cameras with the scene2_c face scene.

scene2_c/test_jpeg_capture_perf_class.py

Verifies 1080p JPEG capture latency is less than 1 second for both front and rear primary cameras with the scene2_c face scene.