Android Automotive OS (AAOS) builds on the core Android audio stack to support the use cases of a vehicle infotainment system. AAOS is responsible for infotainment sounds (that is, media, navigation, and communications) but isn't directly responsible for chimes and warnings that have strict availability and timing requirements.

While AAOS provides signals and mechanisms to help the vehicle manage audio, the vehicle ultimately decides which sounds are played for the driver and passengers, ensuring that safety-critical and regulatory sounds are heard without interruption.

Because AAOS uses the Android audio stack, third-party apps that play audio don't need to do anything differently than they would on phones. AAOS automatically manages each app's audio routing, as described in Audio policy configuration.

As Android manages the vehicle’s media experience, external media sources such as the radio tuner should be represented by apps, which can handle audio focus and media key events for the source.

Android sounds and streams

Automotive audio systems handle the following sounds and streams:

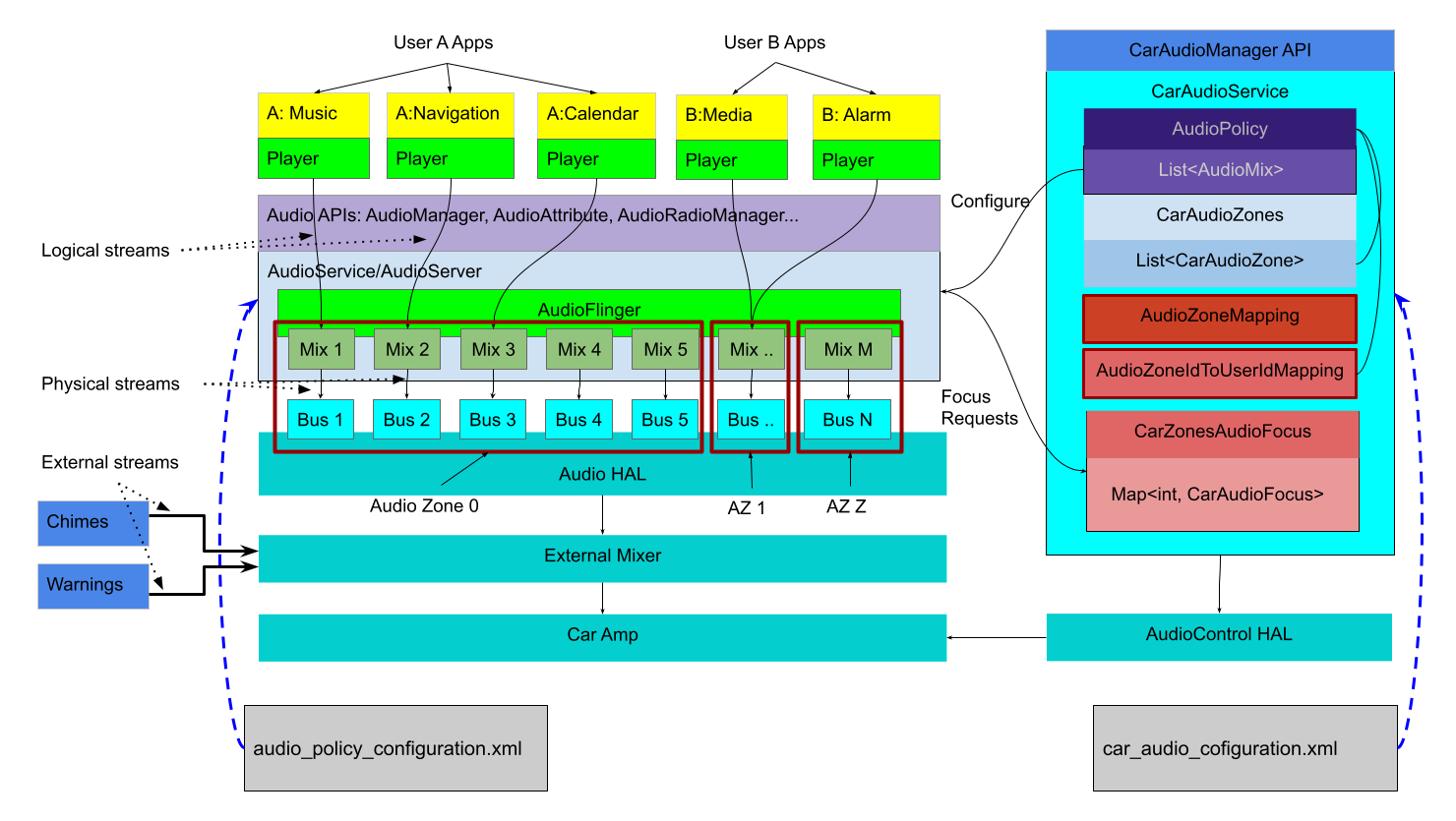

Figure 1. Stream-centric architecture diagram.

Android manages the sounds coming from Android apps, controlling those apps and routing their sounds to output devices at the HAL based on the type of sound:

- Logical streams, known as sources in core audio nomenclature, are tagged with Audio attributes.

- Physical streams, known as devices in core audio nomenclature, have no context information after mixing.

For reliability, external sounds (coming from independent sources, such as seatbelt warning chimes) are managed outside Android, below the HAL or even in separate hardware. System implementers must provide a mixer that accepts one or more streams of sound input from Android and then combines those streams in a suitable way with the external sound sources required by the vehicle. The Audio Control HAL provides a separate mechanism that lets sounds generated outside Android communicate back to Android:

- Audio focus request

- Gain or volume limitations

- Gain and volume changes

The audio HAL implementation and external mixer are responsible for ensuring the safety-critical external sounds are heard and for mixing in the Android-provided streams and routing them to suitable speakers.
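As a rough illustration of that responsibility, the sketch below models an external mixer in software. It is only a sketch under stated assumptions: real mixers often run on a DSP or in dedicated hardware, and the class name `ExternalMixerSketch`, the `duckGain` parameter, and the use of `null` to mean "no external sound is playing" are all hypothetical. It attenuates (ducks) the Android stream while an external sound is active so that the external sound stays audible.

```java
/** Hypothetical software model of an external mixer (not an AAOS API). */
final class ExternalMixerSketch {
    /** Gain applied to Android audio while an external sound plays (assumed value). */
    private final float duckGain;

    ExternalMixerSketch(float duckGain) {
        this.duckGain = duckGain;
    }

    /**
     * Mixes one buffer of 16-bit PCM from Android with an external sound.
     * Pass null for external when no external sound is playing.
     */
    short[] mix(short[] android, short[] external) {
        boolean externalActive = external != null;
        // Duck the Android stream only while the external sound is active.
        float gain = externalActive ? duckGain : 1.0f;
        short[] out = new short[android.length];
        for (int i = 0; i < android.length; i++) {
            int sample = Math.round(android[i] * gain);
            if (externalActive && i < external.length) {
                sample += external[i];
            }
            // Clamp to the 16-bit range to avoid wrap-around distortion.
            out[i] = (short) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, sample));
        }
        return out;
    }
}
```

With a `duckGain` of 0.25, for example, Android audio passes through unchanged when no chime is playing and drops to a quarter of its amplitude while the chime is mixed in.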

Android sounds

Apps may have one or more players that interact through the standard Android APIs (for example, AudioManager for focus control or MediaPlayer for streaming) to emit one or more logical streams of audio data. This data could be single-channel mono or 7.1 surround, but is routed and treated as a single source. Each app stream is associated with AudioAttributes that give the system hints about how the audio should be expressed.

The logical streams are sent through AudioService and routed to one (and only one) of the available physical output streams, each of which is the output of a mixer within AudioFlinger. After the logical streams have been mixed down to a physical stream, their audio attributes are no longer available.
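The loss of context after mixing can be pictured with a small model. This is not how AudioFlinger is implemented; it is a minimal sketch in which `LogicalStream`, `PhysicalStreamMixer`, and the string `usage` tag (a stand-in for AudioAttributes) are hypothetical names chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model: a logical stream is PCM samples plus a usage tag. */
final class LogicalStream {
    final String usage;     // hypothetical stand-in for AudioAttributes
    final short[] samples;  // 16-bit PCM, mono for brevity

    LogicalStream(String usage, short[] samples) {
        this.usage = usage;
        this.samples = samples;
    }
}

/** Simplified model of one mixer whose output is one physical stream. */
final class PhysicalStreamMixer {
    private final List<LogicalStream> inputs = new ArrayList<>();

    void attach(LogicalStream stream) {
        inputs.add(stream);
    }

    /** Sums all inputs sample by sample, clamping to the 16-bit range. */
    short[] mix(int frameCount) {
        short[] out = new short[frameCount];
        for (int i = 0; i < frameCount; i++) {
            int sum = 0;
            for (LogicalStream s : inputs) {
                if (i < s.samples.length) {
                    sum += s.samples[i];
                }
            }
            out[i] = (short) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, sum));
        }
        // The result is plain sample data: the usage tags do not survive the mix.
        return out;
    }
}
```

The returned array carries no trace of which usage contributed which samples, which is why any per-context treatment has to happen before this point or rely on separate physical streams.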

Each physical stream is then delivered to the Audio HAL for rendering on the hardware. In automotive systems, rendering hardware can be local codecs (similar to mobile devices) or a remote processor across the vehicle's physical network. Either way, it's the job of the Audio HAL implementation to deliver the actual sample data and cause it to become audible.

External streams

Sound streams that shouldn't be routed through Android (for certification or timing reasons) may be sent directly to the external mixer. Starting in Android 11, the HAL can request focus for these external sounds so that Android can take appropriate actions, such as pausing media or preventing others from gaining focus.

If external streams are media sources that should interact with the sound environment Android is generating (for example, stop MP3 playback when an external tuner is turned on), those external streams should be represented by an Android app. Such an app requests audio focus on behalf of the media source instead of the HAL, and responds to focus notifications by starting and stopping the external source as necessary to fit the Android focus policy.

The app is also responsible for handling media key events such as play and pause. One suggested mechanism to control such external devices is HwAudioSource. To learn more, see Connect an input device in AAOS.
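The focus behavior described for such an app can be sketched as a small state handler. To keep the example runnable off-device, it doesn't call the Android framework: the `ExternalSource` interface and `ExternalSourceFocusHandler` class are hypothetical, and the constants copy the values of the corresponding `AudioManager.AUDIOFOCUS_*` fields. In a real app, the same logic would likely live in an `AudioManager.OnAudioFocusChangeListener`. Stopping the source on a duckable loss, rather than lowering its volume, is an assumption of this sketch.

```java
/** Hypothetical handle to an external source such as a radio tuner. */
interface ExternalSource {
    void start();
    void stop();
}

/** Sketch of focus handling that starts and stops an external source. */
final class ExternalSourceFocusHandler {
    // Values mirror AudioManager.AUDIOFOCUS_* so the sketch runs off-device.
    static final int AUDIOFOCUS_GAIN = 1;
    static final int AUDIOFOCUS_LOSS = -1;
    static final int AUDIOFOCUS_LOSS_TRANSIENT = -2;
    static final int AUDIOFOCUS_LOSS_TRANSIENT_CAN_DUCK = -3;

    private final ExternalSource source;
    private boolean playing = false;
    private boolean resumeOnGain = false;

    ExternalSourceFocusHandler(ExternalSource source) {
        this.source = source;
    }

    boolean isPlaying() {
        return playing;
    }

    /** Call once the app's focus request has been granted. */
    void onFocusGranted() {
        startSource();
    }

    /** Same shape as OnAudioFocusChangeListener#onAudioFocusChange. */
    void onAudioFocusChange(int focusChange) {
        switch (focusChange) {
            case AUDIOFOCUS_GAIN:
                if (resumeOnGain) {
                    startSource();
                }
                resumeOnGain = false;
                break;
            case AUDIOFOCUS_LOSS_TRANSIENT:
            case AUDIOFOCUS_LOSS_TRANSIENT_CAN_DUCK:
                // Assumption: this sketch stops the source instead of ducking it.
                resumeOnGain = playing;
                stopSource();
                break;
            case AUDIOFOCUS_LOSS:
                // Permanent loss: do not resume automatically.
                resumeOnGain = false;
                stopSource();
                break;
            default:
                break;
        }
    }

    private void startSource() {
        if (!playing) {
            source.start();
            playing = true;
        }
    }

    private void stopSource() {
        if (playing) {
            source.stop();
            playing = false;
        }
    }
}
```

After a transient loss the handler resumes the source when focus returns, while a permanent loss leaves it stopped until the app requests focus again.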

Output devices

At the Audio HAL level, the device type AUDIO_DEVICE_OUT_BUS provides a generic output device for use in vehicle audio systems. The bus device supports addressable ports (where each port is the end point for a physical stream) and is expected to be the only supported output device type in a vehicle.

A system implementation can use one bus port for all Android sounds, in which case Android mixes everything together and delivers it as one stream. Alternatively, the HAL can provide one bus port for each CarAudioContext to allow concurrent delivery of any sound type. This makes it possible for the HAL implementation to mix and duck the different sounds as desired.

The assignment of audio contexts to output devices is done through the car_audio_configuration.xml file. To learn more, see Audio policy configuration.
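The two layouts described above (one shared bus port versus one port per context) amount to different context-to-address tables. The sketch below models such a table in memory. It doesn't parse car_audio_configuration.xml, and the class name `ContextToBusRouter`, the context names, and the bus addresses are hypothetical examples rather than values taken from a real configuration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical in-memory model of context-to-bus assignments. */
final class ContextToBusRouter {
    private final Map<String, String> busByContext = new LinkedHashMap<>();

    /** Assigns an audio context to the bus port with the given address. */
    ContextToBusRouter assign(String context, String busAddress) {
        busByContext.put(context, busAddress);
        return this;
    }

    /** Returns the bus address for a context, or throws if it is unassigned. */
    String busFor(String context) {
        String bus = busByContext.get(context);
        if (bus == null) {
            throw new IllegalArgumentException("No bus assigned for context: " + context);
        }
        return bus;
    }
}
```

A single-port layout assigns every context to the same address, so Android mixes everything into one stream. A per-context layout might instead use `new ContextToBusRouter().assign("music", "bus0_media_out").assign("navigation", "bus1_navigation_out")`, which keeps the streams separate so the HAL implementation can mix and duck them itself.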