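In the previous version of the Exterior View System (EVS), the IEvsCameraStream interface defined a single callback method that delivered captured video frames only. While this simplified EVS service client implementations, it also made it difficult for clients to identify streaming incidents and therefore to handle them properly. To improve the EVS development experience, AOSP now contains an additional callback to deliver streaming events.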

    package android.hardware.automotive.evs@1.1;

    import @1.0::IEvsCameraStream;

    /**
     * Implemented on client side to receive asynchronous video frame deliveries.
     */
    interface IEvsCameraStream extends @1.0::IEvsCameraStream {
        /**
         * Receives calls from the HAL each time a video frame is ready for inspection.
         * Buffer handles received by this method must be returned via calls to
         * IEvsCamera::doneWithFrame_1_1(). When the video stream is stopped via a call
         * to IEvsCamera::stopVideoStream(), this callback may continue to happen for
         * some time as the pipeline drains. Each frame must still be returned.
         * When the last frame in the stream has been delivered, STREAM_STOPPED
         * event must be delivered. No further frame deliveries may happen
         * thereafter.
         *
         * @param buffer a buffer descriptor of a delivered image frame.
         */
        oneway deliverFrame_1_1(BufferDesc buffer);

        /**
         * Receives calls from the HAL each time an event happens.
         *
         * @param event EVS event with possible event information.
         */
        oneway notify(EvsEvent event);
    };

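This method delivers an EvsEventDesc, which consists of three fields:

- The type of the event.

- A string that identifies the origin of the event.

- Four 32-bit words of data that can carry additional event information.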

    /**
     * Structure that describes informative events occurred during EVS is streaming
     */
    struct EvsEvent {
        /**
         * Type of an informative event
         */
        EvsEventType aType;
        /**
         * Device identifier
         */
        string deviceId;
        /**
         * Possible additional information
         */
        uint32_t[4] payload;
    };

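To avoid any divergence in how graphics buffers are described between EVS and other Android graphics components, BufferDesc has been redefined to use HardwareBuffer, imported from the android.hardware.graphics.common@1.2 interface. HardwareBuffer contains HardwareBufferDescription, which is the HIDL counterpart of the Android NDK's AHardwareBuffer_Desc, together with a buffer handle.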

    /**
     * HIDL counterpart of AHardwareBuffer_Desc.
     *
     * An AHardwareBuffer_Desc object can be converted to and from a
     * HardwareBufferDescription object by memcpy().
     *
     * @sa +ndk libnativewindow#AHardwareBuffer_Desc.
     */
    typedef uint32_t[10] HardwareBufferDescription;

    /**
     * HIDL counterpart of AHardwareBuffer.
     *
     * AHardwareBuffer_createFromHandle() can be used to convert a HardwareBuffer
     * object to an AHardwareBuffer object.
     *
     * Conversely, AHardwareBuffer_getNativeHandle() can be used to extract a native
     * handle from an AHardwareBuffer object. Paired with AHardwareBuffer_Desc,
     * AHardwareBuffer_getNativeHandle() can be used to convert between
     * HardwareBuffer and AHardwareBuffer.
     *
     * @sa +ndk libnativewindow#AHardwareBuffer.
     */
    struct HardwareBuffer {
        HardwareBufferDescription description;
        handle nativeHandle;
    };

    /**
     * Structure representing an image buffer through our APIs
     *
     * In addition to the handle to the graphics memory, need to retain
     * the properties of the buffer for easy reference and reconstruction of
     * an ANativeWindowBuffer object on the remote side of API calls.
     * Not least because OpenGL expects an ANativeWindowBuffer* for use as a
     * texture via eglCreateImageKHR().
     */
    struct BufferDesc {
        /**
         * HIDL counterpart of AHardwareBuffer_Desc. Please see
         * hardware/interfaces/graphics/common/1.2/types.hal for more details.
         */
        HardwareBuffer buffer;

        /**
         * The size of a pixel in the units of bytes
         */
        uint32_t pixelSize;

        /**
         * Opaque value from driver
         */
        uint32_t bufferId;

        /**
         * Unique identifier of the physical camera device that produces this buffer.
         */
        string deviceId;

        /**
         * Time that this buffer is being filled
         */
        int64_t timestamp;

        /**
         * Frame metadata. This is opaque to EVS manager
         */
        vec<uint8_t> metadata;
    };

Note: HardwareBufferDescription is defined as an array of ten 32-bit words. You may want to cast it to the AHardwareBuffer_Desc type and then fill in its contents.
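The HIDL comment for HardwareBufferDescription states that it can be converted to and from AHardwareBuffer_Desc with memcpy(). The sketch below illustrates that route off-device. It is a minimal sketch under stated assumptions: `AHardwareBufferDescMirror` is a hypothetical local copy of the NDK struct layout, `std::array<uint32_t, 10>` stands in for the generated HIDL array type, and `toHidl()` and `fromHidl()` are illustrative helper names rather than part of any Android API.

```cpp
#include <array>
#include <cstdint>
#include <cstring>

// Hypothetical stand-in that mirrors the field order of the NDK's
// AHardwareBuffer_Desc so the sketch compiles without Android headers.
// On a device, include <android/hardware_buffer.h> and use the real type.
struct AHardwareBufferDescMirror {
    uint32_t width;
    uint32_t height;
    uint32_t layers;
    uint32_t format;
    uint64_t usage;
    uint32_t stride;
    uint32_t rfu0;  // reserved
    uint64_t rfu1;  // reserved
};

// Stand-in for the HIDL typedef uint32_t[10] HardwareBufferDescription.
using HardwareBufferDescription = std::array<uint32_t, 10>;

// The memcpy() conversion is only valid if both types have the same size.
static_assert(sizeof(AHardwareBufferDescMirror) == sizeof(HardwareBufferDescription),
              "descriptor must occupy exactly ten 32-bit words");

// Copies a descriptor into its ten-word HIDL representation.
HardwareBufferDescription toHidl(const AHardwareBufferDescMirror& desc) {
    HardwareBufferDescription words{};
    std::memcpy(words.data(), &desc, sizeof(desc));
    return words;
}

// Rebuilds a descriptor from its ten-word HIDL representation.
AHardwareBufferDescMirror fromHidl(const HardwareBufferDescription& words) {
    AHardwareBufferDescMirror desc{};
    std::memcpy(&desc, words.data(), sizeof(desc));
    return desc;
}
```

Copying with memcpy() rather than casting a pointer keeps the conversion independent of alignment and aliasing concerns, at the cost of one 40-byte copy per buffer.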

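EvsEventDesc is a struct that combines an EvsEventType enum value, which lists the streaming events, with a payload of 32-bit words in which the developer can place additional information. For example, the developer can place an error code for a streaming error event.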

    /**
     * Types of informative streaming events
     */
    enum EvsEventType : uint32_t {
        /**
         * Video stream is started
         */
        STREAM_STARTED = 0,
        /**
         * Video stream is stopped
         */
        STREAM_STOPPED,
        /**
         * Video frame is dropped
         */
        FRAME_DROPPED,
        /**
         * Timeout happens
         */
        TIMEOUT,
        /**
         * Camera parameter is changed; payload contains a changed parameter ID and
         * its value
         */
        PARAMETER_CHANGED,
        /**
         * Master role has become available
         */
        MASTER_RELEASED,
    };
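To illustrate how the payload words might be used, the sketch below builds a PARAMETER_CHANGED event. It is a minimal sketch under stated assumptions: the types are local mirrors of the HIDL definitions so the code compiles without the generated headers, `makeParameterChanged()` is a hypothetical helper, and placing the parameter ID in `payload[0]` and its value in `payload[1]` is an assumed ordering, since the enum comment only says that the payload contains both.

```cpp
#include <array>
#include <cstdint>
#include <string>

// Local mirror of the HIDL enum shown above.
enum class EvsEventType : uint32_t {
    STREAM_STARTED = 0,
    STREAM_STOPPED,
    FRAME_DROPPED,
    TIMEOUT,
    PARAMETER_CHANGED,
    MASTER_RELEASED,
};

// Local mirror of the event descriptor: type, source device, four payload words.
struct EvsEventDesc {
    EvsEventType aType;
    std::string deviceId;
    std::array<uint32_t, 4> payload;
};

// Hypothetical helper: describes a camera parameter change.
// Assumed layout: payload[0] = parameter ID, payload[1] = new value.
EvsEventDesc makeParameterChanged(const std::string& deviceId,
                                  uint32_t parameterId,
                                  uint32_t newValue) {
    EvsEventDesc event{};  // value-initialized, so unused payload words are zero
    event.aType = EvsEventType::PARAMETER_CHANGED;
    event.deviceId = deviceId;
    event.payload[0] = parameterId;
    event.payload[1] = newValue;
    return event;
}
```

Whatever layout is chosen, the service and its clients need to agree on it, because the payload carries no self-describing structure.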

Frame delivery

With the new BufferDesc, IEvsCameraStream also introduces new callback methods to receive frames and streaming events from the service implementation.

    /**
     * Implemented on client side to receive asynchronous streaming event deliveries.
     */
    interface IEvsCameraStream extends @1.0::IEvsCameraStream {
        /**
         * Receives calls from the HAL each time video frames are ready for inspection.
         * Buffer handles received by this method must be returned via calls to
         * IEvsCamera::doneWithFrame_1_1(). When the video stream is stopped via a call
         * to IEvsCamera::stopVideoStream(), this callback may continue to happen for
         * some time as the pipeline drains. Each frame must still be returned.
         * When the last frame in the stream has been delivered, STREAM_STOPPED
         * event must be delivered. No further frame deliveries may happen
         * thereafter.
         *
         * A camera device delivers the same number of frames as number of
         * backing physical camera devices; it means, a physical camera device
         * sends always a single frame and a logical camera device sends multiple
         * frames as many as the number of backing physical camera devices.
         *
         * @param buffer Buffer descriptors of delivered image frames.
         */
        oneway deliverFrame_1_1(vec<BufferDesc> buffer);

        /**
         * Receives calls from the HAL each time an event happens.
         *
         * @param event EVS event with possible event information.
         */
        oneway notify(EvsEventDesc event);
    };

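The newer frame callback method is designed to deliver multiple buffer descriptors. An EVS camera implementation that manages multiple sources can therefore forward several frames in a single call.

In addition, the previous protocol for signaling the end of the stream, which was to send a null frame, has been deprecated and replaced with the STREAM_STOPPED event.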
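On the client side, these two changes mean that one frame callback can carry several buffers, each of which must be returned, and that the end of the stream arrives as an event instead of a null frame. The sketch below models that bookkeeping. It is a minimal sketch under stated assumptions: `StreamTracker` is a hypothetical class, the structs are trimmed local mirrors of the HIDL types, and the returned buffer IDs represent the frames a real client would hand back through IEvsCamera::doneWithFrame_1_1().

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

enum class EvsEventType : uint32_t {
    STREAM_STARTED = 0,
    STREAM_STOPPED,
    FRAME_DROPPED,
    TIMEOUT,
    PARAMETER_CHANGED,
    MASTER_RELEASED,
};

// Trimmed local mirrors; the real types carry more fields.
struct BufferDesc {
    uint32_t bufferId;
    std::string deviceId;
};

struct EvsEventDesc {
    EvsEventType aType;
};

// Hypothetical client-side bookkeeping for a v1.1 stream.
class StreamTracker {
public:
    // Models deliverFrame_1_1(vec<BufferDesc>): a logical camera may deliver
    // one frame per backing physical camera in a single call. Every frame is
    // reported back so the caller can return it, even while the pipeline is
    // draining after a stop request.
    std::vector<uint32_t> onFrames(const std::vector<BufferDesc>& frames) {
        std::vector<uint32_t> toReturn;
        toReturn.reserve(frames.size());
        for (const BufferDesc& frame : frames) {
            ++mFramesSeen;
            toReturn.push_back(frame.bufferId);
        }
        return toReturn;
    }

    // Models notify(): STREAM_STOPPED, not a null frame, marks end of stream.
    void onEvent(const EvsEventDesc& event) {
        switch (event.aType) {
            case EvsEventType::STREAM_STARTED: mStreaming = true; break;
            case EvsEventType::STREAM_STOPPED: mStreaming = false; break;
            case EvsEventType::FRAME_DROPPED: ++mFramesDropped; break;
            default: break;  // other events are ignored in this sketch
        }
    }

    bool streaming() const { return mStreaming; }
    std::size_t framesSeen() const { return mFramesSeen; }
    std::size_t framesDropped() const { return mFramesDropped; }

private:
    bool mStreaming = false;
    std::size_t mFramesSeen = 0;
    std::size_t mFramesDropped = 0;
};
```

Keeping frame handling separate from the streaming state means that frames arriving after a stop request are still returned, which matches the requirement in the interface comment above.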

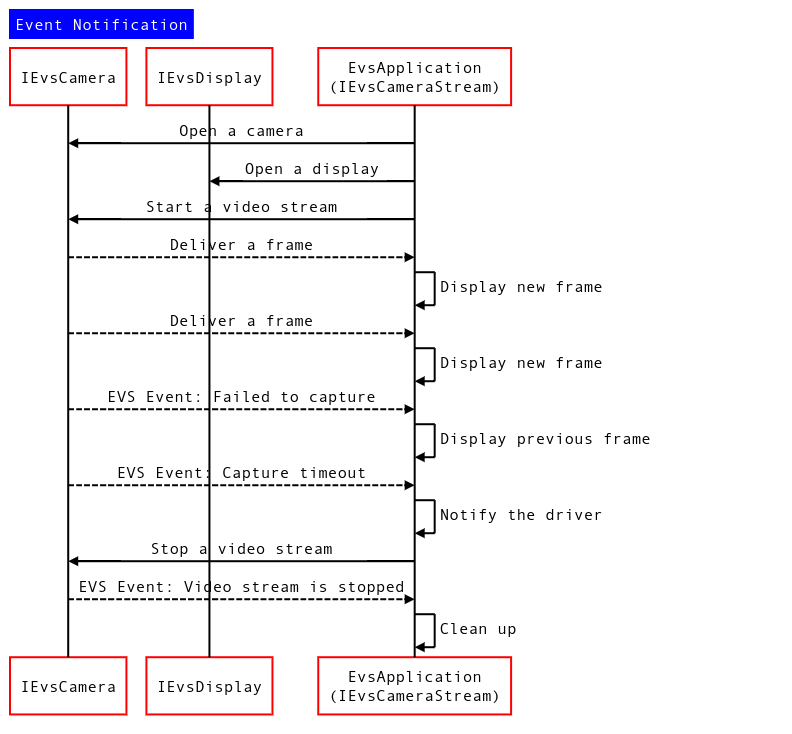

Figure 1. Event notification sequence diagram

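Use the event and frame notification mechanism

Identify the version of IEvsCameraStream implemented by the client

The service can identify the version of the incoming IEvsCameraStream interface implemented by the client by attempting a downcast: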

    using IEvsCameraStream_1_0 =
        ::android::hardware::automotive::evs::V1_0::IEvsCameraStream;
    using IEvsCameraStream_1_1 =
        ::android::hardware::automotive::evs::V1_1::IEvsCameraStream;

    Return<EvsResult> EvsV4lCamera::startVideoStream(
            const sp<IEvsCameraStream_1_0>& stream) {
        sp<IEvsCameraStream_1_0> aStream = stream;
        // Try to downcast. This succeeds if the client implements
        // IEvsCameraStream v1.1.
        sp<IEvsCameraStream_1_1> aStream_1_1 =
            IEvsCameraStream_1_1::castFrom(aStream).withDefault(nullptr);
        if (aStream_1_1 == nullptr) {
            ALOGI("Start a stream for v1.0 client.");
        } else {
            ALOGI("Start a stream for v1.1 client.");
        }

        // Start a video stream
        ...
    }
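Once the client version is known, the service can choose the matching end-of-stream protocol: the STREAM_STOPPED event for a v1.1 client, or the older null frame for a v1.0 client. The sketch below shows that branching off-device. It is a minimal sketch under stated assumptions: `StreamV1_0`, `StreamV1_1`, `FrameV1_0`, and `signalEndOfStream()` are hypothetical stand-ins, and `std::dynamic_pointer_cast` plays the role that `castFrom()` plays for real HIDL interfaces.

```cpp
#include <cstdint>
#include <memory>
#include <vector>

enum class EvsEventType : uint32_t {
    STREAM_STARTED = 0,
    STREAM_STOPPED,
    FRAME_DROPPED,
    TIMEOUT,
    PARAMETER_CHANGED,
    MASTER_RELEASED,
};

// Hypothetical stand-in for a v1.0 frame; a null handle marks end of stream.
struct FrameV1_0 {
    const void* memHandle;
};

// Hypothetical stand-ins for the v1.0 and v1.1 stream interfaces.
struct StreamV1_0 {
    virtual ~StreamV1_0() = default;
    virtual void deliverFrame(const FrameV1_0& frame) = 0;
};

struct StreamV1_1 : StreamV1_0 {
    virtual void notify(EvsEventType type) = 0;
};

// Signals end of stream using the protocol that the client understands.
// Returns true when the v1.1 event path was used.
bool signalEndOfStream(const std::shared_ptr<StreamV1_0>& stream) {
    if (!stream) {
        return false;
    }
    // The downcast succeeds only if the client implements the v1.1 interface.
    if (auto stream_1_1 = std::dynamic_pointer_cast<StreamV1_1>(stream)) {
        stream_1_1->notify(EvsEventType::STREAM_STOPPED);
        return true;
    }
    // Legacy protocol: a frame with a null handle ends the stream.
    stream->deliverFrame(FrameV1_0{nullptr});
    return false;
}

// Minimal recording clients, used here only to exercise the helper.
struct LegacyClient : StreamV1_0 {
    int nullFrames = 0;
    void deliverFrame(const FrameV1_0& frame) override {
        if (frame.memHandle == nullptr) ++nullFrames;
    }
};

struct ModernClient : StreamV1_1 {
    std::vector<EvsEventType> events;
    void deliverFrame(const FrameV1_0&) override {}
    void notify(EvsEventType type) override { events.push_back(type); }
};
```

Passing a `LegacyClient` to `signalEndOfStream()` results in one null frame, while passing a `ModernClient` results in a single STREAM_STOPPED event and no null frame.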

notify() callback

EvsEvent is passed through the notify() callback, and the client can then identify its type from the discriminator, as shown below:

    Return<void> StreamHandler::notify(const EvsEvent& event) {
        // Cast the scoped enum explicitly so it matches the %u format specifier.
        ALOGD("Received an event id: %u", static_cast<uint32_t>(event.aType));
        // Handle each received event.
        switch (event.aType) {
            case EvsEventType::TIMEOUT:
                // Do something to handle a timeout
                ...
                break;
            [More cases]
        }
        return Void();
    }

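Use BufferDesc

AHardwareBuffer_Desc is the Android NDK's data type for describing a native hardware buffer that can be bound to EGL/OpenGL and Vulkan primitives. It contains most of the buffer metadata from the previous EVS BufferDesc and therefore replaces it in the new BufferDesc definition. However, because the description is defined as an array in the HIDL interface, its member variables cannot be accessed directly by name. Instead, you can cast the array to the AHardwareBuffer_Desc type, as shown below: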

    BufferDesc bufDesc = {};
    // View the ten-word description array as an AHardwareBuffer_Desc.
    AHardwareBuffer_Desc* pDesc =
        reinterpret_cast<AHardwareBuffer_Desc*>(&bufDesc.buffer.description);
    pDesc->width = mVideo.getWidth();
    pDesc->height = mVideo.getHeight();
    pDesc->layers = 1;
    pDesc->format = mFormat;
    pDesc->usage = mUsage;
    pDesc->stride = mStride;
    bufDesc.buffer.nativeHandle = mBuffers[idx].handle;
    bufDesc.bufferId = idx;