Android's camera hardware abstraction layer (HAL) connects the higher-level camera framework APIs in android.hardware.camera2 to your underlying camera driver and hardware. Android 8.0 introduced Treble, switching the camera HAL API to a stable interface defined by the HAL interface definition language (HIDL). Starting with Android 13, camera HAL interface development uses AIDL. If you've previously developed a camera HAL module and driver for Android 7.0 or lower, be aware of significant changes in the camera pipeline.

AIDL camera HAL

For devices running Android 13 or higher, the camera framework includes support for AIDL camera HALs. The camera framework also supports HIDL camera HALs; however, camera features added in Android 13 or higher are available only through the AIDL camera HAL interfaces. To implement such features on devices upgrading to Android 13 or higher, device manufacturers must migrate their HAL process from HIDL camera interfaces to AIDL camera interfaces.

To learn about the advantages of AIDL, see AIDL for HALs.

Implement AIDL camera HAL

For a reference implementation of an AIDL camera HAL, see hardware/google/camera/common/hal/aidl_service/.

The AIDL camera HAL specifications are in the following locations:

- Camera provider: hardware/interfaces/camera/provider/aidl/

- Camera device: hardware/interfaces/camera/device/aidl/

- Camera metadata: hardware/interfaces/camera/metadata/aidl/

- Common data types: hardware/interfaces/camera/common/aidl/

For devices migrating to AIDL, device manufacturers might need to modify the Android SELinux policy (sepolicy) and RC files depending on the code structure.

Validate AIDL camera HAL

To test your AIDL camera HAL implementation, ensure that the device passes all CTS and VTS tests. Android 13 introduces the AIDL VTS test, VtsAidlHalCameraProvider_TargetTest.cpp.

Camera HAL3 features

The aim of the Android Camera API redesign is to substantially increase the ability of apps to control the camera subsystem on Android devices while reorganizing the API to make it more efficient and maintainable. The additional control makes it easier to build high-quality camera apps on Android devices that can operate reliably across multiple products while still using device-specific algorithms whenever possible to maximize quality and performance.

Version 3 of the camera subsystem structures the operation modes into a single unified view, which can be used to implement any of the previous modes and several others, such as burst mode. This results in better user control for focus and exposure and more post-processing, such as noise reduction, contrast and sharpening. Further, this simplified view makes it easier for application developers to use the camera's various functions.

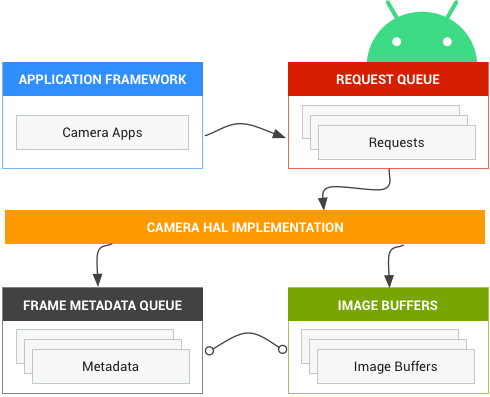

The API models the camera subsystem as a pipeline that converts incoming requests for frame captures into frames, on a 1:1 basis. The requests encapsulate all configuration information about the capture and processing of a frame. This includes resolution and pixel format; manual sensor, lens and flash control; 3A operating modes; RAW->YUV processing control; statistics generation; and so on.

In simple terms, the application framework requests a frame from the camera subsystem, and the camera subsystem returns results to an output stream. In addition, metadata that contains information such as color spaces and lens shading is generated for each set of results. You can think of camera version 3 as a pipeline, in contrast to camera version 1's one-way stream. It converts each capture request into one image captured by the sensor, which is processed into:

- A result object with metadata about the capture.

- One to N buffers of image data, each into its own destination surface.
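The 1:1 request-to-result contract can be sketched as a small model. This is a minimal illustration under stated assumptions: the types CaptureRequest, Buffer, and CaptureResult and the function processRequest are hypothetical and are not the real AIDL HAL or camera2 types. The sketch only shows that each request yields exactly one result carrying metadata plus one buffer per requested output stream.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified model of the HAL3 request/result contract.
// None of these types are the real AIDL or camera2 types.
struct CaptureRequest {
    uint32_t frameNumber;
    int64_t exposureTimeNs;            // example manual sensor setting
    std::vector<int> outputStreamIds;  // the 1..N target streams for this request
};

struct Buffer {
    int streamId;          // destination surface/stream for this buffer
    uint32_t frameNumber;  // ties the buffer back to its request
};

struct CaptureResult {
    uint32_t frameNumber;
    int64_t exposureTimeNs;       // metadata describing the applied settings
    std::vector<Buffer> buffers;  // one buffer per requested output stream
};

// Processes one request into exactly one result (1:1), filling one
// buffer for each output stream named in the request.
CaptureResult processRequest(const CaptureRequest& req) {
    CaptureResult result{req.frameNumber, req.exposureTimeNs, {}};
    for (int id : req.outputStreamIds) {
        result.buffers.push_back(Buffer{id, req.frameNumber});
    }
    return result;
}
```

A real HAL returns results and buffers asynchronously and may deliver metadata in several partial results; the model collapses that into a single synchronous call for clarity.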

The set of possible output surfaces is preconfigured:

- Each surface is a destination for a stream of image buffers of a fixed resolution.

- Only a small number of surfaces can be configured as outputs at once (~3).
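The preconfigured output set can be modeled as a container that fixes each stream's resolution at configuration time and caps how many streams exist at once. This is a hypothetical sketch: StreamSet, StreamConfig, and the constant kMaxConfiguredStreams are illustrative names, and the limit of three simply mirrors the "~3" guideline above; actual limits are device-specific.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical limit taken from the "~3" guideline; real devices report
// their own supported stream combinations.
constexpr std::size_t kMaxConfiguredStreams = 3;

struct StreamConfig {
    int width;
    int height;  // fixed for the lifetime of the configuration
};

class StreamSet {
public:
    // Replaces the whole configuration; rejects empty sets and sets that
    // exceed the limit, leaving the previous configuration untouched.
    bool configure(const std::map<int, StreamConfig>& streams) {
        if (streams.empty() || streams.size() > kMaxConfiguredStreams) {
            return false;
        }
        streams_ = streams;
        return true;
    }

    // A request may target only streams from the configured set.
    bool canTarget(const std::vector<int>& streamIds) const {
        if (streamIds.empty()) return false;
        for (int id : streamIds) {
            if (streams_.count(id) == 0) return false;
        }
        return true;
    }

private:
    std::map<int, StreamConfig> streams_;  // stream ID -> fixed resolution
};
```

Changing a stream's resolution in this model requires calling configure() again with a new set, which reflects the idea that each surface has a fixed resolution for as long as the configuration is active.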

A request contains all desired capture settings and the list of output surfaces to push image buffers into for this request (out of the total configured set). A request can be one-shot (with capture()), or it may be repeated indefinitely (with setRepeatingRequest()). Captures have priority over repeating requests.

Figure 1. Camera core operation model
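The priority of one-shot captures over a repeating request can be illustrated with a simple scheduler. This is a conceptual sketch, not the camera2 or HAL implementation: the class RequestScheduler and its methods are hypothetical, and requests are represented as plain strings. Its capture() and setRepeatingRequest() methods only borrow the names of the camera2 calls mentioned above.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <string>

// Hypothetical scheduler (C++17): pending one-shot captures are always
// submitted before the repeating request, which fills every otherwise idle frame.
class RequestScheduler {
public:
    void capture(const std::string& request) { oneShot_.push_back(request); }
    void setRepeatingRequest(const std::string& request) { repeating_ = request; }
    void stopRepeating() { repeating_.reset(); }

    // Returns the request to submit for the next frame, if any.
    std::optional<std::string> next() {
        if (!oneShot_.empty()) {
            std::string r = oneShot_.front();
            oneShot_.pop_front();
            return r;
        }
        return repeating_;  // reused every frame until replaced or stopped
    }

private:
    std::deque<std::string> oneShot_;       // FIFO of pending one-shot captures
    std::optional<std::string> repeating_;  // at most one repeating request
};
```

For example, with a repeating "preview" request active, a single capture("still") produces the frame sequence preview, still, preview: the still capture preempts exactly one repeating frame.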

Camera HAL1 overview

Version 1 of the camera subsystem was designed as a black box with high-level controls and the following three operating modes:

- Preview

- Video Record

- Still Capture

Each mode has slightly different and overlapping capabilities. This made it hard to implement new features such as burst mode, which falls between two of the operating modes.

Figure 2. Camera components

Android 7.0 continues to support camera HAL1, as many devices still rely on it. In addition, the Android camera service supports implementing both HALs (1 and 3), which is useful when you want to support a less-capable front-facing camera with camera HAL1 and a more advanced back-facing camera with camera HAL3.

There is a single camera HAL module (with its own version number), which lists multiple independent camera devices that each have their own version number. Camera module version 2 or newer is required to support camera device version 2 or newer, and such camera modules can have a mix of camera device versions (this is what we mean when we say Android supports implementing both HALs).
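The module/device versioning rule can be expressed as a small validity check. This is a hypothetical sketch: CameraModule and isValidModule are illustrative names, and versions are reduced to major numbers rather than the encoded version constants that real camera HAL headers use.

```cpp
#include <cassert>
#include <vector>

// Hypothetical model: a single module with its own version lists several
// camera devices, each with its own (major) version.
struct CameraModule {
    int majorVersion;
    std::vector<int> deviceMajorVersions;  // one entry per camera device
};

// A module below version 2 may expose only version 1 devices; a module at
// version 2 or newer may mix device versions (for example, HAL1 and HAL3).
bool isValidModule(const CameraModule& module) {
    for (int deviceVersion : module.deviceMajorVersions) {
        if (deviceVersion >= 2 && module.majorVersion < 2) {
            return false;
        }
    }
    return true;
}
```

Under this model, a version 2 module listing a version 1 front camera and a version 3 back camera is valid, matching the mixed-HAL setup described above.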