Android 13 introduces a standard way for OEMs to support spatial audio and head tracking without the need for vendor-specific customizations or SDKs.

Spatial audio is a technology used to create a sound field surrounding the listener. Spatial audio enables users to perceive channels and individual sounds in positions that differ from the physical positions of the transducers of the audio device used for playback. For instance, spatial audio offers the user the ability to listen to a multichannel soundtrack over headphones. Using spatial audio, headphone users can perceive dialogue in front of them, and surround effects behind them, despite having only two transducers for playback.

Head tracking helps the user understand the nature of the spatialized sound stage being simulated around their head. This experience is effective only when the latency is low, where latency is measured as the time between when the user moves their head and the time they hear the virtual speaker position moving accordingly.
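As a rough illustration of why latency matters, the following minimal sketch (plain Python, not Android framework code) computes the direction at which a virtual speaker would be rendered relative to the listener's head, and the angular error caused by applying a head pose that is stale by the round-trip latency. The function names and the convention of yaw-only angles in degrees are assumptions made for this example.

```python
def relative_azimuth_deg(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Direction of a room-fixed virtual speaker relative to the head.

    Both angles are yaw angles in degrees (an assumed convention).
    The result is wrapped to the range [-180, 180).
    """
    relative = source_azimuth_deg - head_yaw_deg
    return (relative + 180.0) % 360.0 - 180.0


def stale_pose_error_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Angular error when the renderer uses a pose that is latency_ms old.

    Assumes the head turns at a constant speed during the latency interval.
    """
    return head_speed_deg_per_s * latency_ms / 1000.0
```

For example, if the listener turns 30 degrees toward a speaker that was straight ahead, `relative_azimuth_deg(0.0, 30.0)` gives -30.0, so the speaker is rendered 30 degrees to the other side and appears to stay in place. With a head turning at 100 degrees per second and 150 ms of latency, the rendered position lags by 15 degrees.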

Android 13 optimizes for spatial audio and head tracking by offering spatial audio processing at the lowest possible level in the audio pipeline to obtain the lowest possible latency.

Architecture

The modified Android audio framework and API in Android 13 facilitate the adoption of spatial audio technology across the ecosystem.

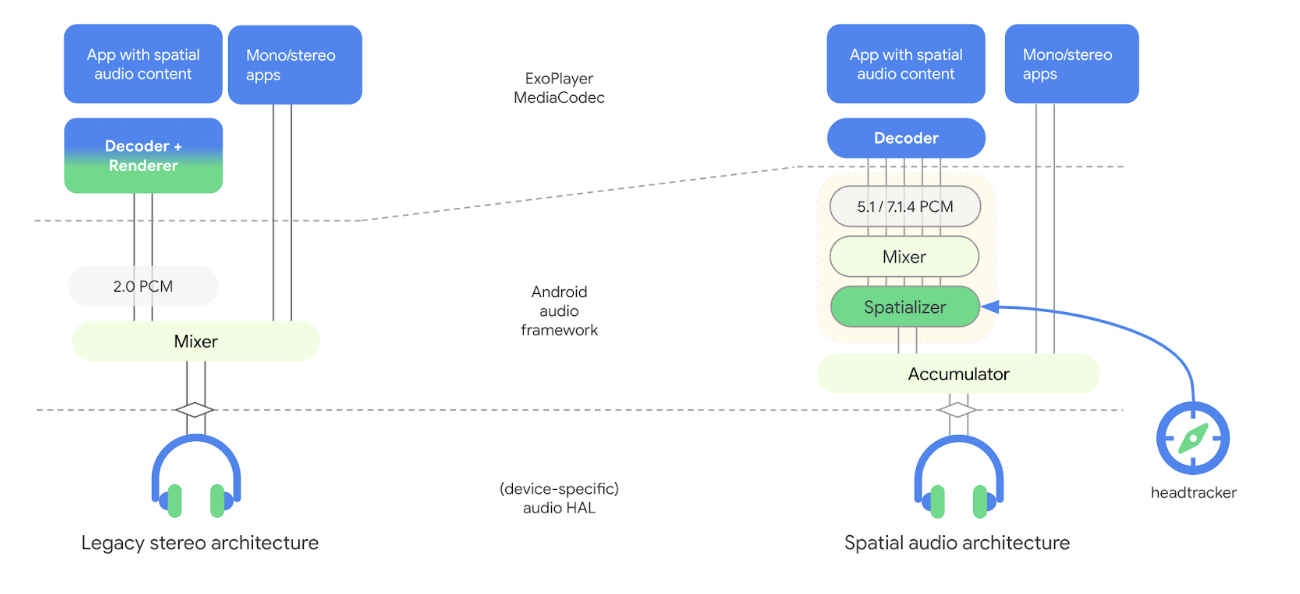

The following figure illustrates the spatial audio related changes made to the audio pipeline architecture with Android 13:

Figure 1. Audio pipeline architecture with spatializer

In the new model, the spatializer is part of the audio framework and is decoupled from the decoder. The spatializer takes in mixed audio content and renders a stereo stream to the Audio HAL. Decoupling the spatializer from the decoder enables OEMs to choose different vendors for the decoder and spatializer and to achieve the desired round-trip latency for head tracking. This new model also includes hooks to the sensor framework for head tracking.

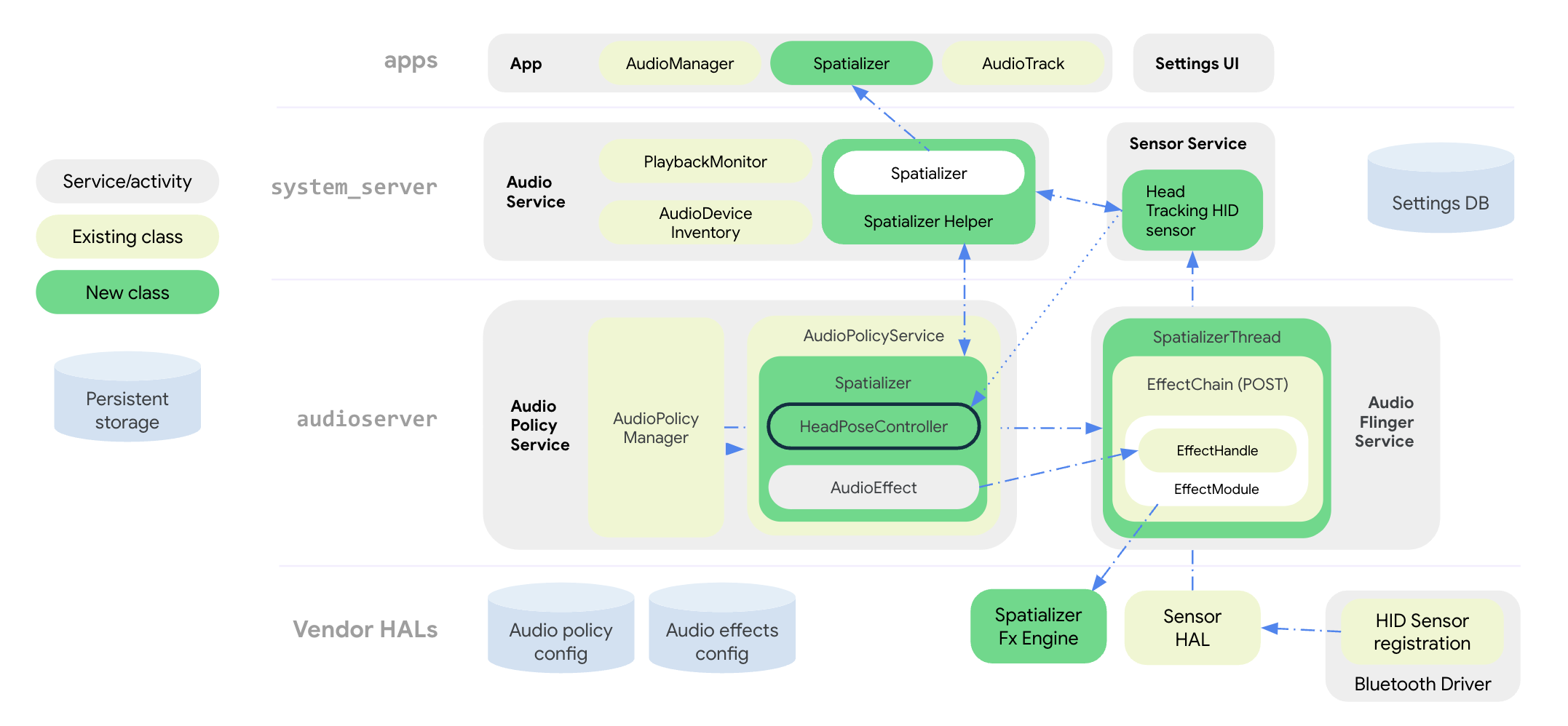

The following figure illustrates the system architecture of the audio framework for the spatializer and head tracking effect:

Figure 2. System architecture with spatializer and head tracking

All spatial audio APIs are grouped in the public Spatializer class at the app level. The SpatializerHelper class in audio service interfaces with the System UI components to manage spatializer-related functionality based on the platform and connected device capabilities. The new Spatializer class in the audio policy service creates and controls the spatial audio graph needed for multichannel mixing and spatialization based on capabilities expressed by the OEM, the connected devices, and active use cases. A new mixer class, SpatializerThread, mixes multichannel tracks and feeds the resulting mix to a post-processing FX engine that renders a stereo output to the Audio HAL. For head tracking, the SpatializerPoseController class groups the head tracking functions that interface with the sensor stack and that merge and filter the sensor signals fed to the effect engine. Head tracking sensor data is carried over the HID protocol from the Bluetooth driver.

Changes to the Android 13 audio pipeline architecture improve on the following:

- Lowering latency between the spatializer and headphones.

- Providing unified APIs to serve app developers.

- Controlling the head tracking state through system APIs.

- Discovering head tracking sensors and associating them with active audio devices.

- Merging signals from various sensors and computing the head pose that can be consumed by the spatializer effect engine.

Functions like bias compensation, stillness detection, and rate limitation can be implemented using the head tracking utility library.
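To give a feel for what two of these functions do, here is a minimal sketch of rate limitation and stillness detection on a stream of yaw samples. It is written in plain Python for illustration and is not the head tracking utility library's implementation; the class names, the yaw-only model, and the thresholds are all assumptions, and angle wrap-around is ignored for brevity.

```python
from collections import deque


class YawRateLimiter:
    """Limit how fast the reported yaw may change (degrees per second)."""

    def __init__(self, max_rate_deg_per_s: float):
        self.max_rate_deg_per_s = max_rate_deg_per_s
        self._last = None  # last yaw value handed to the renderer

    def update(self, yaw_deg: float, dt_s: float) -> float:
        if self._last is None:
            # First sample: nothing to limit against yet.
            self._last = yaw_deg
            return yaw_deg
        max_step = self.max_rate_deg_per_s * dt_s
        # Clamp the requested change to [-max_step, +max_step].
        step = max(-max_step, min(max_step, yaw_deg - self._last))
        self._last += step
        return self._last


class StillnessDetector:
    """Report stillness when recent samples stay within a small range."""

    def __init__(self, window: int, threshold_deg: float):
        self._samples = deque(maxlen=window)
        self.threshold_deg = threshold_deg

    def update(self, yaw_deg: float) -> bool:
        self._samples.append(yaw_deg)
        if len(self._samples) < self._samples.maxlen:
            return False  # not enough history to decide
        spread = max(self._samples) - min(self._samples)
        return spread <= self.threshold_deg
```

In this sketch, a limiter created with `YawRateLimiter(10.0)` and fed samples 0.5 s apart lets the output move by at most 5 degrees per sample, which smooths out sudden jumps in the sensor signal.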

Spatial audio APIs

Android 13 offers spatial audio system and developer APIs.

OEMs can adapt app behavior based on feature availability and enabled state, which is set by system APIs. Apps can also configure audio attributes to disable spatial audio for aesthetic reasons or to indicate that the audio stream is already processed for spatial audio.

For developer-facing APIs, see Spatializer.

OEMs can use system APIs to implement the Sounds and Bluetooth settings UI, which enables the user to control the state of the spatial audio and the head tracking feature for their device. The user can enable or disable spatial audio for the speaker and wired headphones in the Sounds settings UI. The spatial audio setting for the speaker is available only if the spatializer effect implementation supports transaural mode.

The user can also enable or disable spatial audio and head tracking in the Bluetooth device setting for each device. The head tracking setting is available only if the Bluetooth headset exposes a head tracking sensor.
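The availability rules for these settings can be summarized in a small decision table. The sketch below models them in plain Python; the `Capabilities` type, the setting keys, and the assumption that every setting is hidden when the platform has no spatializer are hypothetical and only illustrate the rules stated above, not the actual Settings implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    spatial_audio_supported: bool   # platform provides a spatializer effect
    transaural_supported: bool      # effect can render over the speaker
    headset_has_head_tracker: bool  # Bluetooth headset exposes a sensor


def available_settings(caps: Capabilities) -> dict:
    """Return which user-facing toggles would be shown (hypothetical keys)."""
    supported = caps.spatial_audio_supported
    return {
        "sound.wired_headphones.spatial_audio": supported,
        "sound.speaker.spatial_audio": supported and caps.transaural_supported,
        "bluetooth.device.spatial_audio": supported,
        "bluetooth.device.head_tracking": supported
        and caps.headset_has_head_tracker,
    }
```

For a platform that supports spatial audio without transaural mode, paired with a headset that has a head tracking sensor, this model shows every toggle except the speaker one.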

The default setting for spatial audio is always ON if the feature is supported. See Spatializer.java for a complete list of system APIs.

The new head tracking sensor type Sensor.TYPE_HEAD_TRACKER is added to the Sensor framework and exposed by the Sensor HAL as a dynamic sensor over Bluetooth or USB.

Integrate spatial audio

Along with implementing the spatializer effect engine, OEMs must configure their platform for spatial audio support.

Requirements

The following requirements must be met in order to integrate spatial audio:

- The Audio HAL and audio DSP must support a dedicated output path for spatial audio.

- For spatial audio with head tracking, headphones must have built-in head tracker sensors.

- The implementation must conform to the proposed standard for head tracking over the HID protocol from a Bluetooth headset to a phone.

- Audio HAL v7.1 is needed for spatial audio support.

Integrate spatial audio using the following steps:

1. Declare spatial audio support in your device.mk file, as follows:

       PRODUCT_PROPERTY_OVERRIDES += \
            ro.audio.spatializer_enabled=true

   This causes AudioService to initialize spatializer support.

2. Declare dedicated output for the spatial audio mix in the audio_policy_configuration.xml, as follows:

       <audioPolicyConfiguration>
         <modules>
          <module>
            <mixPorts>
            <mixPort name="spatializer" role="source" flags="AUDIO_OUTPUT_FLAG_SPATIALIZER">
              <profile name="sa" format="AUDIO_FORMAT_PCM_FLOAT"
                samplingRates="48000" channelMasks="AUDIO_CHANNEL_OUT_STEREO"/>

3. Declare the spatializer effect library in audio_effects.xml, as follows:

       <audio_effects_conf>
         <libraries>
            <library name="spatializer_lib" path="libMySpatializer.so"/>
             …
         </libraries>
         <effects>
          <effect name="spatializer" library="spatializer_lib" uuid="myunique-uuid-formy-spatializereffect"/>

4. Vendors implementing the spatializer effect must conform to the following:

   - Basic configuration and control identical to other effects in Effect HAL.
   - Specific parameters needed for the framework to discover supported capabilities and configuration, such as:
     - SPATIALIZER_PARAM_SUPPORTED_LEVELS
     - SPATIALIZER_PARAM_LEVEL
     - SPATIALIZER_PARAM_HEADTRACKING_SUPPORTED
     - SPATIALIZER_PARAM_HEADTRACKING_MODE
     - SPATIALIZER_PARAM_SUPPORTED_CHANNEL_MASKS
     - SPATIALIZER_PARAM_SUPPORTED_SPATIALIZATION_MODES
     - SPATIALIZER_PARAM_HEAD_TO_STAGE

   See effect_spatializer.h for more information.
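Before building an image, it can be useful to check that the audio policy configuration really declares a spatializer output. The following host-side sketch uses Python's standard `xml.etree.ElementTree` module to list the mixPort elements that carry the AUDIO_OUTPUT_FLAG_SPATIALIZER flag. The helper name `find_spatializer_mix_ports` and the example XML (completed with closing tags so that it parses) are assumptions for illustration; the sketch also assumes that multiple flags are separated by `|` or whitespace, and it does not resolve included files.

```python
import xml.etree.ElementTree as ET

SPATIALIZER_FLAG = "AUDIO_OUTPUT_FLAG_SPATIALIZER"


def find_spatializer_mix_ports(xml_text: str) -> list:
    """Return the names of mixPort elements that declare the spatializer flag."""
    root = ET.fromstring(xml_text.strip())
    names = []
    for mix_port in root.iter("mixPort"):
        # Accept both "A|B" and "A B" flag lists (assumed separators).
        flags = mix_port.get("flags", "").replace("|", " ").split()
        if SPATIALIZER_FLAG in flags:
            names.append(mix_port.get("name"))
    return names


# Minimal, well-formed example based on the mixPort declaration above.
EXAMPLE_CONFIG = """
<audioPolicyConfiguration>
  <modules>
    <module>
      <mixPorts>
        <mixPort name="spatializer" role="source"
                 flags="AUDIO_OUTPUT_FLAG_SPATIALIZER">
          <profile name="sa" format="AUDIO_FORMAT_PCM_FLOAT"
                   samplingRates="48000"
                   channelMasks="AUDIO_CHANNEL_OUT_STEREO"/>
        </mixPort>
      </mixPorts>
    </module>
  </modules>
</audioPolicyConfiguration>
"""

if __name__ == "__main__":
    print(find_spatializer_mix_ports(EXAMPLE_CONFIG))
```

An empty result from such a check would suggest that the dedicated output for the spatial audio mix is missing from the file being inspected.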

Recommendations

We recommend that OEMs use the following guidelines during implementation:

- Use LE audio when available to ease interoperability and achieve latency goals.

- Round-trip latency, from sensor movement detection to audio received by the headphones, must be less than 150 ms for good UX.

- For Bluetooth (BT) Classic with Advanced Audio Distribution Profile (A2DP):

Validation

To validate the functionality of the spatial audio feature, use the CTS tests available in SpatializerTest.java.

A poor implementation of the spatialization or head tracking algorithms can cause the device to miss the round-trip latency recommendation listed in the Recommendations section.
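One simple way to reason about the round-trip latency recommendation is to add up a per-stage budget and compare it with the 150 ms limit. The sketch below does this in plain Python. The stage names and the numbers in the example are made-up placeholders, not measurements or values from any specification; only the 150 ms limit comes from the Recommendations section.

```python
RECOMMENDED_MAX_ROUND_TRIP_MS = 150


def round_trip_latency_ms(stage_latencies_ms: dict) -> float:
    """Sum the latency contributed by each stage of the head tracking loop."""
    return sum(stage_latencies_ms.values())


def meets_recommendation(stage_latencies_ms: dict,
                         limit_ms: float = RECOMMENDED_MAX_ROUND_TRIP_MS) -> bool:
    """True when the total is strictly below the recommended limit."""
    return round_trip_latency_ms(stage_latencies_ms) < limit_ms


# Hypothetical budget with placeholder values in milliseconds.
EXAMPLE_BUDGET_MS = {
    "sensor_to_phone": 40,
    "pose_processing": 5,
    "spatializer_render": 20,
    "audio_link_to_headphones": 70,
}

if __name__ == "__main__":
    total = round_trip_latency_ms(EXAMPLE_BUDGET_MS)
    print(total, meets_recommendation(EXAMPLE_BUDGET_MS))
```

Breaking the total into stages in this way makes it easier to see which part of the pipeline to optimize when a measured round trip exceeds the limit.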