Android 13 introduces support for spatial audio by providing APIs that let app developers discover whether the current combination of phone implementation, connected headset, and user settings allows playback of multichannel audio content in an immersive manner.

OEMs can provide a spatializer audio effect that supports head tracking with the required level of performance and latency, using the new audio pipeline architecture and sensor framework integration. The HID protocol specifies how to attach a head-tracking device over Bluetooth and make it available as a HID device through the Android sensor framework. See Spatial Audio and Head Tracking for more requirements and validation.

The guidelines on this page apply to a spatial audio solution that adopts the new spatial audio APIs and audio architecture, with an Android phone running Android 13 or higher and compatible headsets that have a head-tracking sensor.

Guidelines for implementation of dynamic and static spatial audio modes

Static spatial audio doesn't require head tracking, so specific functionality isn't required in the headset. All wired and wireless headsets can support static spatial audio.

API implementation

OEMs MUST implement the Spatializer class introduced in Android 12. The implementation must pass the CTS tests introduced for the Spatializer class.

A robust API implementation ensures that app developers, in particular media streaming services, can rely on consistent behavior across the ecosystem and pick the best content according to device capabilities, current rendering context, and user choices.
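To illustrate the kind of decision a streaming app makes with these signals, here is a minimal Python sketch, assuming a title is offered as either a 5.1 or a stereo rendition. On a device the three booleans would come from `Spatializer.isAvailable()`, `Spatializer.isEnabled()`, and `Spatializer.canBeSpatialized()`; the class `SpatializerState`, the function `pick_stream_variant`, and the variant labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpatializerState:
    """Snapshot of what an app would learn from the Spatializer class."""

    available: bool           # Spatializer.isAvailable()
    enabled: bool             # Spatializer.isEnabled()
    can_spatialize_5_1: bool  # Spatializer.canBeSpatialized() for a 5.1 format


def pick_stream_variant(state: SpatializerState) -> str:
    """Hypothetical helper: choose which rendition of a title to request."""
    if state.available and state.enabled and state.can_spatialize_5_1:
        return "multichannel-5.1"
    # Any failed condition (feature switched off, current device can't
    # spatialize, format unsupported) makes stereo the safer choice.
    return "stereo"


print(pick_stream_variant(SpatializerState(True, True, True)))   # multichannel-5.1
print(pick_stream_variant(SpatializerState(True, False, True)))  # stereo
```

Because availability and the user's setting can change during playback, an app would typically re-run this check from a `Spatializer.OnSpatializerStateChangedListener` callback rather than only at startup.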

User interface

After implementing the Spatializer class, validate that your UI has the following behavior:

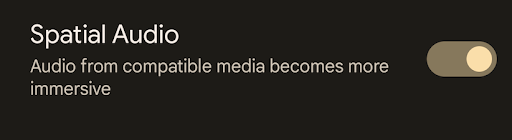

When the spatial audio capable headset is paired, the Bluetooth device settings for this headset displays a Spatial Audio toggle:

Figure 1. Spatial audio setting.

These settings remain available even when the headset is disconnected.

After the headset is first paired, spatial audio is enabled by default.

The user-selected state, whether enabled or disabled, persists through a phone reboot and through unpairing and re-pairing the headset.
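One way to satisfy the default and persistence rules is to key the stored choice by headset and keep it on disk. The following Python sketch assumes a JSON file keyed by Bluetooth address. The class `SpatialAudioSettings` and the file format are hypothetical and are not how Android Settings actually stores this value.

```python
import json
import tempfile
from pathlib import Path


class SpatialAudioSettings:
    """Hypothetical per-headset store for the Spatial Audio toggle.

    Entries are keyed by the headset's Bluetooth address and written to disk,
    so the user's choice survives a reboot. Unpairing does not delete the
    entry, so re-pairing the same headset restores the previous choice.
    """

    DEFAULT_ENABLED = True  # spatial audio starts enabled after first pairing

    def __init__(self, path: Path):
        self._path = path
        self._state = json.loads(path.read_text()) if path.exists() else {}

    def is_enabled(self, address: str) -> bool:
        return self._state.get(address, self.DEFAULT_ENABLED)

    def set_enabled(self, address: str, enabled: bool) -> None:
        self._state[address] = enabled
        self._path.write_text(json.dumps(self._state))


with tempfile.TemporaryDirectory() as tmp:
    store_path = Path(tmp) / "spatial_audio.json"
    headset = "00:11:22:33:44:55"
    settings = SpatialAudioSettings(store_path)
    print(settings.is_enabled(headset))  # True (default)
    settings.set_enabled(headset, False)
    # A fresh instance reading the same file stands in for a reboot.
    print(SpatialAudioSettings(store_path).is_enabled(headset))  # False
```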

Functional behavior

Audio formats

The following audio formats MUST be rendered by the spatializer effect when spatial audio is enabled and the rendering device is a wired or Bluetooth headset:

- AAC, 5.1 channels

- Raw PCM, 5.1 channels

For a better user experience, we strongly recommend supporting the following formats/channel configurations:

- Dolby Digital Plus

- 5.1.2, 7.1, 7.1.2, 7.1.4 channels
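A validation script could encode the two lists above as a checklist for content played to a headset while spatial audio is enabled. The following Python sketch uses codec labels that are hypothetical strings, not Android format constants. The channel count of an "X.Y.Z" layout is simply the sum of its parts, for example 7.1.4 has 7 + 1 + 4 = 12 channels.

```python
# Required and recommended inputs from the lists above; the codec labels are
# hypothetical strings, not Android constants.
REQUIRED = {("aac", "5.1"), ("pcm", "5.1")}
RECOMMENDED_CODECS = {"dolby-digital-plus"}
RECOMMENDED_LAYOUTS = {"5.1.2", "7.1", "7.1.2", "7.1.4"}


def channel_count(layout: str) -> int:
    """Total channels in an 'X.Y' or 'X.Y.Z' layout, e.g. 7.1.4 -> 12."""
    return sum(int(part) for part in layout.split("."))


def spatializer_support_level(codec: str, layout: str) -> str:
    """Classify a (codec, layout) pair against the lists above."""
    if (codec, layout) in REQUIRED:
        return "required"
    if codec in RECOMMENDED_CODECS or layout in RECOMMENDED_LAYOUTS:
        return "recommended"
    return "not covered"


for codec, layout in [("aac", "5.1"), ("pcm", "7.1.4"), ("dolby-digital-plus", "5.1")]:
    print(codec, layout, channel_count(layout), spatializer_support_level(codec, layout))
```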

Stereo content playback

Stereo content must not be rendered through the spatializer effect engine, even if spatial audio is enabled. If an implementation supports stereo content spatialization, it must present a custom UI that lets the user easily turn this feature on or off. When spatial audio is enabled, it must be possible to transition from playing spatialized multichannel content to playing nonspatialized stereo content without any change in user settings and without reconnecting or reconfiguring the headset. The transition between spatial audio content and stereo content must occur with minimal audio disruption.
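The rule can be read as a per-stream routing decision. The user setting stays fixed, and only the content's channel count changes the path. The following Python sketch is a minimal illustration in which `route_stream` and the `"spatializer"`/`"direct"` labels are hypothetical, and `stereo_spatialization_on` stands for the optional OEM toggle described above.

```python
def route_stream(channel_count: int, spatial_audio_enabled: bool,
                 stereo_spatialization_on: bool = False) -> str:
    """Hypothetical per-stream routing decision.

    stereo_spatialization_on models the optional OEM toggle for spatializing
    stereo; it only matters if the implementation offers that feature.
    """
    if spatial_audio_enabled and channel_count > 2:
        return "spatializer"
    if spatial_audio_enabled and channel_count == 2 and stereo_spatialization_on:
        return "spatializer"
    # Everything else, including stereo by default, bypasses the effect.
    return "direct"


# Switching titles only changes the stream's channel count; the user setting is untouched.
print(route_stream(6, spatial_audio_enabled=True))  # spatializer
print(route_stream(2, spatial_audio_enabled=True))  # direct
```

Deciding per stream, rather than per device, is what allows the multichannel-to-stereo transition without touching settings or the headset connection.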

Use case transitions and concurrency

Handle special use cases as follows:

- Notifications must be mixed with the spatial audio content in the same manner as they are with nonspatial audio content.

- Ringtones must be allowed to be mixed with spatial audio content. However, by default, the audio focus mechanism pauses the spatial audio content when a ringtone plays.

- When the user answers or places a phone call or video conference call, spatial audio playback must pause. Spatial audio playback must resume with the same spatial audio settings when the call ends. Reconfiguring the audio path from spatial audio mode to conversational mode must happen quickly and seamlessly enough that it doesn't affect the call experience.
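These rules can be summarized as a small state model. The following Python sketch is hypothetical: `SpatialPlayback`, its event methods, and the `"spatial"`/`"conversational"` mode names stand in for the real audio policy and audio focus machinery. It also assumes that playback was active when the ringtone or call began, so it always resumes after the call.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SpatialPlayback:
    """Hypothetical model of the concurrency rules above."""

    output_mode: str = "spatial"   # audio path currently configured
    playing: bool = True
    mixed: List[str] = field(default_factory=list)
    _mode_before_call: Optional[str] = field(default=None, repr=False)

    def on_notification(self, sound: str) -> None:
        # Mixed in exactly as it would be over nonspatial content.
        self.mixed.append(sound)

    def on_ringtone(self, sound: str, focus_pauses: bool = True) -> None:
        # Mixing must be possible; by default audio focus pauses the content.
        self.mixed.append(sound)
        if focus_pauses:
            self.playing = False

    def on_call_started(self) -> None:
        self._mode_before_call = self.output_mode
        self.output_mode = "conversational"
        self.playing = False

    def on_call_ended(self) -> None:
        # Resume with the same spatial configuration that was active before.
        if self._mode_before_call is not None:
            self.output_mode = self._mode_before_call
            self._mode_before_call = None
        self.playing = True


session = SpatialPlayback()
session.on_ringtone("ringtone")
session.on_call_started()
session.on_call_ended()
print(session.output_mode, session.playing)  # spatial True
```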

Rendering over speakers

Support for audio spatialization over speakers, or transaural mode, isn't required.

Guidelines for implementation of head tracking

This section focuses on dynamic spatial audio, which has specific headset requirements.

User interface

After implementing head tracking and pairing a spatial-audio-capable headset, validate that your UI has the following behavior:

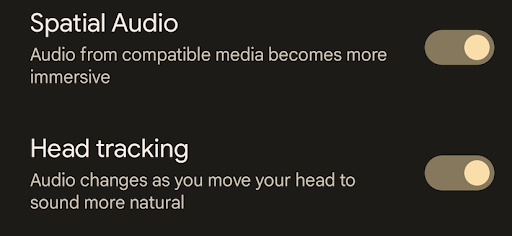

In the Bluetooth device settings, when the Spatial Audio setting for the headset is enabled, a Head tracking setting appears under Spatial Audio:

Figure 2. Spatial audio and head-tracking setting.

The head-tracking setting is NOT visible when spatial audio is disabled.

After the headset is first paired, head tracking is enabled by default.

The user-selected state, whether enabled or disabled, must persist through a phone reboot and through unpairing and re-pairing the headset.
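The dependency between the two toggles can be captured in a few lines. The following Python sketch is hypothetical: the `HeadsetAudioSettings` class is invented for illustration, and it assumes (the text doesn't say) that hiding the Head tracking toggle keeps its stored value rather than resetting it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HeadsetAudioSettings:
    """Hypothetical model of the two toggles in the headset's settings page."""

    spatial_audio: bool = True   # default after first pairing
    head_tracking: bool = True   # default after first pairing

    def visible_toggles(self) -> List[str]:
        toggles = ["Spatial Audio"]
        if self.spatial_audio:
            toggles.append("Head tracking")  # shown only under enabled Spatial Audio
        return toggles

    def head_tracking_active(self) -> bool:
        # Assumption: hiding the toggle keeps the stored head-tracking choice,
        # but head tracking has no effect while spatial audio is off.
        return self.spatial_audio and self.head_tracking


s = HeadsetAudioSettings()
s.spatial_audio = False
print(s.visible_toggles(), s.head_tracking_active())  # ['Spatial Audio'] False
```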

Functional behavior

Head pose reporting

- Head pose information, in x, y, and z coordinates, sent from the headset to the Android device, must reflect the user's head movements quickly and accurately.

- Head pose reporting over the Bluetooth link must follow the protocol defined over HID.

- The headset must send the head-tracking information to the Android phone only when the user enables Head tracking in the Bluetooth device setting UI.

Performance

Latency

Head-tracking latency is defined as the time from when the inertial measurement unit (IMU) captures a head motion to when the resulting change in sound is detected at the headphone transducers. Head-tracking latency must not exceed 150 ms.
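Because the requirement is a ceiling, a validation run should compare the worst measurement, not the average, against 150 ms. The following Python sketch assumes a hypothetical test rig that records, in nanoseconds, when the IMU captured each motion and when the resulting acoustic change was detected. The function names and sample timestamps are illustrative only and are not measured data.

```python
MAX_HEAD_TRACKING_LATENCY_MS = 150.0


def latency_ms(imu_capture_ns: int, acoustic_change_ns: int) -> float:
    """Motion-to-sound latency for one measurement, in milliseconds."""
    return (acoustic_change_ns - imu_capture_ns) / 1_000_000


def worst_case_latency_ms(measurements):
    """Return the largest latency across (imu_capture_ns, acoustic_change_ns) pairs."""
    return max(latency_ms(start, end) for start, end in measurements)


# Illustrative timestamps only, not measured data.
samples = [(0, 120_000_000), (1_000_000_000, 1_140_000_000)]
worst = worst_case_latency_ms(samples)
print(worst, worst <= MAX_HEAD_TRACKING_LATENCY_MS)  # 140.0 True
```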

Head pose reporting rate

When head tracking is active, the headset must report the head pose periodically, with a recommended period of approximately 20 ms. To avoid triggering the phone's stale input detection logic when Bluetooth transmission jitter occurs, the time between two consecutive updates must never exceed 40 ms.
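A headset vendor could check captured report arrival times against the 40 ms ceiling. Jitter around the recommended 20 ms period is acceptable as long as no single gap exceeds the ceiling. The following Python sketch uses a hypothetical `stale_gaps` function and illustrative timestamps, not measured data.

```python
MAX_GAP_MS = 40


def stale_gaps(report_times_ms):
    """Return (previous, current, gap) for each gap longer than MAX_GAP_MS."""
    return [
        (prev, cur, cur - prev)
        for prev, cur in zip(report_times_ms, report_times_ms[1:])
        if cur - prev > MAX_GAP_MS
    ]


# Illustrative arrival times with some Bluetooth jitter; one gap is too long.
arrivals = [0, 20, 41, 60, 105, 125]
print(stale_gaps(arrivals))  # [(60, 105, 45)]
```

A gap of exactly 40 ms is treated as compliant here, because the requirement says the interval must not *exceed* 40 ms.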

Power optimization

To optimize power, we recommend that the implementation use the Bluetooth codec switching and latency mode selection mechanisms provided by the audio HAL and Bluetooth audio HAL interfaces.

The AOSP implementations of the audio framework and Bluetooth stack already support the signals to control codec switching. If the OEM’s implementation uses the primary audio HAL for Bluetooth audio, known as codec offload mode, the OEM must ensure that the audio HAL relays those signals between the audio HAL and the Bluetooth stack.

Codec switching

When dynamic spatial audio and head tracking are on, use a low-latency codec, such as Opus. When playing nonspatial audio content, use a low-power codec, such as Advanced Audio Coding (AAC).

Follow these rules during codec switching:

- Track only the activity on the following audio HAL output streams:

  - Dedicated spatializer output

  - Media-specific streams, such as deep buffer or compressed offload playback

- When all relevant streams are idle and the spatializer stream starts, start the Bluetooth stream with `isLowLatency` set to `true` to specify a low-latency codec.

- When all relevant streams are idle and a media stream starts, start the Bluetooth stream with `isLowLatency` set to `false` to specify a low-power codec.

- If a media stream is active and the spatializer stream starts, restart the Bluetooth stream with `isLowLatency` set to `true`.
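The three start rules above form a small state machine. The following Python sketch models them. `BluetoothCodecSelector`, `Stream`, and the `restarts` counter are hypothetical and are not part of the audio HAL or Bluetooth audio HAL. `low_latency` mirrors the `isLowLatency` value the Bluetooth stream was last started with.

```python
from enum import Enum
from typing import Optional, Set


class Stream(Enum):
    SPATIALIZER = "dedicated spatializer output"
    MEDIA = "media stream (deep buffer or compressed offload)"


class BluetoothCodecSelector:
    """Hypothetical model of the codec-switching rules; not a real HAL API.

    low_latency mirrors the isLowLatency value the Bluetooth stream was last
    (re)started with; None means the Bluetooth stream is stopped. Only one
    instance of each tracked stream type is modeled, and other output
    streams are ignored, as the first rule requires.
    """

    def __init__(self) -> None:
        self.active: Set[Stream] = set()
        self.low_latency: Optional[bool] = None
        self.restarts = 0

    def on_stream_start(self, stream: Stream) -> None:
        if not self.active:
            # All relevant streams idle: the first stream picks the codec.
            self.low_latency = stream is Stream.SPATIALIZER
        elif stream is Stream.SPATIALIZER and not self.low_latency:
            # Media is playing on the low-power codec: restart with low latency.
            self.low_latency = True
            self.restarts += 1
        self.active.add(stream)

    def on_stream_stop(self, stream: Stream) -> None:
        self.active.discard(stream)
        if not self.active:
            self.low_latency = None
        # The rules above don't say what happens when the spatializer stops
        # while media continues, so this sketch leaves the codec unchanged.


selector = BluetoothCodecSelector()
selector.on_stream_start(Stream.MEDIA)
print(selector.low_latency)  # False: low-power codec such as AAC
selector.on_stream_start(Stream.SPATIALIZER)
print(selector.low_latency, selector.restarts)  # True 1
```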

On the headset side, the headset must support both the low-latency and low-power decoders and implement the standard codec selection protocol.

Latency mode adjustment

Latency mode adjustment occurs when the low-latency codec is selected.

Based on whether head tracking is on or off, latency mode adjustment uses available mechanisms to reduce or increase the latency to reach the best compromise between latency, power, and audio quality. When spatial audio is enabled and head tracking is enabled, the low-latency mode is chosen. When spatial audio is enabled and head tracking is disabled, the free-latency mode is selected. Latency adjustment provides significant power savings and increased robustness of the Bluetooth audio link when only static spatial audio is requested. The most common latency adjustment mechanism is the reduction or extension of the jitter buffer size in the Bluetooth headset.
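The following Python sketch shows how these conditions map to a latency mode. The function `select_latency_mode` and the `"low"`/`"free"` strings are hypothetical labels for the low-latency and free-latency modes described above. Returning `None` marks cases this page doesn't describe. How a headset acts on each mode, for example by resizing its jitter buffer, is left to the implementation.

```python
from typing import Optional


def select_latency_mode(low_latency_codec: bool, spatial_audio: bool,
                        head_tracking: bool) -> Optional[str]:
    """Hypothetical mapping of the rules above to a latency mode name."""
    if not low_latency_codec or not spatial_audio:
        return None  # latency mode adjustment isn't described for these cases
    # Head tracking needs a short motion-to-sound path; static spatial audio
    # can trade latency for power and link robustness.
    return "low" if head_tracking else "free"


print(select_latency_mode(True, True, True))   # low
print(select_latency_mode(True, True, False))  # free
```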

See Head tracking over LE audio for latency mode adjustments for LE audio.