Android 9 introduced API support for multi-camera devices through a new logical camera device composed of two or more physical camera devices pointing in the same direction. The logical camera device is exposed to an app as a single CameraDevice/CaptureSession, allowing the app to interact with HAL-integrated multi-camera features. Apps can optionally access and control underlying physical camera streams, metadata, and controls.

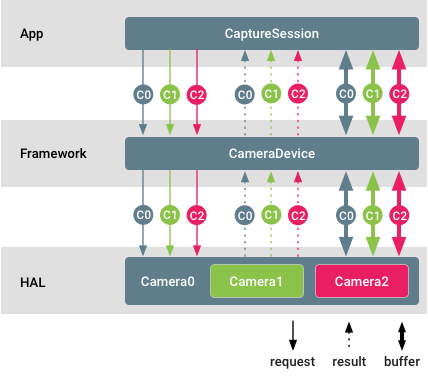

Figure 1. Multi-camera support

In this diagram, different camera IDs are color coded. The app can stream raw buffers from each physical camera at the same time. It is also possible to set separate controls and receive separate metadata from different physical cameras.

Examples and sources

Multi-camera devices must be advertised with the logical multi-camera capability.

Camera clients can query the IDs of the physical cameras that make up a particular logical camera by calling getPhysicalCameraIds().

The IDs returned as part of the result are then used to control physical devices individually through setPhysicalCameraId().

The results from such individual requests can be queried from the complete result by invoking getPhysicalCameraResults().

Individual physical camera requests may support only a limited subset of parameters. To receive a list of the supported parameters, developers can call getAvailablePhysicalCameraRequestKeys().
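Because individual physical requests may support only a subset of parameters, a client has to filter what it sends to each physical camera. The Python sketch below models that filtering step outside of Android. It isn't the Camera2 API: `split_physical_settings` is a hypothetical helper, the ID list stands in for the result of getPhysicalCameraIds(), and the key set stands in for getAvailablePhysicalCameraRequestKeys(). The IDs and key set in the example are made up.

```python
from typing import Dict, Iterable, Set, Tuple

Settings = Dict[str, object]


def split_physical_settings(
    physical_ids: Iterable[str],
    supported_keys: Set[str],
    requested: Dict[str, Settings],
) -> Tuple[Dict[str, Settings], Dict[str, Set[str]]]:
    """Keep only the per-physical-camera settings the logical camera accepts.

    physical_ids   -- stand-in for the result of getPhysicalCameraIds()
    supported_keys -- stand-in for getAvailablePhysicalCameraRequestKeys()
                      (may be empty if individual requests aren't supported)
    requested      -- physical camera ID -> {key name: value} the app wants
    Returns (accepted settings per ID, rejected key names per ID).
    """
    valid_ids = set(physical_ids)
    accepted: Dict[str, Settings] = {}
    rejected: Dict[str, Set[str]] = {}
    for cam_id, settings in requested.items():
        if cam_id not in valid_ids:
            raise ValueError(f"{cam_id!r} is not a physical camera of this logical camera")
        accepted[cam_id] = {k: v for k, v in settings.items() if k in supported_keys}
        rejected[cam_id] = {k for k in settings if k not in supported_keys}
    return accepted, rejected


# Example with made-up IDs and a made-up supported-key set.
accepted, rejected = split_physical_settings(
    physical_ids=["2", "3"],
    supported_keys={"android.sensor.exposureTime", "android.sensor.sensitivity"},
    requested={"2": {"android.sensor.exposureTime": 10_000_000,
                     "android.lens.focusDistance": 0.5}},
)
```

Rejecting unknown physical IDs early, rather than silently dropping them, makes a mismatch with the logical camera's physical ID list visible to the caller.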

Physical camera streams are supported only for non-reprocessing requests and only for monochrome and Bayer sensors.

Implementation

Support checklist

To add logical multi-camera devices on the HAL side:

- Add an ANDROID_REQUEST_AVAILABLE_CAPABILITIES_LOGICAL_MULTI_CAMERA capability for any logical camera device backed by two or more physical cameras that are also exposed to an app.

- Populate the static ANDROID_LOGICAL_MULTI_CAMERA_PHYSICAL_IDS metadata field with a list of physical camera IDs.

- Populate the depth-related static metadata required to correlate between physical camera streams' pixels: ANDROID_LENS_POSE_ROTATION, ANDROID_LENS_POSE_TRANSLATION, ANDROID_LENS_INTRINSIC_CALIBRATION, ANDROID_LENS_DISTORTION, ANDROID_LENS_POSE_REFERENCE.

- Set the static ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE metadata field to:
  - ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE_APPROXIMATE: For sensors in main-main mode, no hardware shutter/exposure sync.
  - ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE_CALIBRATED: For sensors in main-secondary mode, hardware shutter/exposure sync.

- Populate ANDROID_REQUEST_AVAILABLE_PHYSICAL_CAMERA_REQUEST_KEYS with a list of supported parameters for individual physical cameras. The list can be empty if the logical device doesn't support individual requests.

- If individual requests are supported, process and apply the individual physicalCameraSettings that can arrive as part of capture requests and append the individual physicalCameraMetadata accordingly.

- For Camera HAL device versions 3.5 (introduced in Android 10) or higher, populate the ANDROID_LOGICAL_MULTI_CAMERA_ACTIVE_PHYSICAL_ID result key using the ID of the current active physical camera backing the logical camera.
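The static parts of this checklist lend themselves to a quick self-check. The following Python sketch models static metadata as plain dictionaries keyed by the tag names above; this dictionary model, the string capability value, and the `checklist_problems` helper are hypothetical stand-ins for the HAL's real metadata buffers and enum values. It also assumes the lens pose and calibration tags are checked on each physical camera's characteristics.

```python
from typing import Dict, List

# Hypothetical model: static metadata as a dict of tag name -> value, standing in for
# the HAL's camera metadata buffers. The tag names come from the checklist above.
LOGICAL_CAP = "ANDROID_REQUEST_AVAILABLE_CAPABILITIES_LOGICAL_MULTI_CAMERA"
LENS_KEYS = (
    "ANDROID_LENS_POSE_ROTATION",
    "ANDROID_LENS_POSE_TRANSLATION",
    "ANDROID_LENS_INTRINSIC_CALIBRATION",
    "ANDROID_LENS_DISTORTION",
    "ANDROID_LENS_POSE_REFERENCE",
)
SYNC_TYPES = {
    "ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE_APPROXIMATE",
    "ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE_CALIBRATED",
}


def checklist_problems(logical: Dict[str, object],
                       physical: Dict[str, Dict[str, object]]) -> List[str]:
    """Return the checklist items the metadata fails; an empty list means all pass."""
    problems: List[str] = []
    if LOGICAL_CAP not in logical.get("ANDROID_REQUEST_AVAILABLE_CAPABILITIES", ()):
        problems.append("missing LOGICAL_MULTI_CAMERA capability")
    ids = list(logical.get("ANDROID_LOGICAL_MULTI_CAMERA_PHYSICAL_IDS", ()))
    if len(ids) < 2:
        problems.append("fewer than two physical camera IDs")
    for cam_id in ids:
        # Assumption: the pose/calibration tags are checked per physical camera.
        missing = [k for k in LENS_KEYS if k not in physical.get(cam_id, {})]
        if missing:
            problems.append(f"camera {cam_id} missing {', '.join(missing)}")
    if logical.get("ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE") not in SYNC_TYPES:
        problems.append("sensor sync type is neither APPROXIMATE nor CALIBRATED")
    # The key list may be empty, but it is expected to be populated.
    if "ANDROID_REQUEST_AVAILABLE_PHYSICAL_CAMERA_REQUEST_KEYS" not in logical:
        problems.append("physical camera request keys not populated")
    return problems


pose = {k: 0.0 for k in LENS_KEYS}  # placeholder values, not real calibration data
logical = {
    "ANDROID_REQUEST_AVAILABLE_CAPABILITIES": [LOGICAL_CAP],
    "ANDROID_LOGICAL_MULTI_CAMERA_PHYSICAL_IDS": ["2", "3"],
    "ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE":
        "ANDROID_LOGICAL_MULTI_CAMERA_SENSOR_SYNC_TYPE_CALIBRATED",
    "ANDROID_REQUEST_AVAILABLE_PHYSICAL_CAMERA_REQUEST_KEYS": [],
}
```

Returning a list of problems instead of stopping at the first failure makes it easier to see every missing item in one pass.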

For devices running Android 9, camera devices must support replacing one logical YUV/RAW stream with physical streams of the same size (doesn't apply to RAW streams) and the same format from two physical cameras. This requirement doesn't apply to devices running Android 10 or higher.

For devices running Android 10 where the camera HAL device version is 3.5 or higher, the camera device must support isStreamCombinationSupported so that apps can query whether a particular stream combination containing physical streams is supported.

Stream configuration map

For a logical camera, the mandatory stream combinations for a camera device of a given hardware level are the same as those required in CameraDevice.createCaptureSession.

All of the streams in the stream configuration map must be logical streams.

For a logical camera device supporting RAW capability with physical sub-cameras of different sizes, if an app configures a logical RAW stream, the logical camera device must not switch to physical sub-cameras with different sensor sizes. This ensures that existing RAW capture apps don't break.

To take advantage of HAL-implemented optical zoom by switching between physical sub-cameras during RAW capture, apps must configure physical sub-camera streams instead of a logical RAW stream.
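The RAW rule constrains how a HAL may switch sub-cameras while zooming. The Python sketch below shows one hypothetical switching policy that honors it: when a logical RAW stream is configured, only sub-cameras with the same sensor size as the active one are eligible. The `SubCamera` type, the per-lens `min_zoom` threshold, the camera IDs, and the sensor sizes are all made up for illustration; real HAL switching logic is implementation specific.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class SubCamera:
    camera_id: str
    min_zoom: float                # hypothetical: zoom ratio at which this lens takes over
    sensor_size: Tuple[int, int]   # pixel array size (width, height)


def pick_sub_camera(cameras: Sequence[SubCamera], active: SubCamera,
                    zoom: float, logical_raw_configured: bool) -> SubCamera:
    """Hypothetical HAL switching policy that respects the logical RAW rule.

    Assumes `active` is one of `cameras`.
    """
    candidates = list(cameras)
    if logical_raw_configured:
        # With a logical RAW stream, only sensors the same size as the active one qualify.
        candidates = [c for c in candidates if c.sensor_size == active.sensor_size]
    eligible = [c for c in candidates if c.min_zoom <= zoom]
    if not eligible:
        # Zoom is below every candidate's range: use the widest remaining lens.
        return min(candidates, key=lambda c: c.min_zoom)
    # Otherwise prefer the most telephoto lens whose range covers the zoom ratio.
    return max(eligible, key=lambda c: c.min_zoom)


ultrawide = SubCamera("2", 0.6, (4000, 3000))
wide = SubCamera("3", 1.0, (4000, 3000))
tele = SubCamera("4", 3.0, (3264, 2448))
cams = [ultrawide, wide, tele]
```

In this example, a zoom ratio of 5.0 selects the telephoto lens only when no logical RAW stream is configured; with a logical RAW stream, the policy stays on the wide lens, which is why apps that want optical zoom during RAW capture would configure physical sub-camera streams instead.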

Guaranteed stream combination

Both the logical camera and its underlying physical cameras must guarantee the mandatory stream combinations required for their device levels.

A logical camera device should operate in the same way as a physical camera device based on its hardware level and capabilities. It's recommended that its feature set is a superset of that of individual physical cameras.

On devices running Android 9, for each guaranteed stream combination, the logical camera must support:

Replacing one logical YUV_420_888 or raw stream with two physical streams of the same size and format, each from a separate physical camera, given that the size and format are supported by the physical cameras.

Adding two raw streams, one from each physical camera, if the logical camera doesn't advertise RAW capability, but the underlying physical cameras do. This usually occurs when the physical cameras have different sensor sizes.

Using physical streams in place of a logical stream of the same size and format. This must not slow down the frame rate of the capture when the minimum frame durations of the physical and logical streams are the same.
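To make the first Android 9 rule concrete, the Python sketch below enumerates the extra combinations it implies for one guaranteed combination: each logical YUV_420_888 or RAW stream may be swapped for two physical streams of the same size and format, one per physical camera, provided both physical cameras support that size and format. The tuple representation, the `replacement_variants` name, and the example sizes and IDs are hypothetical; format names are written as strings rather than real constants.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical representation: (format, (width, height), source), where source is
# "logical" or a physical camera ID.
Stream = Tuple[str, Tuple[int, int], str]
Combination = List[Stream]

REPLACEABLE_FORMATS = ("YUV_420_888", "RAW_SENSOR")


def replacement_variants(combo: Combination, physical_ids: Tuple[str, str],
                         physical_support: Dict[str, Set[Tuple[str, Tuple[int, int]]]]
                         ) -> List[Combination]:
    """Enumerate combinations where one logical YUV_420_888 or RAW stream is replaced
    by two physical streams of the same size and format, one per physical camera."""
    variants: List[Combination] = []
    for i, (fmt, size, source) in enumerate(combo):
        if source != "logical" or fmt not in REPLACEABLE_FORMATS:
            continue
        # The rule only applies if both physical cameras support this size and format.
        if not all((fmt, size) in physical_support.get(p, set()) for p in physical_ids):
            continue
        physical_streams = [(fmt, size, p) for p in physical_ids]
        variants.append(combo[:i] + physical_streams + combo[i + 1:])
    return variants


combo = [("PRIVATE", (1920, 1080), "logical"), ("YUV_420_888", (1280, 720), "logical")]
support = {"2": {("YUV_420_888", (1280, 720))}, "3": {("YUV_420_888", (1280, 720))}}
```

The second rule (adding two raw streams when only the physical cameras advertise RAW) isn't modeled here.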

Performance and power considerations

Performance:

- Configuring and streaming physical streams may slow down the logical camera's capture rate due to resource constraints.

- Applying physical camera settings may slow down the capture rate if the underlying cameras are put into different frame rates.

Power:

- HAL's power optimization continues to work in the default case.

- Configuring or requesting physical streams may override HAL's internal power optimization and incur more power use.

Customization

You can customize your device implementation in the following ways.

- The fused output of the logical camera device depends entirely on the HAL implementation. The decision on how fused logical streams are derived from the physical cameras is transparent to the app and Android camera framework.

- Individual physical requests and results can be optionally supported. The set of available parameters in such requests is also entirely dependent on the specific HAL implementation.

- From Android 10, the HAL can reduce the number of cameras that can be directly opened by an app by electing not to advertise some or all PHYSICAL_IDs in getCameraIdList. Calling getPhysicalCameraCharacteristics must then return the characteristics of the physical camera.
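The distinction between advertised IDs and queryable IDs can be modeled in a few lines. The Python sketch below is a hypothetical registry, not framework code: `camera_id_list` plays the role of getCameraIdList and leaves out hidden physical IDs, while `physical_characteristics` plays the role of getPhysicalCameraCharacteristics and still resolves them. The camera IDs and characteristic values are made up.

```python
from typing import Dict, List, Set


class CameraRegistry:
    """Hypothetical model of advertised IDs versus still-queryable physical IDs."""

    def __init__(self, characteristics: Dict[str, dict], hidden: Set[str]):
        # Every camera, including physical cameras the HAL chooses not to advertise.
        self._characteristics = characteristics
        self._hidden = set(hidden)

    def camera_id_list(self) -> List[str]:
        # Analogous to getCameraIdList: hidden physical IDs are left out.
        return sorted(i for i in self._characteristics if i not in self._hidden)

    def physical_characteristics(self, physical_id: str) -> dict:
        # Analogous to getPhysicalCameraCharacteristics: hidden IDs still resolve.
        return self._characteristics[physical_id]


registry = CameraRegistry(
    {
        "0": {"facing": "back", "physical_ids": ["2", "3"]},  # logical camera
        "1": {"facing": "front"},
        "2": {"focal_length_mm": 2.2},
        "3": {"focal_length_mm": 4.7},
    },
    hidden={"2", "3"},
)
```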

Validation

Logical multi-camera devices must pass camera CTS like any other regular camera.

The test cases that target this type of device can be found in the LogicalCameraDeviceTest module.

These three ITS tests target multi-camera systems to facilitate the proper fusing of images:

- scene1/test_multi_camera_match.py
- scene4/test_multi_camera_alignment.py
- sensor_fusion/test_multi_camera_frame_sync.py

The scene 1 and scene 4 tests run with the ITS-in-a-box test rig. The test_multi_camera_match test asserts that the brightness of the centers of the images matches when both cameras are enabled. The test_multi_camera_alignment test asserts that camera spacings, orientations, and distortion parameters are properly loaded. If the multi-camera system includes a wide field-of-view (FoV) camera (>90°), the rev2 version of the ITS box is required.
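The idea behind the brightness check can be sketched as comparing the mean luma of a centered patch in each camera's image. The Python below is not the code of test_multi_camera_match.py: the patch size (10% of each dimension), the 10% relative tolerance, and the list-of-rows image representation are assumptions chosen for illustration.

```python
from typing import Sequence

Image = Sequence[Sequence[float]]  # grayscale, rows of luma values in [0, 1]


def center_mean(img: Image, fraction: float = 0.1) -> float:
    """Mean luma over a centered patch covering `fraction` of each dimension."""
    h, w = len(img), len(img[0])
    ph, pw = max(1, int(h * fraction)), max(1, int(w * fraction))
    top, left = (h - ph) // 2, (w - pw) // 2
    patch = [v for row in img[top:top + ph] for v in row[left:left + pw]]
    return sum(patch) / len(patch)


def centers_match(a: Image, b: Image, rel_tol: float = 0.1) -> bool:
    # Hypothetical tolerance: centers match if they differ by at most rel_tol of the brighter.
    ma, mb = center_mean(a), center_mean(b)
    return abs(ma - mb) <= rel_tol * max(ma, mb)


flat = [[0.5] * 20 for _ in range(20)]
```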

Sensor_fusion is a second test rig that enables repeated, prescribed phone motion and asserts that the gyroscope and image sensor timestamps match and that the multi-camera frames are in sync.
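The frame-sync part of that check boils down to comparing the sensor timestamps of frames the two physical cameras produce for the same captures. The Python sketch below computes the largest gap between paired timestamps; the 1 ms threshold is a hypothetical value, not the one test_multi_camera_frame_sync.py uses, and the gyroscope comparison isn't modeled.

```python
from typing import Sequence


def max_sync_error_ns(ts_a: Sequence[int], ts_b: Sequence[int]) -> int:
    """Largest gap, in nanoseconds, between timestamps of paired frames."""
    if len(ts_a) != len(ts_b) or not ts_a:
        raise ValueError("need the same, non-zero number of frames from each camera")
    return max(abs(a - b) for a, b in zip(ts_a, ts_b))


def frames_in_sync(ts_a: Sequence[int], ts_b: Sequence[int],
                   tol_ns: int = 1_000_000) -> bool:
    # tol_ns is a hypothetical 1 ms threshold chosen for illustration.
    return max_sync_error_ns(ts_a, ts_b) <= tol_ns
```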

All boxes are available through AcuSpec, Inc. (www.acuspecinc.com, fred@acuspecinc.com) and MYWAY Manufacturing (www.myway.tw, sales@myway.tw). Additionally, the rev1 ITS box can be purchased through West-Mark (www.west-mark.com, dgoodman@west-mark.com).

Best practices

To fully take advantage of features enabled by multi-camera while maintaining app compatibility, follow these best practices when implementing a logical multi-camera device:

- (Android 10 or higher) Hide physical sub-cameras from getCameraIdList. This reduces the number of cameras that can be directly opened by apps, eliminating the need for apps to have complex camera selection logic.

- (Android 11 or higher) For a logical multi-camera device supporting optical zoom, implement the ANDROID_CONTROL_ZOOM_RATIO API, and use ANDROID_SCALER_CROP_REGION for aspect ratio cropping only. ANDROID_CONTROL_ZOOM_RATIO enables the device to zoom out and maintain better precision. In this case, the HAL must adjust the coordinate system of ANDROID_SCALER_CROP_REGION, ANDROID_CONTROL_AE_REGIONS, ANDROID_CONTROL_AWB_REGIONS, ANDROID_CONTROL_AF_REGIONS, ANDROID_STATISTICS_FACE_RECTANGLES, and ANDROID_STATISTICS_FACE_LANDMARKS to treat the post-zoom field of view as the sensor active array. For more information on how ANDROID_SCALER_CROP_REGION works together with ANDROID_CONTROL_ZOOM_RATIO, see camera3_crop_reprocess#cropping.

- For multi-camera devices with physical cameras that have different capabilities, make sure the device advertises support for a certain value or range for a control only if the whole zoom range supports the value or range. For example, if the logical camera is composed of an ultrawide, a wide, and a telephoto camera, do the following:

  - If the active array sizes of the physical cameras are different, the camera HAL must do the mapping from the physical cameras' active arrays to the logical camera active array for ANDROID_SCALER_CROP_REGION, ANDROID_CONTROL_AE_REGIONS, ANDROID_CONTROL_AWB_REGIONS, ANDROID_CONTROL_AF_REGIONS, ANDROID_STATISTICS_FACE_RECTANGLES, and ANDROID_STATISTICS_FACE_LANDMARKS so that, from the app's perspective, the coordinate system is the logical camera's active array size.

  - If the wide and telephoto cameras support autofocus, but the ultrawide camera is fixed focus, make sure the logical camera advertises autofocus support. The HAL must simulate an autofocus state machine for the ultrawide camera so that when the app zooms out to the ultrawide lens, the fact that the underlying physical camera is fixed focus is transparent to the app, and the autofocus state machines for the supported AF modes work as expected.

  - If the wide and telephoto cameras support 4K @ 60 fps, and the ultrawide camera only supports 4K @ 30 fps or 1080p @ 60 fps, but not 4K @ 60 fps, make sure the logical camera doesn't advertise 4K @ 60 fps in its supported stream configurations. This guarantees the integrity of the logical camera capabilities, ensuring that the app won't run into the issue of not achieving 4K @ 60 fps at an ANDROID_CONTROL_ZOOM_RATIO value of less than 1.


- Starting from Android 10, a logical multi-camera device isn't required to support stream combinations that include physical streams. If the HAL supports a combination with physical streams:

  - (Android 11 or higher) To better handle use cases such as depth from stereo and motion tracking, make the field of view of the physical stream outputs as large as can be achieved by the hardware. However, if a physical stream and a logical stream originate from the same physical camera, hardware limitations might force the field of view of the physical stream to be the same as the logical stream.

  - To address the memory pressure caused by multiple physical streams, make sure apps use discardFreeBuffers to deallocate the free buffers (buffers that are released by the consumer, but not yet dequeued by the producer) if a physical stream is expected to be idle for a period of time.

  - If physical streams from different physical cameras aren't typically attached to the same request, make sure apps use surface group so that one buffer queue is used to back two app-facing surfaces, reducing memory consumption.
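The rule of advertising a value only if the whole zoom range supports it amounts to a set intersection across the physical cameras reachable through zoom. The Python sketch below applies it to the 4K @ 60 fps example above. Representing a stream configuration as a (resolution label, fps) pair is a simplification chosen for illustration, and `advertisable_configs` is a hypothetical helper, not HAL code.

```python
from typing import Dict, FrozenSet, Tuple

Config = Tuple[str, int]  # (resolution label, frames per second), e.g. ("4K", 60)


def advertisable_configs(per_camera: Dict[str, FrozenSet[Config]]) -> FrozenSet[Config]:
    """Return the configurations every physical camera in the zoom range supports."""
    if not per_camera:
        return frozenset()
    # Intersect across all sub-cameras: one camera lacking a config removes it.
    return frozenset.intersection(*per_camera.values())


per_camera = {
    "ultrawide": frozenset({("4K", 30), ("1080p", 60)}),
    "wide": frozenset({("4K", 60), ("4K", 30), ("1080p", 60)}),
    "telephoto": frozenset({("4K", 60), ("4K", 30), ("1080p", 60)}),
}
```

The intersection fits stream configurations; for autofocus the guidance above differs, because the logical camera advertises autofocus and the HAL simulates the state machine for the fixed-focus camera.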