SurfaceTexture is a combination of a surface and an OpenGL ES (GLES) texture. SurfaceTexture instances provide surfaces that output to GLES textures.

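SurfaceTexture contains an instance of BufferQueue for which the app is the consumer. When the producer queues a new buffer, the onFrameAvailable() callback notifies the app. The app then calls updateTexImage(), which releases the previously held buffer, acquires the new buffer from the queue, and makes EGL calls so that the buffer is available to GLES as an external texture.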
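The release-then-acquire order described above can be sketched with a small toy model. This is not the Android implementation: `ToyConsumer`, its string "buffers," and the `releasedBuffers()` helper are hypothetical names used only for illustration, and the EGL step is reduced to a comment.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy stand-in for the consumer side of a BufferQueue; not an Android API. */
final class ToyConsumer {
    private final Deque<String> queued = new ArrayDeque<>(); // filled buffers waiting for the consumer
    private final Deque<String> released = new ArrayDeque<>(); // buffers handed back for reuse
    private final Runnable onFrameAvailable; // stands in for the onFrameAvailable() callback
    private String current; // buffer currently exposed as the texture

    ToyConsumer(Runnable onFrameAvailable) {
        this.onFrameAvailable = onFrameAvailable;
    }

    /** Producer side: queue a filled buffer and notify the consumer. */
    void queueBuffer(String buffer) {
        queued.addLast(buffer);
        onFrameAvailable.run();
    }

    /** Consumer side: follows the order of steps described for updateTexImage(). */
    String updateTexImage() {
        if (queued.isEmpty()) {
            return current; // nothing new was queued, so the current buffer stays bound
        }
        if (current != null) {
            released.addLast(current); // 1. release the previously held buffer
        }
        current = queued.removeFirst(); // 2. acquire the new buffer from the queue
        // 3. a real implementation would now make EGL calls to expose the
        //    buffer to GLES as an external texture
        return current;
    }

    /** Snapshot of the buffers released so far, oldest first. */
    List<String> releasedBuffers() {
        return new ArrayList<>(released);
    }
}
```

In this sketch the consumer holds exactly one buffer at a time, which is why each call to `updateTexImage()` that finds a new frame also returns one buffer to the producer.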

External GLES textures

External GLES textures (GL_TEXTURE_EXTERNAL_OES) differ from standard GLES textures (GL_TEXTURE_2D) in the following ways:

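- External textures render textured polygons directly from data received from BufferQueue.

- External texture renderers are configured differently from standard GLES texture renderers.

- External textures can't perform all standard GLES texture activities.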

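The main benefit of external textures is that they can render directly from BufferQueue data. When a SurfaceTexture instance creates a BufferQueue instance for an external texture, it sets the consumer usage flags to GRALLOC_USAGE_HW_TEXTURE, which ensures that the data in the buffer is recognizable by GLES.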
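To illustrate how an external texture renderer is configured differently, the sketch below holds two GLSL ES fragment shaders as Java strings. The external variant enables the GL_OES_EGL_image_external extension and samples through `samplerExternalOES` instead of `sampler2D`. The class name and the `vTextureCoord` and `sTexture` identifiers are assumptions chosen for this example; the two integer constants are the standard values of the GL texture targets.

```java
/** Illustrative shader sources; the class and variable names are hypothetical. */
final class ExternalTextureShaders {
    /** Value of GL_TEXTURE_2D, the bind target for standard textures. */
    static final int GL_TEXTURE_2D = 0x0DE1;

    /** Value of GL_TEXTURE_EXTERNAL_OES, the bind target for external textures. */
    static final int GL_TEXTURE_EXTERNAL_OES = 0x8D65;

    /** Fragment shader for a standard 2D texture. */
    static final String FRAGMENT_SHADER_2D =
            "precision mediump float;\n"
            + "varying vec2 vTextureCoord;\n"
            + "uniform sampler2D sTexture;\n"
            + "void main() {\n"
            + "    gl_FragColor = texture2D(sTexture, vTextureCoord);\n"
            + "}\n";

    /** Fragment shader for an external texture: extension directive plus a different sampler type. */
    static final String FRAGMENT_SHADER_EXTERNAL =
            "#extension GL_OES_EGL_image_external : require\n"
            + "precision mediump float;\n"
            + "varying vec2 vTextureCoord;\n"
            + "uniform samplerExternalOES sTexture;\n"
            + "void main() {\n"
            + "    gl_FragColor = texture2D(sTexture, vTextureCoord);\n"
            + "}\n";

    private ExternalTextureShaders() {}
}
```

Apart from the extension directive and the sampler type, the two shaders are identical; the texture object itself would be bound to GL_TEXTURE_EXTERNAL_OES rather than GL_TEXTURE_2D.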

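Because SurfaceTexture instances interact with an EGL context, an app can call their methods only while the EGL context that owns the texture is current on the calling thread. For details, see the SurfaceTexture class documentation.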

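Timestamps and transformations

SurfaceTexture instances include the getTimestamp() method, which retrieves a timestamp, and the getTransformMatrix() method, which retrieves a transformation matrix. Calling updateTexImage() sets both the timestamp and the transformation matrix. Each buffer that BufferQueue passes includes transformation parameters and a timestamp.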

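Transformation parameters are useful for efficiency. In some cases, the source data is in the wrong orientation for the consumer. Instead of rotating the data before sending it to the consumer, send the data in its existing orientation together with a transform that corrects it. When the data is used, the transformation matrix can be merged with other transformations, which minimizes overhead.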
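As a sketch of what merging the matrix with other transformations can look like, the example below multiplies two 4x4 column-major matrices (the layout that getTransformMatrix() fills in) and applies the result to a texture coordinate. The `FLIP_VERTICAL` and `ZOOM_CENTER` matrices are hypothetical values picked for illustration, not output from a real device, and the example assumes the app's own transform is applied first.

```java
/** Minimal column-major 4x4 helpers; the matrices below are illustrative values only. */
final class TexMatrixDemo {
    /** Hypothetical buffer transform: t' = 1 - t (vertical flip). */
    static final float[] FLIP_VERTICAL = {
        1f, 0f, 0f, 0f,   // column 0
        0f, -1f, 0f, 0f,  // column 1
        0f, 0f, 1f, 0f,   // column 2
        0f, 1f, 0f, 1f    // column 3 (translation)
    };

    /** Hypothetical app transform: sample only the central half of the image. */
    static final float[] ZOOM_CENTER = {
        0.5f, 0f, 0f, 0f,
        0f, 0.5f, 0f, 0f,
        0f, 0f, 1f, 0f,
        0.25f, 0.25f, 0f, 1f
    };

    /** Returns a * b, so b is applied to a coordinate first and a second. */
    static float[] multiply(float[] a, float[] b) {
        float[] r = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0f;
                for (int k = 0; k < 4; k++) {
                    sum += a[k * 4 + row] * b[col * 4 + k];
                }
                r[col * 4 + row] = sum;
            }
        }
        return r;
    }

    /** Transforms the texture coordinate (s, t, 0, 1) and returns the new (s, t). */
    static float[] apply(float[] m, float s, float t) {
        return new float[] {
            m[0] * s + m[4] * t + m[12],
            m[1] * s + m[5] * t + m[13]
        };
    }

    private TexMatrixDemo() {}
}
```

Computing `multiply(FLIP_VERTICAL, ZOOM_CENTER)` once per frame and passing the single result to the shader avoids a separate pass over the pixel data. On Android, `android.opengl.Matrix.multiplyMM()` performs the same column-major multiplication.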

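The timestamp is useful for buffer sources that are time dependent. For example, when setPreviewTexture() connects the producer interface to the output of the camera, frames from the camera can be used to create a video. Each frame needs a presentation timestamp from when the frame was captured, not from when the app received it. The camera code sets the timestamp provided with the buffer, which results in a more consistent series of timestamps.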

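Case study: Grafika's continuous capture

Grafika's continuous capture records frames from a device's camera and displays those frames on screen. To record frames, create a surface with the MediaCodec class's createInputSurface() method and pass the surface to the camera. To display frames, create an instance of SurfaceView and pass the surface to setPreviewDisplay(). Recording frames and displaying them at the same time is a more involved process.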

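The continuous capture activity displays video from the camera while the video is being recorded. In this case, encoded video is written to a circular buffer in memory that can be saved to disk at any time.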

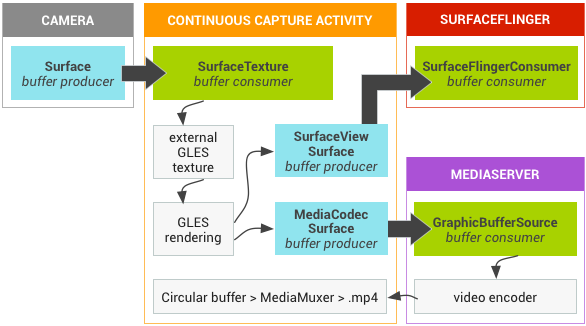

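This flow involves three buffer queues:

- App: The app uses a SurfaceTexture instance to receive frames from the camera and convert them to an external GLES texture.

- SurfaceFlinger: The app declares a SurfaceView instance to display the frames.

- MediaServer: A MediaCodec encoder is configured with an input surface to create the video.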

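In Figure 1, the arrows indicate how data propagates from the camera. BufferQueue instances are shown in color, with producers in teal and consumers in green.

Figure 1. Grafika's continuous capture activity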

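Encoded H.264 video goes to a circular buffer in RAM in the app process.

When the user presses the capture button, the MediaMuxer class writes the encoded video to an MP4 file on disk.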
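The circular buffer described above can be approximated as a byte-bounded queue of encoded packets that evicts the oldest packets when space runs out. The sketch below is a simplified model, not Grafika's code: `ToyCircularPacketBuffer`, `Packet`, and `snapshotFromFirstKeyFrame()` are hypothetical names, and starting the snapshot at a key frame is an assumption based on the fact that an H.264 stream is only decodable from a key frame onward.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy byte-bounded buffer of encoded packets; names are hypothetical. */
final class ToyCircularPacketBuffer {
    /** One encoded packet with its presentation timestamp in microseconds. */
    static final class Packet {
        final byte[] data;
        final long ptsUs;
        final boolean keyFrame;

        Packet(byte[] data, long ptsUs, boolean keyFrame) {
            this.data = data;
            this.ptsUs = ptsUs;
            this.keyFrame = keyFrame;
        }
    }

    private final Deque<Packet> packets = new ArrayDeque<>();
    private final int capacityBytes;
    private int usedBytes;

    ToyCircularPacketBuffer(int capacityBytes) {
        this.capacityBytes = capacityBytes;
    }

    /** Adds a packet, evicting the oldest packets until the new one fits. */
    void add(byte[] data, long ptsUs, boolean keyFrame) {
        if (data.length > capacityBytes) {
            throw new IllegalArgumentException("packet larger than buffer capacity");
        }
        while (usedBytes + data.length > capacityBytes) {
            usedBytes -= packets.removeFirst().data.length;
        }
        packets.addLast(new Packet(data.clone(), ptsUs, keyFrame));
        usedBytes += data.length;
    }

    /** Returns the buffered packets starting at the oldest key frame, oldest first. */
    List<Packet> snapshotFromFirstKeyFrame() {
        List<Packet> out = new ArrayList<>();
        boolean started = false;
        for (Packet p : packets) {
            if (p.keyFrame) {
                started = true;
            }
            if (started) {
                out.add(p);
            }
        }
        return out;
    }
}
```

When the capture button is pressed, a writer could iterate over the snapshot and hand each packet, with its timestamp, to a muxer. Because eviction happens on every `add()`, the buffer always holds the most recent stretch of video that fits in the configured capacity.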

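All BufferQueue instances in the app are handled with a single EGL context, and the GLES operations are performed on the UI thread. The handling of encoded data (managing the circular buffer and writing it to disk) is done on a separate thread.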

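When the SurfaceView class is used, the surfaceCreated() callback creates the EGLContext and EGLSurface instances for the display and the video encoder. When a new frame arrives, SurfaceTexture performs four activities:

- Acquires the frame.

- Makes the frame available as a GLES texture.

- Renders the frame with GLES commands.

- Forwards the transform and timestamp for each EGLSurface instance.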

The encoder thread then pulls the encoded output from MediaCodec and stores it in memory.

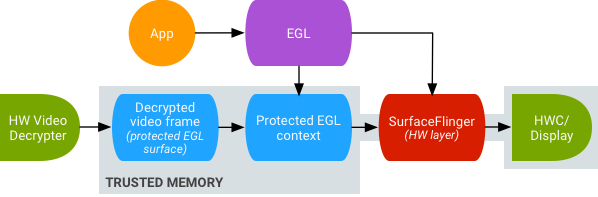

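Secure texture video playback

Android supports GPU post-processing of protected video content. This lets apps use the GPU for complex, nonlinear video effects (such as warps), map protected video content onto textures for use in general graphics scenes (for example, using GLES), and handle virtual reality (VR).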

Figure 2. Secure texture video playback

Support is enabled through the following two extensions:

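- EGL extension (EGL_EXT_protected_content): Enables the creation of protected GL contexts and surfaces, both of which can operate on protected content.

- GLES extension (GL_EXT_protected_textures): Enables tagging textures as protected so that they can be used as framebuffer texture attachments.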

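Android lets SurfaceTexture and ACodec (libstagefright.so) send protected content even if the window's surface doesn't queue to SurfaceFlinger, and it provides a protected video surface for use within a protected context. This is done by setting the protected consumer bit (GRALLOC_USAGE_PROTECTED) on surfaces created in a protected context (verified by ACodec).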

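Secure texture video playback sets the foundation for strong digital rights management (DRM) implementations in the OpenGL ES environment. Without a strong DRM implementation, such as Widevine Level 1, many content providers don't allow their high-value content to be rendered in the OpenGL ES environment, which blocks important VR use cases such as watching DRM-protected content in VR.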

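The Android Open Source Project (AOSP) includes framework code for secure texture video playback. Driver support is up to original equipment manufacturers (OEMs). Device implementers must implement the EGL_EXT_protected_content and GL_EXT_protected_textures extensions. When using your own codec library (to replace libstagefright), note the changes in /frameworks/av/media/libstagefright/SurfaceUtils.cpp that allow buffers marked with GRALLOC_USAGE_PROTECTED to be sent to ANativeWindow (even if ANativeWindow doesn't queue directly to the window composer), as long as the consumer usage bits contain GRALLOC_USAGE_PROTECTED. For detailed documentation on implementing the extensions, refer to the Khronos registries (EGL_EXT_protected_content and GL_EXT_protected_textures).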